zeldaring said:

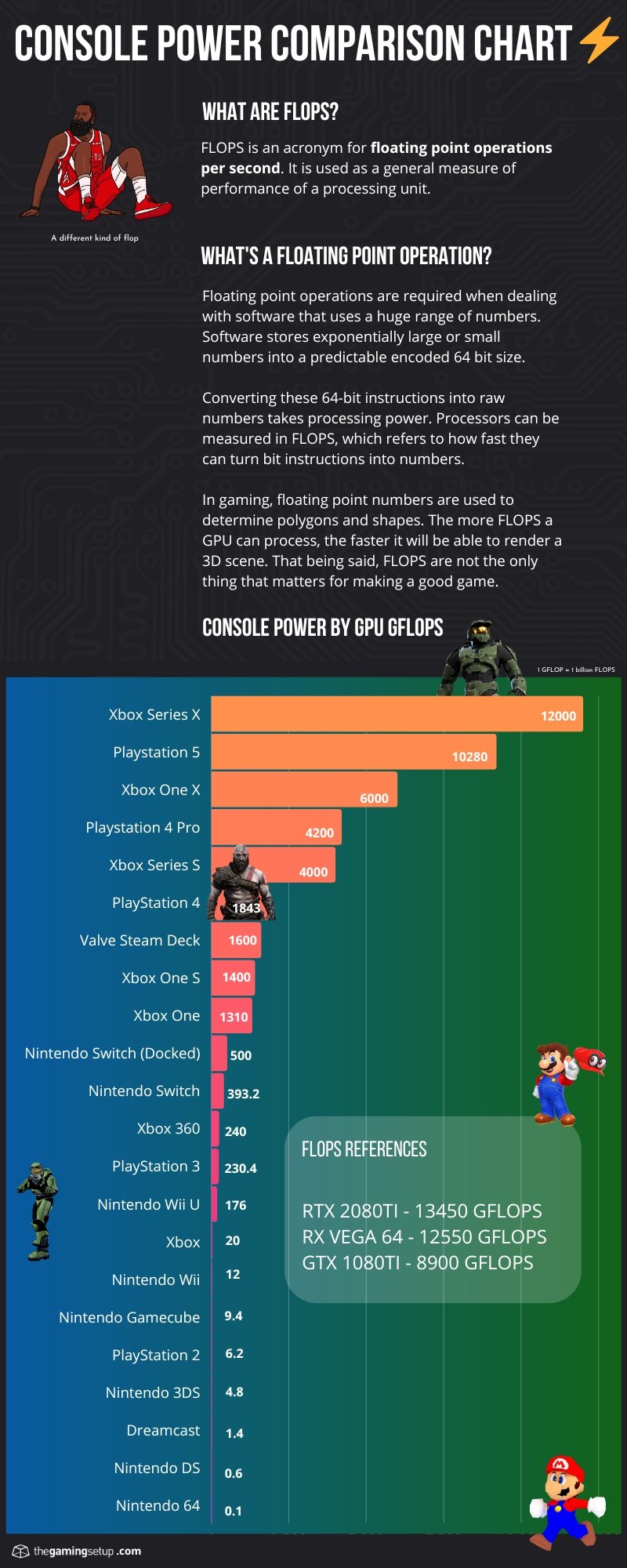

We heard the same thing with Wii U ports: the newer architecture would make games run better than on the 360, and the majority didn't. I don't expect that with Switch 2, but the best thing to do is wait and see the ports; that honestly gives the best idea of where Switch 2 is at.

False equivalence. The Switch, which wasn't too different from the Wii U in terms of pure TFLOPS, did manage it without breaking a sweat, and that's because of the ARM/NVIDIA architecture. The Wii U opted for the IBM stuff, which was not up to par with x86 architectures.

But indeed, the wait-and-see approach is always the best.

Switch Friend Code : 3905-6122-2909