zero129 said:

goopy20 said:

Maybe that's the only parameter that matters for fanboys. But Halo not being on ps5 is just a business decision, whereas Horizon Forbidden West being exclusive to the ps5 is a design decision. Big difference there in how far the developers can potentially take their ambitions.

The truth is that MS is too busy being all consumer friendly that they ARE forgetting the people who are excited about next gen. You know, the people who want to see exciting new games, conceived to be impossible on current gen. I get what you're saying and that you won't settle for anything less than the best version of Halo Infinite. But how many people bought an X1X or a high-end gpu to play the best version of base console games?

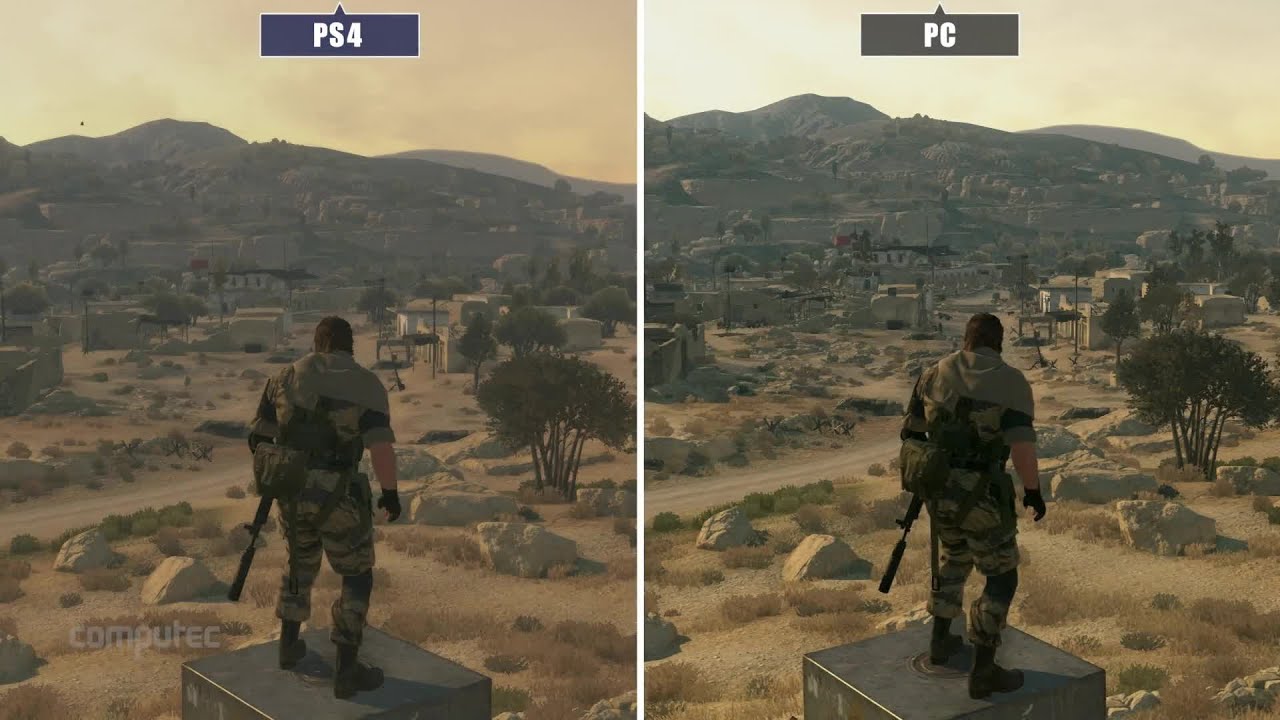

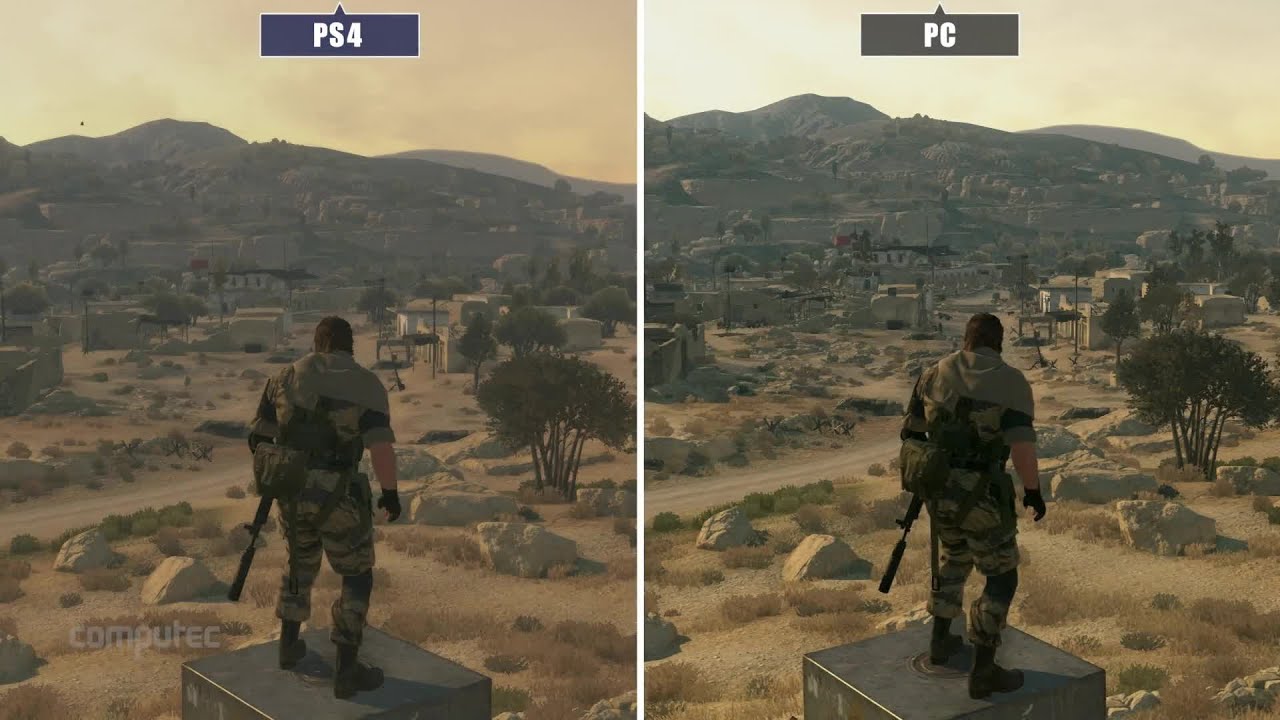

There simply is a difference between a generational jump we typically see when new consoles come out, and scalable graphics like we see on pc. This is the difference.

Generational leap

And it's not just the graphics in this screenshot, it's about the entire scope and visual package of the game.

Scalable graphics on pc

Scalable graphics on pc and the mid-gen consoles are a lot more subtle. Obviously the resolution and framerate jump is noticeable when you're playing the game, but you're still getting the exact same game (same levels, ai, physics etc.) whether you're playing on a 1.3TF Xbox One or an X1X/high-end pc.


Scalable

The Witcher 3 PC

How do you explain this? Do the images of The Witcher 3 not look at least 2 to 3 gens apart?

I wonder how many devs it took to make both versions....


https://www.eurogamer.net/articles/digitalfoundry-2019-the-witcher-3-switch-tech-interview

Digital Foundry: How long did this take to develop?

Piotr Chrzanowski: Over a year now. Well it depends on whether you want to add the business stuff, but then I would say it's around 12 months at this point.

Digital Foundry: How did the first attempts at getting Witcher 3 running on Switch pan out?

Piotr Chrzanowski: We had the project set up in a very clear [direction]. We wanted to achieve each stage that was planned - what was aimed at. Of course one of the first things was to make sure that the engine would actually run on the Switch. I would say the other big milestone was to have a piece of the game actually playable. So we went with Kaer Morhen with the prologue, because it was a self-contained world that has all the systems, including combat etc. We looked through that, we saw how we needed to shape the next stages of the project. And then we could expand to the White Orchard, and then to the rest of the world.

https://www.eurogamer.net/articles/digitalfoundry-2019-the-witcher-3-switch-tech-analysis

Pick a busy street in Novigrad for example, and the lowest reading comes in at just 810x456. For perspective, that's 63 per cent on each axis of 720p.
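For what it's worth, the 63 per cent figure is easy to check yourself. This is only a quick sketch, assuming "720p" means 1280x720; the function name `axis_scale` is made up for illustration. It also shows the share of total pixels, which is a lot lower than the per-axis number suggests.

```python
# Quick check of the quoted 810x456 figure against 720p.
# Assumption: "720p" is taken to mean 1280x720.
def axis_scale(width, height, ref_width=1280, ref_height=720):
    """Return the per-axis scale of a resolution relative to a reference."""
    return width / ref_width, height / ref_height

w_scale, h_scale = axis_scale(810, 456)

# Share of the total pixel count compared with 1280x720.
pixel_share = (810 * 456) / (1280 * 720)

print(f"width: {w_scale:.1%}, height: {h_scale:.1%}, pixels: {pixel_share:.1%}")
```

So roughly 63 per cent per axis works out to only around 40 per cent of the pixels of a full 720p frame.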

Jump to PS4, and there's not a tangible difference in NPC density, just going by eye. Of course, their draw distance is compromised on Switch, but the rendering range on NPCs is generous enough to cram everyone in. The only snag is that the frame-rate on characters is halved towards the distance.

Beyond the inevitable blurriness, there are further downsides. The first is pop-in; often it's well-handled, but Switch has limits in how quickly it can draw everything in. The big trouble spot is cut-scenes; fast camera cuts overwhelm the system, and the way geometry flickers in and out can be pretty glaring. Detail-rich areas such as Novigrad can also push the streaming systems hard, resulting in pop-in that varies on repeat tests.

The Witcher 3's drive for compression also has an impact beyond FMV quality. Textures and sound also take a hit. Texture assets and filtering are of a notably lower quality, where a form of trilinear filtering is used which adds to the generally blurry look - while the assets themselves are essentially very similar to the PC version's low setting.

Docked play is solid, but dense areas like Novigrad will see performance drop to something closer to 20fps. Portable play is similar, but not quite as robust overall.

A lot of work, a lot of compromises and downgrades. And what does it prove? TW3 has no next-gen gameplay, just better graphics!

Yep, if you work long enough on it and compromise enough, you can port things down quite a lot.

Minecraft on new 3DS

Minecraft on PC

Of course TW3 and Minecraft have nothing to do with automatic scaling. Tons of work went into these versions, with severe compromises. TW3 got the gameplay in, yet Minecraft not so much.

Anyway these examples have nothing to do with being held back, since the 3DS version of MC and Switch version of TW3 came later and had no influence on the design of each game.

Last edited by SvennoJ - on 13 July 2020