shikamaru317 said:

victor83fernandes said:

1 - 600 back in 2006 was worth a lot more than 600 now

2 - This could be $600, but it's a premium model, only for the hardcore who can afford it, just like the Xbox One X at $500 vs. the Xbox One S at $250.

3 - They don't expect it to sell a lot. Just like the current gen, the base consoles sell the most; it's all about having the option for people willing to pay.

4 - Options are good, it caters to everyone, ideally 3 models, S-AD for people who don't care for graphics and will buy only digital, S model for people who are not fussed with graphics (same people who bought an S instead of the X this gen), and the series X, for the kind of people who bought the X this generation because they are willing to pay for premium graphics and hardware.

5 - The cost of the Series X is definitely more than $460. I have no idea how you came up with that number, but 12TF next-gen graphics + a good CPU + controller + SSD + motherboard, power unit, antennas, case, cables, RAM, 4K Blu-ray drive + cooling system + shipping + building costs + labour costs definitely add up to more than $500. Do your research properly.

|

1. Perhaps, but I don't think gamers will be any more forgiving of $600 now than they were 14 years ago. Gamers are an entitled bunch; just look at how much they rage when a publisher suggests increasing the price of games from $60, where it has been for 15+ years, even though every other product has seen inflation-based price increases in that time span.

2. I doubt it. Phil has said in interviews that Microsoft understands what gamers consider to be a reasonable price for hardware. I can't see him going for $600, that is definitely over gamer expectations.

3. I'm pretty sure they have higher expectations for Series X than you think. Sales expectations were low for Xbox One X because it was a mid-gen refresh; MS knew that selling people a $500 system 3 years before next gen starts is a tougher prospect than selling them a $500 console at the start of a new gen. Yes, sales expectations will be higher for Series S than Series X, but they likely want to sell at least 25m Series X consoles this gen imo, not 5m or so like Xbox One X.

4. I actually agree with you that they need a disc drive S model for slightly more than the discless model. That might be the model I buy if they release it. I'm just not sure that it is happening: every leak so far has mentioned S being discless, and none of them have mentioned the possibility of a slightly more expensive disc drive S model. It's also harder to sell people on a 4 tflop console for $350 when PS5 is expected to be about 10 tflops for $450, only $100 more for 6 more tflops of graphical power. They could go for an unusual price like $320 or $330 for the disc drive model, but console pricing almost always comes in increments of $50.

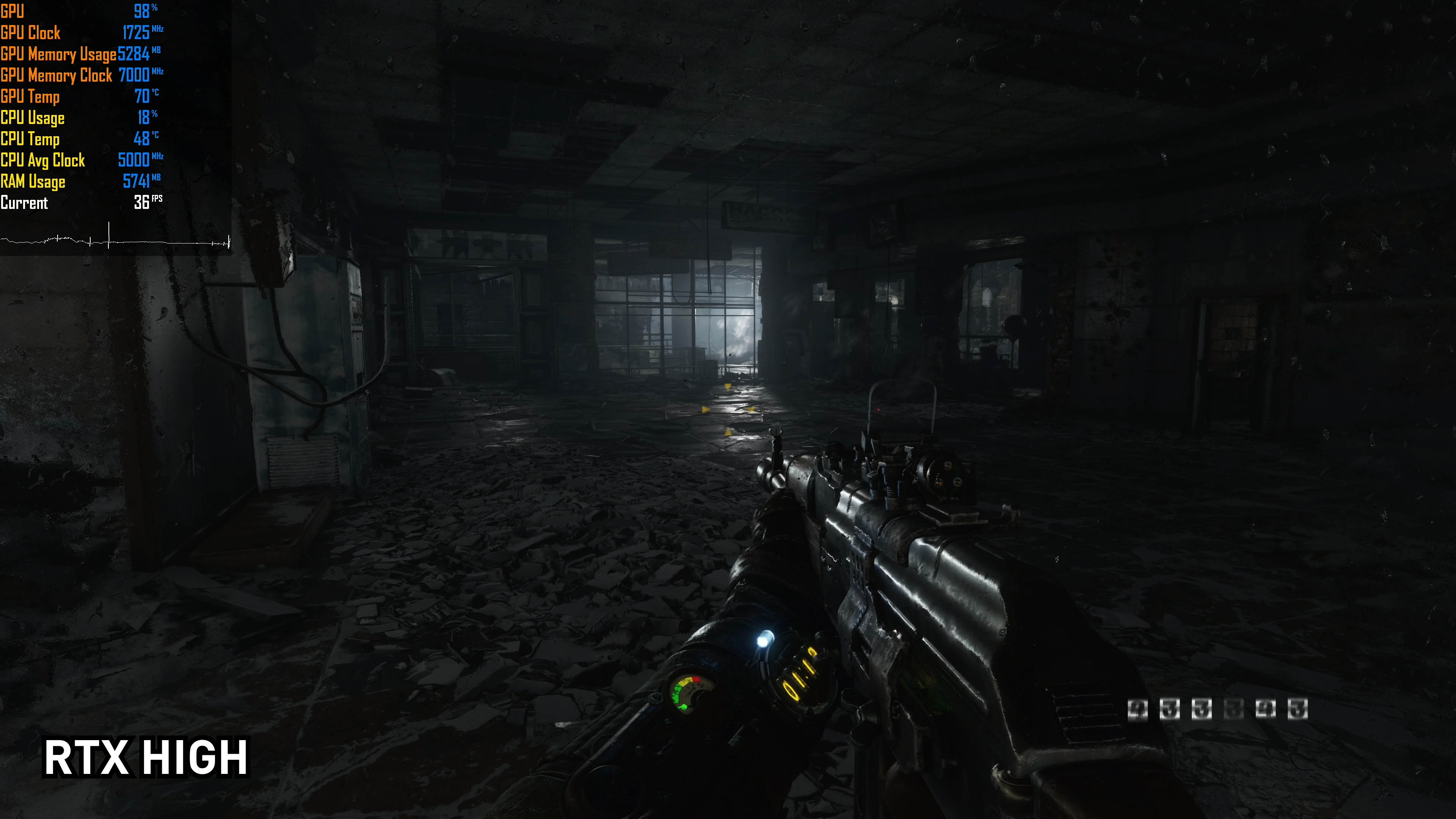

5. The $460-510 estimated cost to build for Series X comes from industry analyst Daniel Ahmad (ZhugeEX), based on the rumored specs for Xbox Series X compared to the rumored specs for PS5, which was recently leaked to have a $450 cost to build. If he is right, I definitely expect MS to sell Series X for $500.

|

1 - It's not "perhaps", it's definitely. It's called inflation: $600 back then is over $800 now, so $600 now is a bargain. People buy $1,500 phones now; back then that was unthinkable. People are ready to pay. New games will be $65, so only people with money will jump in on launch day. Games haven't jumped in price yet because you are effectively already paying much more: if you buy 1 game per month and pay $15 for online, then each game costs you $75. Back then PS3 online was free.

2 - But $600 is very, very reasonable for hardware that costs over $700; those are his own words. Gamers bought the X and thought it was reasonable at $500 with much lower specs, and some gamers even buy $1,500 PCs and think that's a reasonable price. It's all relative: if the machine is 12 teraflops with an SSD and better architecture, then $600 is more than reasonable.

3 - Wrong, expectations for the X were high; in fact there was more fuss about it than about the Series X. I believe they have already accepted that they can't beat Sony no matter what. If they thought they could, they wouldn't be in a rush to market the thing, they would have waited. 25 million Series X? I don't know what drugs you are on, but 70-80% of sales will definitely be the cheap model. The Series X will sell around 15 million, and the Series S around 40 million.

4 - Why? People on this website are hardcore gamers, so why buy the cheap model if there's a better model available? I will always go for the best of the best. My only doubt is whether I should just build a PC, which can play Xbox games plus thousands of PC games and emulators, with free online and cheaper games. PS5, on the other hand, is a given for me because of the exclusives. Xbox won't even have exclusives for the first 2 years, so I can wait and see.

Yeah, it's hard to sell Xbox at all. It could have the same power as a PS5 with a $100 difference and people would still go for PS5. $100 is only slightly more than the price of 1 game; $100 is nothing these days, it's basically 1 night out with the girlfriend.

Also, 4TF is just a rumour, and the 11TF PS5 is also just a rumour; that could be the PS5 Pro and not the lower model. We don't know yet.

With that said, let's not forget that this generation the PS4 was more powerful and a full $100 cheaper, with no need to buy rechargeable batteries either, so that was more like $120 cheaper.

5 - Yeah, if that guy is so good, then how much did he say the shipping costs were? And the manufacturing costs, as in the salaries of employees? How much did he say a next-gen controller was? How much were the cables and antennas?

I haven't even checked and I can assure you he's wrong. Fortunately I've got a brain, and I've been reading gaming news for several generations now.

Rumoured PS5 specs? C'mon, start thinking for yourself. The specs haven't even been revealed yet, so how could analysts know the final specs if even developers don't know?