shikamaru317 said:

You seem to be forgetting some things:

1. Control is a game that was designed to scale across everything from the base Xbox One, with its 1.3 TFLOP GPU and Jaguar CPU, to PCs with 12 TFLOP 2080 Ti GPUs and Core i9 CPUs. As a late-gen game it had to run on a huge range of hardware, which means visual compromises had to be made at both ends of the scale to get it running on everything. If Control had been designed as a PC exclusive, with minimum specs at 4 TFLOP and recommended specs at 10 TFLOP for instance, you would have a much better-looking game.

2. Nvidia's 20 series will be 2 years old when next-gen consoles launch. There is a good chance that AMD's RDNA 2 ray tracing will be more efficient than the 20 series' ray tracing, even if it is less efficient than Nvidia's upcoming 30 series. And like I said before, developers may make ray tracing an optional feature in many next-gen games, giving gamers a choice between ray tracing and a higher resolution and/or framerate.

3. PC games don't receive the same level of optimization that console games do, and console hardware has less OS overhead than PCs. So comparing Control on PC to next-gen console games and concluding that you won't see a graphical improvement if they aim for native 4K is a bad comparison.

What does all of that mean? For one thing, the minimum spec for next gen will increase by over 4x compared to last gen, from 1.3 TFLOP GCN to 4 TFLOP RDNA 2 (equivalent to at least 6 TFLOP GCN, possibly more, especially if MS decides to overclock XSS somewhat before release). Assuming the same resolution as last gen, 900p on XB1 versus 900p on XSS, that extra 4x power can be fully put toward pushing graphics forward with ray tracing and other improvements.
Then the graphical improvements enabled by the 4x spec increase on the low end can be carried over to the two higher-end consoles, PS5 and XSX, with resolution scaled up as high as it can go without compromising framerate, using variable resolution tech to lower resolution in demanding scenes and raise it in less demanding ones. Will devs actually aim for 900p on XSS so that the full 4x spec improvement goes toward graphical improvements? Some probably will, while others will follow Microsoft's target and aim for 1080p. I'll be very surprised if we see more than a handful of games that run below 900p on XSS, and the same goes for 1800p on XSX. As for PS5, it's hard to say without knowing more about its specs. If it is actually 9.2 TFLOP, with Sony attempting to overclock it to 10 TFLOP as rumored, I would say we'll see 1440p as the minimum for PS5. And yes, I agree with you that any PS5 and XSX games that run below native 4K will likely use methods like checkerboarding and temporal injection to improve their upscaling to 4K.
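The "over 4x" figure in the quote can be sanity-checked with some rough per-pixel arithmetic. This is a minimal sketch, assuming the TFLOP numbers quoted above (including the poster's own estimate that 4 TFLOP RDNA 2 is worth about 6 TFLOP GCN, which is not an official figure), standard 16:9 frame sizes, and GPU cost that scales linearly with pixel count, which real games don't strictly follow. The function names are illustrative only.

```python
# Back-of-the-envelope check of the "over 4x" claim quoted above.
# Assumptions (not official figures): Xbox One = 1.3 TFLOP GCN, Series S
# = 4 TFLOP RDNA 2 treated as ~6 TFLOP GCN-equivalent (the poster's estimate),
# 16:9 frames, and GPU cost scaling linearly with pixel count.

RESOLUTIONS = {
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "1800p": (3200, 1800),
    "2160p": (3840, 2160),
}

XB1_TFLOPS = 1.3
XSS_GCN_EQUIV_TFLOPS = 6.0  # poster's estimate, not an official spec


def pixels(name: str) -> int:
    """Number of pixels rendered per frame at a 16:9 resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def per_pixel_budget(tflops: float, resolution: str) -> float:
    """GPU throughput available per rendered pixel (arbitrary units)."""
    return tflops * 1e12 / pixels(resolution)


def uplift(xss_resolution: str, xb1_resolution: str = "900p") -> float:
    """How much more compute per pixel Series S has than Xbox One."""
    xss = per_pixel_budget(XSS_GCN_EQUIV_TFLOPS, xss_resolution)
    xb1 = per_pixel_budget(XB1_TFLOPS, xb1_resolution)
    return xss / xb1


if __name__ == "__main__":
    for res in ("900p", "1080p"):
        print(f"XSS at {res} vs XB1 at 900p: {uplift(res):.2f}x per pixel")
    print(f"1800p / 900p pixel ratio: {pixels('1800p') / pixels('900p'):.2f}x")
```

Under these assumptions the per-pixel uplift is about 4.6x if Series S stays at 900p, but only about 3.2x at Microsoft's 1080p target, since 1080p has 1.44x the pixels of 900p.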

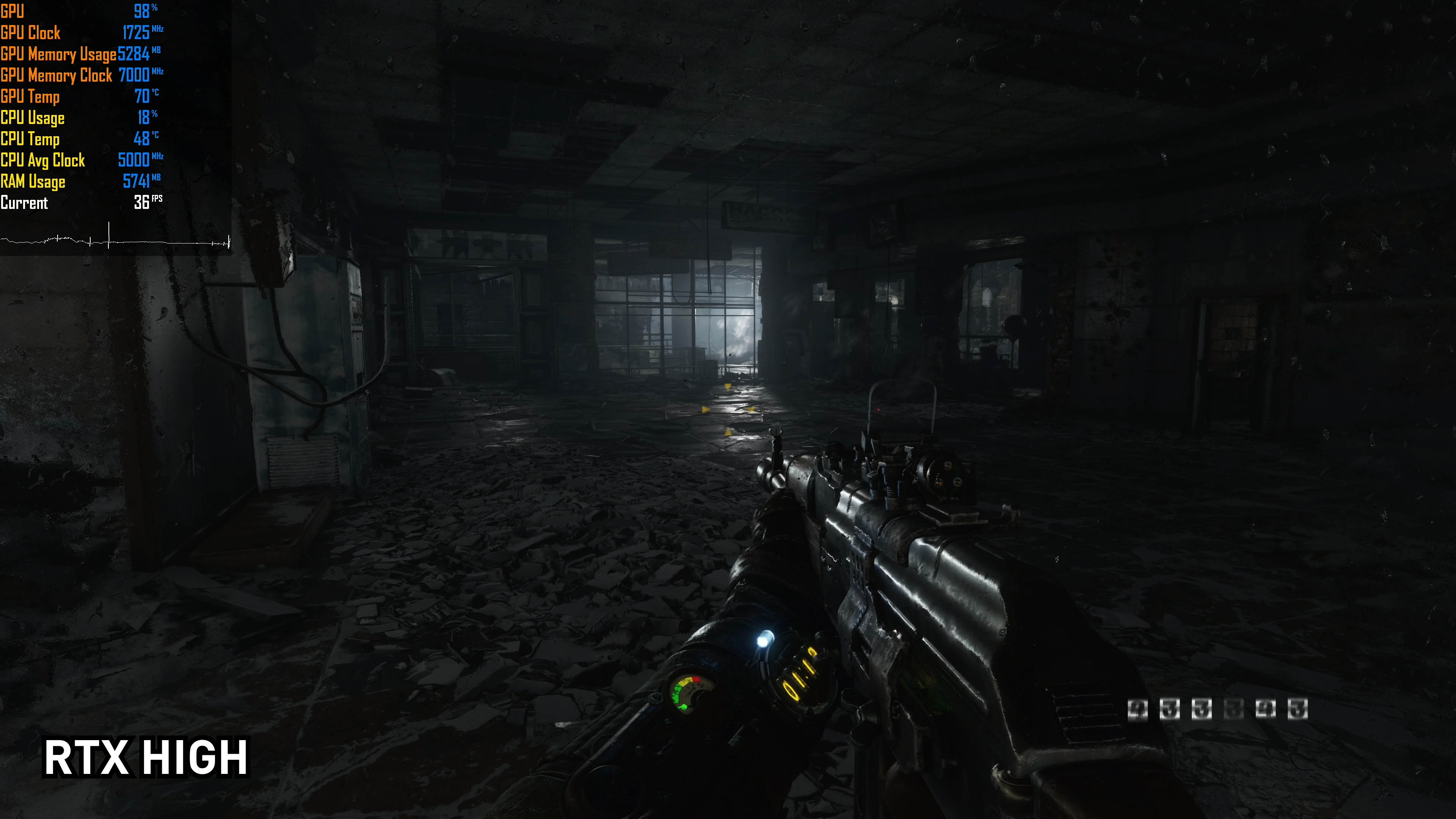

Almost any current-gen game will cripple the performance of a 2080 Ti at native 4K with RT enabled.

In any case, this whole "Series S is a great idea" argument rests on the assumption that developers will waste a ton of resources on native 4K and ultra graphics settings (that most people won't notice) on the PS5. Yes, if they did that, they could lower the graphics settings and run the game at 1080p on Series S. The only thing you seem to be forgetting is that developers don't just waste resources like that, and if they did, they would be doing a poor job. In reality they will be as efficient as possible: they'll use techniques like checkerboard rendering that don't take much of a performance hit, and avoid graphics settings that cost a lot of resources for a relatively small gain in visuals.
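For a rough sense of why checkerboard rendering is cheaper than native 4K, here is a simplified sketch that counts freshly shaded pixels per frame. It assumes a per-pixel checkerboard that alternates every frame, with the other half reconstructed from the previous frame. Real implementations work on 2x2 pixel quads and use motion vectors for reconstruction, and that reconstruction has its own cost, so these counts understate the true expense. The function names are hypothetical.

```python
# Simplified per-pixel checkerboard: each frame shades one "color" of the
# board, alternating every frame; the other half would be reconstructed from
# the previous frame (reconstruction cost is ignored here).


def is_shaded(x: int, y: int, frame: int) -> bool:
    """True if pixel (x, y) is freshly shaded on the given frame."""
    return (x + y + frame) % 2 == 0


def shaded_per_frame(width: int, height: int, frame: int = 0) -> int:
    """Closed-form count of pixels freshly shaded in one frame."""
    total = width * height
    if total % 2 == 0:
        return total // 2
    # Odd pixel count: the phase that includes pixel (0, 0) gets the extra one.
    return total // 2 + (1 if frame % 2 == 0 else 0)


def brute_force_count(width: int, height: int, frame: int) -> int:
    """Count shaded pixels directly (only practical for small grids)."""
    return sum(
        is_shaded(x, y, frame) for y in range(height) for x in range(width)
    )


if __name__ == "__main__":
    # Small grids: closed form matches brute force, and two consecutive
    # frames together cover every pixel exactly once.
    for w, h in [(4, 4), (5, 3), (7, 6)]:
        for f in (0, 1):
            assert shaded_per_frame(w, h, f) == brute_force_count(w, h, f)
        assert shaded_per_frame(w, h, 0) + shaded_per_frame(w, h, 1) == w * h

    print(f"native 4K:       {3840 * 2160:,} pixels/frame")
    print(f"checkerboard 4K: {shaded_per_frame(3840, 2160):,} pixels/frame")
    print(f"native 1440p:    {2560 * 1440:,} pixels/frame")
    print(f"native 1800p:    {3200 * 1800:,} pixels/frame")
```

By this count a checkerboarded 4K frame shades 4,147,200 pixels, half of native 4K's 8,294,400 and only slightly more than native 1440p's 3,686,400, before reconstruction overhead.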

Also, if what you say is true and they did target 1080p on Series S, wouldn't that mean visual fidelity would have to be scaled down across all platforms? The whole idea of having minimum and high-end console specs sounds pretty terrible to me. Consoles aren't PCs, and I don't want developers making 4 different versions of their games; I want them to focus on a single platform and use it to its fullest potential.

Last edited by goopy20 - on 13 March 2020