Pemalite said:

HollyGamer said:

Yes, because the games you played use engines that were built with CPUs from 2008 and later as the baseline. Imagine if game developers still used the SNES as the baseline for game design: we might still be stuck on 2D, with the ray tracing we have going underutilized.

Having the Xbox One as the baseline means you are stuck on the old Jaguar cores while underutilizing the tech available on Scarlett: the SSD, 256-bit AVX on Ryzen 3000, faster RAM, ray tracing, and the IQ per geometry that is only available on RDNA, etc. That's not including the machine learning tech that could be used to enhance gameplay, and the many other possibilities if Scarlett were the baseline.

As a game designer you are limited by the canvas; you need a bigger canvas and better ink.

|

Engines are simply scalable, that is all there is to it. That doesn't change when new console hardware comes out with new hardware features that get baked into new game engines.

You can turn effects down or off, or use different (less demanding) effects in place of more demanding ones, which is why games like Doom, The Witcher 3, Overwatch and Wolfenstein 2 can scale from high-end PC CPUs right down to the Switch. A game like The Witcher 3 still fundamentally plays the same as the PC variant despite the catastrophic divide in CPU capabilities.

Scaling a game from 3 CPU cores @ 1GHz on the Switch to 6 CPU cores @ 1.6GHz on the PlayStation 4 to 8+ CPU cores @ 3.4GHz on the PC just proves that.

The Switch was certainly not the baseline for those titles; it didn't even exist when those games were being developed. Yet a big open-world game like The Witcher 3 plays great on it, and the game design didn't suffer.

I mean, I get what you are saying, developers do try and build a game to a specific hardware set, but that doesn't mean you cannot scale a game downwards or upwards after the fact.

At the end of the day, things like Ray Tracing can simply be turned off, you can reduce geometric complexity in scenes by playing around with Tessellation factors and more and thus scale across different hardware.
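As a rough illustration of that kind of downward scaling, here is a minimal Python sketch. Everything in it (the `RenderSettings` fields, the `HIGH_END` preset, the `scale_down` helper and the specific values) is hypothetical and not taken from any real engine; it only shows the idea of starting from one high-end configuration and clamping it to what weaker hardware supports.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RenderSettings:
    # Hypothetical knobs, named only for illustration.
    ray_tracing: bool
    tessellation_factor: int
    resolution_scale: float


# Hypothetical "target" preset a game might be authored against.
HIGH_END = RenderSettings(ray_tracing=True, tessellation_factor=16, resolution_scale=1.0)


def scale_down(settings: RenderSettings,
               supports_ray_tracing: bool,
               max_tessellation: int) -> RenderSettings:
    """Return a copy of the settings clamped to what the hardware can handle."""
    return replace(
        settings,
        # Ray tracing stays on only if it was requested and is supported.
        ray_tracing=settings.ray_tracing and supports_ray_tracing,
        # Lower geometric complexity by capping the tessellation factor.
        tessellation_factor=min(settings.tessellation_factor, max_tessellation),
    )


# Example: a weaker device with no ray tracing support and a low tessellation cap.
low_end = scale_down(HIGH_END, supports_ray_tracing=False, max_tessellation=4)
```

The game logic never touches these values, which is the point: the same design runs on both ends, only the presentation changes.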

drkohler said:

blablabla removed, particularly completely irrelevant "command processor special sauce" and other silly stuff.

Ray tracing doesn't use floating point operations? I thought integer ray tracing was a more or less failed attempt in the early 2000s so colour me surprised.

|

You have misconstrued my statements.

The single-precision floating-point numbers being passed around do NOT include the ray tracing capabilities of the part, because FLOPS are a function of clock rate multiplied by the number of functional CUDA/RDNA/GCN shader units multiplied by the number of instructions per clock. That figure excludes absolutely everything else, and that includes ray tracing capabilities.
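To make the arithmetic concrete, here is a small Python sketch of that calculation. The function name and the example figures are made up for illustration, and the default of 2 operations per clock assumes each shader unit retires one fused multiply-add per cycle (counted as two floating-point operations), which is the usual convention for these marketing numbers.

```python
def peak_tflops(shader_units: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision TFLOPS: units x clock x operations per clock.

    flops_per_clock=2 is an assumption (one fused multiply-add per unit per cycle).
    """
    # units * GHz gives giga-operations per second; divide by 1000 for tera.
    return shader_units * clock_ghz * flops_per_clock / 1000.0


# Hypothetical part: 2560 shader units at 2.0 GHz.
example = peak_tflops(2560, 2.0)  # 10.24
```

Note that nothing in the formula knows about memory bandwidth, caches, geometry throughput or dedicated ray tracing hardware.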

drkohler said:

Look, as many times as you falsely yell "Flops are irrelevant", you are still wrong.

The technical baseplate for the new console SoCs is identical. AMD has not gone the extra mile to invent different paths for the identical goals of both consoles. Both MS and Sony have likely added "stuff" to the baseplate, but at the end of the day, it is still the same baseplate both companies relied on when they started designing the new SoCs MANY YEARS AGO.

And for ray tracing, which seems to be your pet argument, do NOT expect to see anything spectacular. You can easily drive a $1200 NVidia 2080Ti into the ground using ray tracing, what do you think entire consoles priced around $450-500 are going to deliver on that war front?

|

You can have identical flops with identical chips and still have half the gaming performance.

Thus flops are certainly irrelevant, as they don't account for the capabilities of the entire chip.

Even overclocked, the GeForce GT 1030 DDR4 cannot beat the GDDR5 variant. They are the EXACT same chip with roughly the same flops.

https://www.gamersnexus.net/hwreviews/3330-gt-1030-ddr4-vs-gt-1030-gddr5-benchmark-worst-graphics-card-2018

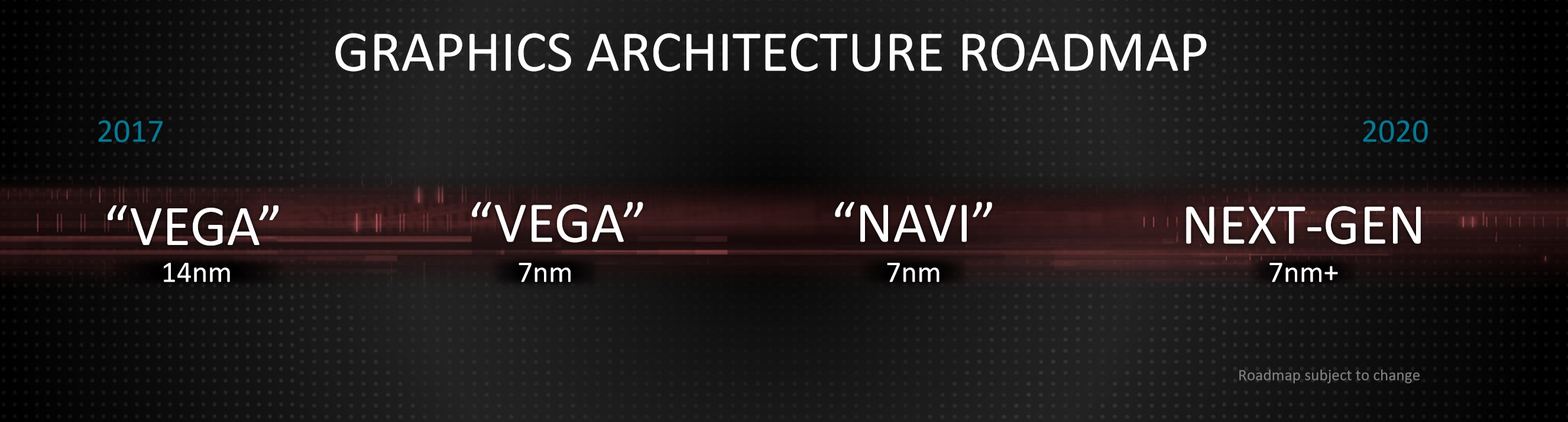
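One way to picture why the same chip with the same flops can land so far apart is a simple roofline-style estimate, where achievable throughput is capped by either peak compute or by memory bandwidth times the work done per byte fetched. This is a simplified model brought in for illustration, not something from the linked review, and the numbers below are hypothetical stand-ins rather than measured figures for either card.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, flops_per_byte: float) -> float:
    """Roofline-style bound: the lower of the compute ceiling and the memory ceiling."""
    memory_bound = bandwidth_gbs * flops_per_byte
    return min(peak_gflops, memory_bound)


# Two hypothetical cards with identical peak compute but different memory.
PEAK = 1000.0          # GFLOPS, assumed identical for both
FAST_MEMORY = 48.0     # GB/s, illustrative
SLOW_MEMORY = 16.0     # GB/s, illustrative

# A bandwidth-hungry workload doing 10 floating-point operations per byte read.
fast = attainable_gflops(PEAK, FAST_MEMORY, 10.0)  # 480.0
slow = attainable_gflops(PEAK, SLOW_MEMORY, 10.0)  # 160.0
```

In this toy case the card with slower memory reaches a third of the throughput despite identical paper flops. Only when a workload does enough math per byte to hit the compute ceiling do the two converge.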

Nvidia's ray tracing on the 2080 Ti is not the same as RDNA2's ray tracing coming out next year, which is the technology next-gen consoles are going to leverage, so it's best not to compare.

Plus, developers are still working out how to implement ray tracing more effectively. It is certainly a technology that is a big deal.

DonFerrari said:

CGI, I guess the problem is that the way Pema put it was that flops are totally irrelevant.

But if we are looking at basically the same architecture, with most things on them being the same, and one GPU is 10TF and the other 12TF, the 10TF one would hardly be the better one.

Now sure, in real-world applications, if one has better memory (be it speed, quantity, etc.) or a better CPU, that advantage may be reversed.

So basically yes, when Pema says it, what he wants to say is that the TFLOP figure isn't the be-all and end-all "simple number that shows it is better", not that it really doesn't matter at all.

|

Well, they are irrelevant. It's a theoretical number, not a real-world one; the relevant "flop number" would be one based on the real-world performance the chips can actually achieve.

And like the GeForce GT 1030 example above, you can have identical or more flops, but because of other compromises, you end up with significantly less performance.

DonFerrari said:

I read all the posts on this thread. And you can't claim Oberon is real; no rumor can be claimed real until official information is given.

Even for consoles that have been released, the real processing power was never confirmed, because measurements made by people outside the company aren't reliable. For the Switch and Wii U we never discovered the exact performance of their GPUs; we just had good guesses.

So please stop trying to pass off rumor as official information. And also, you can't claim 4 rumors that are different are all true.

|

For the Switch we know exactly what its capabilities are, because Nintendo is using off-the-shelf Tegra components. We also know its clock speeds and how many functional units it has thanks to homebrew efforts that cracked the console open.

The Wii U is still a big unknown because it was a semi-custom chip, though we do know it's an AMD-based VLIW GPU with an IBM PowerPC CPU.

And exactly, you can't claim 4 different rumors as all being true.

|