| Peh said:

Show me the evidence for the context I was talking about. |

Keep in mind the context was "There is no screen tearing in Nintendo consoles."

But let's see you try disputing Digital Foundry on Darksiders 2 for the Wii U.

http://www.eurogamer.net/articles/digitalfoundry-darksiders-2-on-wii-u-face-off

| Peh said: Yes, FreeSync is a hardware solution. The console as well as the TV will need it. I say TV, because I go by the majority of customers who actually attach a console to a TV. FreeSync is a solution invented by AMD as a counterpart to G-Sync from Nvidia. Which company, do you think, does the GPU in the Nintendo Switch come from? Do I have to write it out for you? Should I also add a *facepalm* like you did? |

Again, I have already explained that both the console and the display need to support it for it to work. Not sure why you keep bringing that up.

And really? You think that having an Nvidia chip excludes you from using FreeSync? News flash: it doesn't.

FreeSync is built on an open standard. FreeSync is royalty-free. FreeSync is free to use.

VESA (the Video Electronics Standards Association) adopted AMD's FreeSync approach under the name "Adaptive-Sync" and integrated it into DisplayPort 1.2a, 1.3, 1.4 and newer versions of the standard.

Nintendo is free to use and license it, and so is Nvidia. Nintendo is paying Nvidia for its "semi-custom" SoC. As long as the display supports variable refresh rates, a large part of the work is on the software side.
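To illustrate why a variable refresh rate gets rid of tearing, here is a rough Python sketch. It is a simplified model, not how any console, driver or display actually implements it: it assumes that a buffer swap landing exactly on a refresh boundary (standing in for the vertical blank) is tear-free and that any other swap with V-Sync off tears; the function names are made up for illustration, and the 60 Hz cap is an assumed panel limit. It also ignores what real panels do when the frame rate drops below their minimum refresh rate.

```python
from fractions import Fraction

# Hypothetical helpers for illustration only. Times are in milliseconds and
# use Fraction so that 1000/60 ms is represented exactly (no float rounding).

def fixed_refresh_tears(frame_ready_ms, refresh_hz=60):
    """Count buffer swaps that land mid-scanout on a fixed-refresh display with V-Sync off."""
    period = Fraction(1000, refresh_hz)  # exact length of one refresh
    # Simplification: a swap exactly on a refresh boundary replaces the whole
    # image; any other swap splits the current scanout into two frames (a tear).
    return sum(1 for t in frame_ready_ms if Fraction(t) % period != 0)

def adaptive_sync_refresh_starts(frame_ready_ms, max_hz=60):
    """Start a refresh when each frame is ready, but no sooner than max_hz allows."""
    shortest = Fraction(1000, max_hz)  # minimum time between refreshes
    starts = []
    for t in frame_ready_ms:
        earliest = starts[-1] + shortest if starts else Fraction(0)
        # The display waits for the GPU, so each refresh shows one whole frame.
        starts.append(max(Fraction(t), earliest))
    return starts

frames_50fps = [20, 40, 60, 80, 100]  # a game running at 50 fps
print(fixed_refresh_tears(frames_50fps))  # 4
print([float(s) for s in adaptive_sync_refresh_starts(frames_50fps)])  # [20.0, 40.0, 60.0, 80.0, 100.0]
```

On the fixed 60 Hz display, four of the five frames arrive mid-scanout and tear; with adaptive sync the display simply starts each refresh when the frame is ready, so every refresh shows one complete frame.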

| Peh said:

It has? How about using quotes for that debunked segment? |

Fine.

| Pemalite said:

|

https://developer.nvidia.com/postworks

| Peh said:

An effect that does not appear to be blurry? I am intrigued. Show me. |

Turn 3D off and on.

| Peh said:

1. The main point is at what factor do you notice aliasing and at what strength. |

Doesn't matter. Anti-aliasing is cheap. Use at least 2x.

| Peh said:

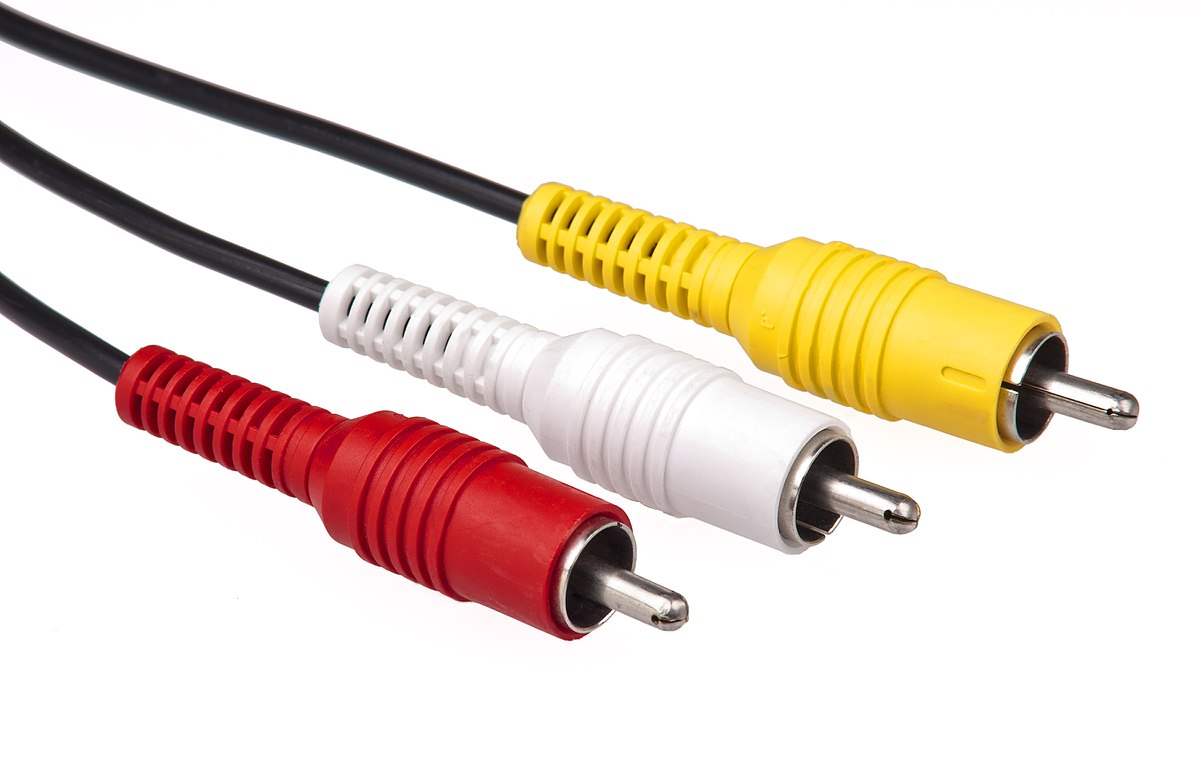

2. I didn't know that the Xbox and the N64 came out at the same time. What? They didn't? Colour me surprised. RCA maximum of 1080i? I know this connector as Cinch. And I have my fair share of doubt that it actually does 1080i. Care to show me the data sheet for that? I am unable to find it. |

I never said they did come out at the same time.

Component and composite RCA are not the same thing. Learn the difference.

https://en.wikipedia.org/wiki/Component_video

https://en.wikipedia.org/wiki/Composite_video

Here are the original Xbox and PS2 component RCA cables.

--::{PC Gaming Master Race}::--