fatslob-:O said:

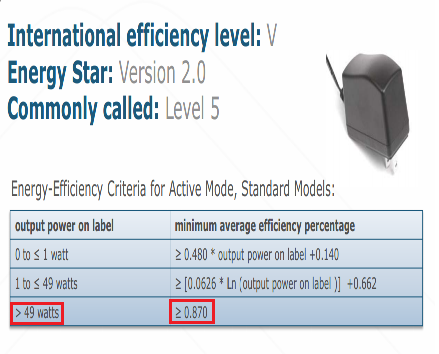

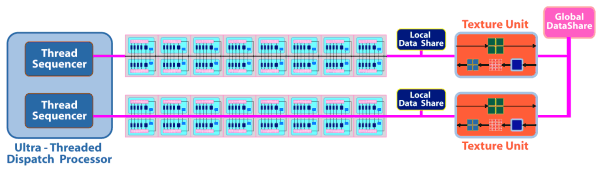

Just how many 4-8K textures are they going to use then? Once you get a lot of objects on screen the complexity increases. As for Shin'en's overstatement about memory bandwidth, there's only so much you can do with 32MB. The reason bandwidth was important in the first place is to feed the GPU; otherwise its functional units start to get underutilized. TMUs, shaders, ROPs, and everything else in the GPU are extremely dependent on it. The reason the X1 wasn't able to achieve 1080p for some multiplatform titles or exclusives has to do with main memory bandwidth being a bottleneck. (Aside from the lower number of ROPs, of course.) How else does the GPU get fed with a lot of other data? You cannot constantly rely on the eDRAM to feed the GPU, much like how the X1 relies on the eSRAM! It eventually has to access main memory, because that is where most of the data is likely to be resident. The purpose of caching is to SAVE BANDWIDTH by storing frequently accessed data. It was not meant to COMPLETELY FEED THE GPU. Now don't get me wrong! I'm not saying that the Wii U isn't capable of handling 4-8K textures, but it shouldn't be able to handle them on a regular basis, given that it likely lacks TMUs and everything else I stated before. Do not fret about this issue. There are other ways of solving it, like I said before. The megatexture technology introduced by John Carmack in RAGE will resolve a lot of the issues regarding the Wii U's lack of bandwidth and TMUs by streaming only the highest-resolution textures actually required for the assets in a scene. That conserves a lot of texture fillrate and bandwidth: the level of detail gets scaled back when objects are far away and scaled up for objects near the camera, which get the highest level of detail.
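To put rough numbers on the 32MB point and the level-of-detail idea above, here is a minimal sketch. The texel formats (uncompressed RGBA8 and BC1/DXT1) are assumptions, since the thread does not say what the game uses, and `mip_level_for_distance` is a hypothetical rule invented for illustration. It is not how RAGE actually selects its texture pages.

```python
import math

MIB = 1024 * 1024
EDRAM_BYTES = 32 * MIB           # the 32MB figure quoted in the post

# Assumed texel formats; the thread does not state which ones are used.
BYTES_PER_TEXEL_RGBA8 = 4        # uncompressed 8-bit RGBA
BYTES_PER_TEXEL_BC1 = 0.5        # BC1/DXT1 block compression, 4 bits per texel


def texture_bytes(size, bytes_per_texel):
    """Footprint of a single square size x size texture, top mip level only."""
    return size * size * bytes_per_texel


def mip_level_for_distance(distance, full_detail_distance, max_level):
    """Hypothetical LOD rule: drop one mip level each time the distance doubles."""
    if distance <= full_detail_distance:
        return 0
    level = int(math.log2(distance / full_detail_distance))
    return min(level, max_level)


def resident_size(base_size, level):
    """Edge length of the mip level that would be streamed in."""
    return base_size >> level


if __name__ == "__main__":
    for size in (4096, 8192):
        for name, bpt in (("RGBA8", BYTES_PER_TEXEL_RGBA8), ("BC1", BYTES_PER_TEXEL_BC1)):
            mib = texture_bytes(size, bpt) / MIB
            print(f"{size}x{size} {name}: {mib:.0f} MiB")

    # An 8K texture seen from 8x the full-detail distance only needs a 1K mip.
    level = mip_level_for_distance(8.0, 1.0, max_level=13)
    print(resident_size(8192, level))
```

Under these assumptions a single uncompressed 4K texture is already twice the size of the eDRAM, which is why streaming only the mip levels that are actually visible matters so much.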

The car/spaceship models will probably always be 4-8K (maybe 8K in small numbers and 4K in larger numbers), and other significant stuff will be in those resolutions too, while the rest will be 1080p as far as I can tell.

Bet with ash3336: he wins if Super Mario 3D World sells less than Mario Sunshine did during its first three years; I win if 3D World outsells Sunshine's first three years. Loser gets their sig controlled for 3 months (punishment might change).

Do you doubt the WiiU? Do you believe that it won't match the GC?

Then come and accept this bet

Nintendo eShop USA sales ranking 2/12/2013 : http://gamrconnect.vgchartz.com/thread.php?id=173615