fatslob-:O said:

@Bold Damn, your understanding of cache is extremely limited LOL. Must suck not to know anything on this subject. http://www.merriam-webster.com/dictionary/cache This post tells me you have a severe lack of understanding of memory hierarchies. The reason why 32MB of eDRAM is considered cache and not main memory has to do with the fact that it has a lower access time than the main memory, as evidenced by it being integrated on the GPU. The Intel Iris Pro 5200 treats its own 128MB eDRAM as an L4 cache because it's not a part of the main memory. It is instead used to buffer extra pieces of data that the L3 cache requested. You're the one who's supposed to owe me an apology for your atrocious behaviour.

This tells me you don't get the point.

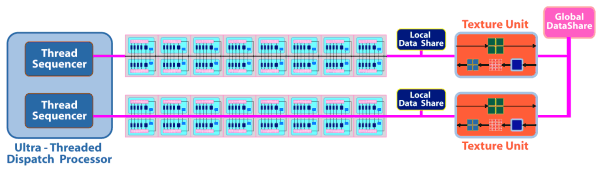

I am basically saying that since GPUs have internal caches of only a few kilobytes, shouldn't the 32 megabytes of eDRAM be considered something like RAM for the GPU?

Not saying it's real RAM, it's just an analogy, dude.

Seriously, your lack of understanding is awful.

And I have already proven to you that cache on a GPU is in the range of kilobytes, not megabytes.

Just look at how much you get for the texture units, SPUs and other stuff:

Only 8 kilobytes of texture cache

Only 64 kilobytes of global data share

About 8 to 16 kilobytes of local data share

Can you compare that to 32 megabytes?

No.
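To put actual numbers on that, here's a quick back-of-the-envelope sketch in Python. It just takes the cache sizes listed in this post (not official spec sheets) and assumes binary units (1 MB = 1024 KB), then works out how many times larger the 32 MB eDRAM pool is than each one. The names `EDRAM_KB`, `gpu_caches_kb` and `ratio_to_edram` are made up for illustration.

```python
# Back-of-the-envelope comparison of the on-chip GPU memory sizes quoted
# in this post against the 32 MB eDRAM pool.
# Assumption: binary units, i.e. 1 MB = 1024 KB.
EDRAM_KB = 32 * 1024  # 32 MB expressed in kilobytes

# Sizes as quoted in this post, in KB (hypothetical names, not from any spec).
gpu_caches_kb = {
    "texture cache": 8,
    "global data share": 64,
    "local data share (low end)": 8,
    "local data share (high end)": 16,
}


def ratio_to_edram(size_kb: int) -> int:
    """Return how many caches of this size would fit in the eDRAM."""
    return EDRAM_KB // size_kb


for name, size_kb in gpu_caches_kb.items():
    print(f"{name}: {size_kb} KB -> eDRAM is {ratio_to_edram(size_kb)}x larger")
```

With these figures the eDRAM comes out 512 to 4096 times larger than any of those caches, which is the size gap the analogy is about.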

Sorry dude, no matter what you say, everyone here has seen your previous statements.

And again, you forget this:

http://hdwarriors.com/why-the-wii-u-is-probably-more-capable-than-you-think-it-is/

"

The easiest way that I can explain this is that when you take each unit of time that the Wii U eDRAM can do work with separate tasks as compared to the 1 Gigabyte of slower RAM, the amount of actual Megabytes of RAM that exist during the same time frame is superior with the eDRAM, regardless of the fact that the size and number applied makes the 1 Gigabyte of DDR3 RAM seem larger. These are units of both time and space. Fast eDRAM that can be used at a speed more useful to the CPU and GPU have certain advantages, that when exploited, give the console great gains in performance.

The eDRAM of the Wii U is embedded right onto the chip logic, which for most intent and purposes negates the classic In/Out bottleneck that developers have faced in the past as well. Reading and writing directly in regard to all of the chips on the Multi Chip Module as instructed.

"

Read, dude, read.

Your lack of understanding is awful.