Mar1217 said:

Good. Some devs finally understood that graphical fidelity is running into increasingly apparent diminishing returns. This means we will finally get an upgraded gameplay experience with smoother framerates.

Wrong.

| What do you think | Votes | % |
|---|---|---|
| Yes | 2 | 6.45% |
| That's too much | 3 | 9.68% |
| I don't mind the games running at 30 fps | 0 | 0% |
| Make it 120 fps with 4K with PS4 graphics | 3 | 9.68% |
| Make it 60 fps 4K with be... | 23 | 74.19% |
| Total | 31 | |


Mr Puggsly said:

Don partly explained why: next gen is also about a leap forward in visuals. Forza 7 is 4K/60 fps, which is 8th gen graphics at about 8 million pixels. 8K, however, is more like 33 million pixels. X1X was not a 2x increase in GPU power, it's more like 4.5x. PS4 Pro more than doubled the GPU power of PS4 and still doesn't hit 4K in GT Sport. Even if PS5 had double the GPU power of X1X, we're talking about quadrupling the pixel count of 4K while improving graphics. In comparison, achieving 120 fps actually requires less power. Also, Forza 7 has lower GPU requirements than GT Sport: it uses less demanding effects because it was built for a console with less GPU power. Lastly, 8K isn't that important or a great use of limited console resources. Making the leap to 120 fps and improving visuals further will appeal to more people than 8K. I think many people using 9th gen consoles will still have a 1080p TV, which is fine because supersampled 1440p-4K content actually looks really nice on a 1080p screen.
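A back-of-the-envelope way to check the pixel arithmetic above. This sketch assumes GPU cost scales roughly linearly with pixels drawn per second, ignoring geometry, effects, CPU limits and memory bandwidth, so treat it as a simplification. The resolution table and function names are illustrative, not taken from any engine.

```python
# Resolutions mentioned in the thread (width, height).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_second(name: str, fps: int) -> int:
    """Pixels that must be produced each second at a given frame rate."""
    return pixel_count(name) * fps


def frame_budget_ms(fps: int) -> float:
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    baseline = pixels_per_second("4K", 60)
    for name, fps in [("4K", 60), ("4K", 120), ("8K", 60), ("1440p", 120)]:
        rate = pixels_per_second(name, fps)
        print(f"{name}@{fps}: {pixel_count(name) / 1e6:.1f} MP per frame, "
              f"{rate / baseline:.2f}x the 4K60 pixel rate, "
              f"{frame_budget_ms(fps):.2f} ms per frame")
```

Under that assumption, 4K at 120 fps needs twice the pixel rate of 4K60, while 8K at 60 fps needs four times as much, which is why 120 fps is the cheaper of the two leaps.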

For the majority of console customers next gen, both 8K and 120 fps will be useless. Having a stable 4K at 30 or 60 fps (depending on the game) and using everything else to improve other aspects of the graphics will be a much better use of power; in some cases it may even be worth dropping below 4K to do that.

duduspace11 "Well, since we are estimating costs, Pokemon Red/Blue did cost Nintendo about $50m to make back in 1996"

http://gamrconnect.vgchartz.com/post.php?id=8808363

Mr Puggsly: "Hehe, I said good profit. You said big profit. Frankly, not losing money is what I meant by good. Don't get hung up on semantics"

http://gamrconnect.vgchartz.com/post.php?id=9008994

Azzanation: "PS5 wouldn't sold out at launch without scalpers."

SvennoJ said:

Human vision's limit is about 200 pixels per degree of field of view, light sensitivity from 0.01 lumens to 3,500 lumens (before it gets uncomfortably bright), which is about 38,000 nits, up to 1000 fps, and a much wider color gamut than can be displayed today. Current displays are approaching full DCI-P3 coverage and max out at 2000 nits. (GT Sport is already made for Rec. 2020 and up to 10,000 nits.)

I mean, those are all theoretical, on-paper values. But look at the law of diminishing returns. How much more effective is 4K vs 12K vs 30K? Or how much of a difference does one notice between 60 fps vs 120 vs 240 vs 480? In practical terms, is there an advantage to going in that direction, or are we going just for the sake of going?

Just a guy who doesn't want to be bored. Also

Eagle367 said:

I mean, those are all theoretical, on-paper values. But look at the law of diminishing returns. How much more effective is 4K vs 12K vs 30K? Or how much of a difference does one notice between 60 fps vs 120 vs 240 vs 480? In practical terms, is there an advantage to going in that direction, or are we going just for the sake of going?

How long does it take you to tell whether you are looking at a game or a movie? And how easily can you tell that the movie isn't real life? That is how far we still are from hitting the limit.


Mr Puggsly said:

Don partly explained why: next gen is also about a leap forward in visuals. Forza 7 is 4K/60 fps, which is 8th gen graphics at about 8 million pixels. 8K, however, is more like 33 million pixels. X1X was not a 2x increase in GPU power, it's more like 4.5x. PS4 Pro more than doubled the GPU power of PS4 and still doesn't hit 4K in GT Sport. Even if PS5 had double the GPU power of X1X, we're talking about quadrupling the pixel count of 4K while improving graphics. In comparison, achieving 120 fps actually requires less power. Also, Forza 7 has lower GPU requirements than GT Sport: it uses less demanding effects because it was built for a console with less GPU power. Lastly, 8K isn't that important or a great use of limited console resources. Making the leap to 120 fps and improving visuals further will appeal to more people than 8K. I think many people using 9th gen consoles will still have a 1080p TV, which is fine because supersampled 1440p-4K content actually looks really nice on a 1080p screen.

Yeah, agreed. 8K is good for marketing, but in reality it's bad for performance and overall. I'd rather have 1440p, or 4K via checkerboarding or another reconstruction method like DLSS. I also agree that if we talk about increasing fidelity, then yes, performance and frame rates will drop. I just hope developers give gamers an option to choose between graphical fidelity, resolution, or frame rate on the next-gen consoles (like on PS4 Pro and Xbox One X).
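A rough sketch of why checkerboarding is attractive, assuming (as a simplification) that checkerboard rendering shades half of the output pixels each frame and reconstructs the rest from the previous frame; the reconstruction cost is ignored. DLSS-style upscaling works differently (it renders at a lower internal resolution), so it is not modelled here. The function name is hypothetical.

```python
def shaded_pixels(width: int, height: int, checkerboard: bool = False) -> int:
    """Approximate number of pixels shaded per frame.

    Assumption: checkerboard rendering shades every other pixel in an
    alternating pattern, i.e. half of the output resolution per frame.
    Reconstruction/resolve cost is ignored.
    """
    total = width * height
    return total // 2 if checkerboard else total


if __name__ == "__main__":
    native_4k = shaded_pixels(3840, 2160)
    options = {
        "native 4K": native_4k,
        "4K checkerboard": shaded_pixels(3840, 2160, checkerboard=True),
        "native 1440p": shaded_pixels(2560, 1440),
    }
    for label, pixels in options.items():
        print(f"{label}: {pixels:,} shaded pixels "
              f"({pixels / native_4k:.0%} of native 4K)")
```

On this model, 4K checkerboard shades only slightly more pixels per frame than native 1440p while outputting a 4K image.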

HollyGamer said:

It depends on what kind of customization they do with Zen 2; there is a rumor that it's not full Zen 2, but instead has a cut-down cache and downclocked cores. I'm also going by the CPU performance comparison between the Xbox Series X (Scarlett) CPU and the Xbox One X mentioned by Spencer and by official Xbox channels. Phil said the CPU jump from One X to Series X is around 4 times. I guess PS4 Pro to PS5 will be an even bigger jump, because the Xbox One X CPU is faster than the Jaguar in the Pro.

We already have Zen 2 with cutdown caches and various chips with differing clockrates.

The Ryzen 5 3500 has half the cache of the 3500X, for example, and the mobile Ryzen 4000 series has significantly less cache again.

Jaguar was AMD's absolute WORST CPU at a time when AMD's entire CPU lineup was garbage.

I would need a link to the Spencer quote... Because if he is using the Xbox One X as the comparison point, that likely includes all the improved CPU capability as Microsoft shifted a heap of processing to the command processor on the GPU portion of the SoC.

Not to mention there are some instructions, things like AVX2, where Ryzen will simply be multiples better than Jaguar.

DonFerrari said:

Ray tracing on PS5 is done through a dedicated chip, so it shouldn't impact the rest much.

Not a dedicated chip. It only has the one chip, the main SoC.

The Ray Tracing is done on dedicated Ray Tracing cores on the main chip; it's part of the GPU... Which is why FLOPS are a joke, as FLOPS don't account for the Ray Tracing capabilities.

SvennoJ said:

There is no such thing as a ray tracing chip. There are many ways to do ray tracing, some faster, some with better quality. Some dedicated hardware can help but it's not magic. RTX cards still struggle with ray tracing. It will impact the rest, dedicated chip or not.

Not entirely accurate.

Historically, what the industry has done is make a dedicated DSP/ASIC/FPGA as a separate chip (a.k.a. Ray Processing Unit) that handled Ray Tracing duties; granted, this was for more professional markets... But the point remains.

Dedicated Ray Tracing chips have existed even as far back as the late 90's/early 2000's.

https://en.wikipedia.org/wiki/Ray-tracing_hardware

Eagle367 said:

I mean, those are all theoretical, on-paper values. But look at the law of diminishing returns. How much more effective is 4K vs 12K vs 30K? Or how much of a difference does one notice between 60 fps vs 120 vs 240 vs 480? In practical terms, is there an advantage to going in that direction, or are we going just for the sake of going?

We are nowhere near the limit of what we can perceive via framerates and resolutions.

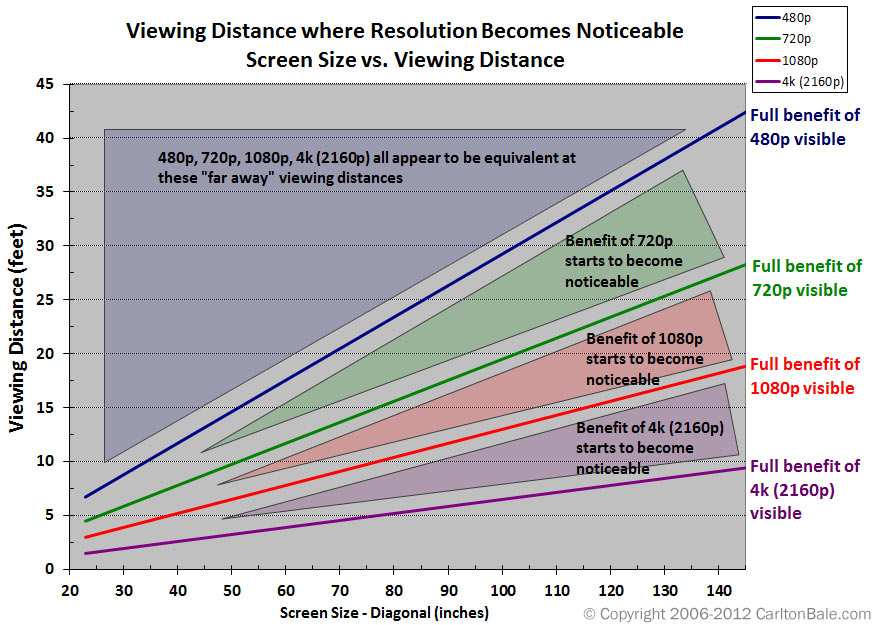

Basically, the closer and larger your display, the higher the resolution you need.

Display sizes seem to have stagnated around the 65-75" mark in most market segments with even budget offerings coming up at 50-55" these days.

I think in general... For the 9th gen we should actually try to achieve 4K@60fps before we start worrying about more; the Xbox One X and PlayStation 4 Pro weren't even able to guarantee those resolutions and framerates across every title. Leave the higher-end stuff to the PC.

--::{PC Gaming Master Race}::--

Pemalite said:

We already have Zen 2 with cutdown caches and various chips with differing clockrates.

"...Microsoft stated that the console CPU will be four times as powerful as Xbox One X;..."

https://en.wikipedia.org/wiki/Xbox_Series_X#cite_note-gamespot_series_x-8

https://www.windowscentral.com/xbox-series-x-specs

https://www.gamespot.com/articles/goodbye-project-scarlett-hello-xbox-series-x-exclu/1100-6472190/ (archived: https://web.archive.org/web/20191213021815/https://www.gamespot.com/articles/goodbye-project-scarlett-hello-xbox-series-x-exclu/1100-6472190/)

Eagle367 said:

I mean, those are all theoretical, on-paper values. But look at the law of diminishing returns. How much more effective is 4K vs 12K vs 30K? Or how much of a difference does one notice between 60 fps vs 120 vs 240 vs 480? In practical terms, is there an advantage to going in that direction, or are we going just for the sake of going?

It all depends on the size of the screen you want to play on, or how much of your FOV is filled up by it. VR is the largest, as it tries to fill your entire FOV, which is up to 150 degrees per eye. Sitting 8 ft away from a 150" screen (projector range for now, but screens keep getting bigger as well), you have a FOV of about 76 degrees. At the moment, 37 degrees is more common: 8 ft away from a 65" TV.

Recommended sitting distance for 20/20 vision is based on 60 pixels per degree. 8 ft from a 65" TV puts you at needing at least 2,220 pixels horizontally, thus more than 1080p but less than 4K. However, you can easily see improvements up to double that, 120 pixels per degree, since rows of square pixels are more easily detected than analog pictures. So you might even get some benefit from 8K at that distance and size, and/or still need good AA.
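The viewing-angle arithmetic can be sketched as below, assuming a flat 16:9 screen viewed head-on from its centre and a uniform pixels-per-degree target (an approximation; on a flat screen the pixels per degree actually vary from centre to edge). Worked this way, the 76° and 37° figures match the screen's diagonal angle; the horizontal angle is a bit smaller (roughly 69° and 33°), which puts the 60 ppd requirement for the 65" case nearer 1,970 horizontal pixels, still between 1080p and 4K. Function names are hypothetical.

```python
import math


def screen_size_in(diagonal_in: float, aspect_w: float = 16,
                   aspect_h: float = 9) -> tuple[float, float]:
    """Width and height (inches) of a flat screen, derived from its diagonal."""
    diag_units = math.hypot(aspect_w, aspect_h)
    return diagonal_in * aspect_w / diag_units, diagonal_in * aspect_h / diag_units


def subtended_angle_deg(span_in: float, distance_in: float) -> float:
    """Angle (degrees) a flat span covers when viewed head-on from its centre."""
    return math.degrees(2 * math.atan((span_in / 2) / distance_in))


def pixels_needed(angle_deg: float, pixels_per_degree: float = 60) -> int:
    """Pixels across an angle for a target angular resolution (uniform ppd)."""
    return math.ceil(angle_deg * pixels_per_degree)


if __name__ == "__main__":
    distance = 8 * 12  # 8 ft in inches
    for diagonal in (65, 150):
        width, _ = screen_size_in(diagonal)
        diag_angle = subtended_angle_deg(diagonal, distance)
        horiz_angle = subtended_angle_deg(width, distance)
        print(f'{diagonal}" at 8 ft: {diag_angle:.1f} deg diagonal, '
              f"{horiz_angle:.1f} deg horizontal, "
              f"{pixels_needed(horiz_angle, 60)} px at 60 ppd, "
              f"{pixels_needed(horiz_angle, 120)} px at 120 ppd")
    # VR: ~150 degrees per eye, at 20/20 (60 ppd) and at the ~200 ppd limit quoted earlier
    print(f"VR 150 deg: {pixels_needed(150, 60)} px at 60 ppd, "
          f"{pixels_needed(150, 200)} px at 200 ppd")
```

The VR line shows where the "30K" figure in the question comes from: 150 degrees at the quoted 200 ppd limit is 30,000 pixels across per eye.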

For fps, other things come into play. The human eye tracks moving objects to get a clear picture; on a screen, however, objects move in discrete steps, which makes them hard to follow. The solution to this jarring effect so far has been to apply motion blur. It doesn't make things sharper, and it's not how the human eye works, but it's easier to watch a blurry streak cross the screen than a stuttering object. To make it like real life, moving objects need to make smaller steps more often, ideally only 1 pixel per step. Depending on how fast the object moves and the resolution of the screen, the required fps for a moving object can go way past 1000 fps.
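The frame-step idea is simple arithmetic: the jump per frame is the object's on-screen speed divided by the frame rate. The sketch below uses a hypothetical object crossing the full width of a 4K screen in two seconds; that speed is an illustrative assumption, not a measurement, and the function names are hypothetical.

```python
def step_px(speed_px_per_s: float, fps: float) -> float:
    """Distance (pixels) a moving object jumps between consecutive frames."""
    return speed_px_per_s / fps


def fps_for_step(speed_px_per_s: float, max_step_px: float = 1.0) -> float:
    """Frame rate needed so the object never jumps more than max_step_px per frame."""
    return speed_px_per_s / max_step_px


if __name__ == "__main__":
    # Hypothetical: crossing a 3840 px wide screen in 2 seconds.
    speed = 3840 / 2  # 1920 px/s
    for fps in (30, 60, 120, 240):
        print(f"{fps} fps: {step_px(speed, fps):.0f} px per step")
    print(f"1 px steps need {fps_for_step(speed):.0f} fps")
```

A faster object (say, the same crossing in half a second) pushes the 1-pixel-step requirement to 7,680 fps, which is how the figure can go well past 1000 fps.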

Of course there are also limits to what the human eye can track. When in a moving car, the road further away is perfectly sharp, while closer in you reach an area with flashes of sharp 'texture' (where the eye temporarily tracks or scans the road), and then an area where it's all motion blur. Per-object frame rates are something for the future, to replace per-object motion blur so the eye can work naturally. (Actually it's nothing new: the mouse pointer animating independently from a 30 fps video playing underneath is common practice, as are distant animations running at reduced speeds.) Anyway, the bigger the screen, the worse the effects of lower frame rates, or in other words, the higher the fps you need to present a stable moving picture. For cinema there have always been rules not to exceed certain panning speeds, so that the stuttering presentation still makes some sense.

So yes, there are diminishing returns when it comes to resolution and fps. Or, put the other way around, achieving the last 10% of 'realism' costs more than the first 90%.

Pemalite said:

Not a dedicated chip. It only has the one chip, the main SoC.

Not entirely accurate.

What I was hinting at is that there are many ways to do ray tracing, and dedicated hardware can either help or hinder innovation. Or rather, there is no simple switch to add ray tracing to a game by turning the chip on. Plenty of other things need to be done (which will slow down the rest) to make the best use of the ray tracing cores. But it will help. Software-only ray tracing would severely restrict the resolution to make it feasible (or need a lot of shortcuts, making it far less impressive).

Anyway as long as I can still easily tell the difference between my window and the tv, not there yet :)

HollyGamer said:

"...Microsoft stated that the console CPU will be four times as powerful as Xbox One X;..."

https://en.wikipedia.org/wiki/Xbox_Series_X#cite_note-gamespot_series_x-8

https://www.windowscentral.com/xbox-series-x-specs

https://www.gamespot.com/articles/goodbye-project-scarlett-hello-xbox-series-x-exclu/1100-6472190/ (archived: https://web.archive.org/web/20191213021815/https://www.gamespot.com/articles/goodbye-project-scarlett-hello-xbox-series-x-exclu/1100-6472190/)

Not that I didn't believe you, but cheers.

Watched the video and it is indeed 4x over the Xbox One X, but they didn't go into any specifics.

But 4x the performance of the 2.3 GHz 8-core Jaguar CPU is a lowball in my opinion... And it doesn't explain what kind of workload. But if they are accounting for the command processor that offloads CPU duties, then it would seem more plausible.

For example, Zen+ is 137% faster than Kabini per core, per clock cycle in integer-heavy workloads, and Zen 2 increases that gap considerably... Then add higher clockrates and thread counts on top of that, and things get interesting really quickly.
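To see why 4x can look like a lowball, the factors can be multiplied out. This is a crude model that treats per-clock performance, clock speed, core count and SMT as independent multipliers. The 2.37x per-clock figure is the Zen+ vs Kabini integer number from the post above and 2.3 GHz is the Xbox One X Jaguar clock; the 3.5 GHz clock and 25% SMT uplift are hypothetical placeholders, not announced specs.

```python
def relative_cpu_throughput(ipc_ratio: float, new_clock_ghz: float,
                            old_clock_ghz: float, core_ratio: float = 1.0,
                            smt_gain: float = 1.0) -> float:
    """Crude throughput ratio = per-clock gain x clock ratio x core ratio x SMT uplift.

    Treats every factor as independent, which real workloads (memory-bound
    code, cache misses, power limits) will not honour.
    """
    return ipc_ratio * (new_clock_ghz / old_clock_ghz) * core_ratio * smt_gain


if __name__ == "__main__":
    IPC_ZEN_PLUS_VS_KABINI = 2.37  # "137% faster per core, per clock" (integer workloads)
    JAGUAR_X1X_GHZ = 2.3           # Xbox One X Jaguar clock
    HYPOTHETICAL_CLOCK_GHZ = 3.5   # assumption for illustration only
    HYPOTHETICAL_SMT_GAIN = 1.25   # assumption for illustration only

    same_clock = relative_cpu_throughput(
        IPC_ZEN_PLUS_VS_KABINI, JAGUAR_X1X_GHZ, JAGUAR_X1X_GHZ)
    faster = relative_cpu_throughput(
        IPC_ZEN_PLUS_VS_KABINI, HYPOTHETICAL_CLOCK_GHZ, JAGUAR_X1X_GHZ,
        smt_gain=HYPOTHETICAL_SMT_GAIN)
    print(f"Same clock, 8 cores each: {same_clock:.2f}x")
    print(f"Hypothetical clock + SMT: {faster:.2f}x")
```

Even using Zen+ rather than Zen 2 per-clock numbers, those placeholder assumptions already land above 4x, which is the point being made.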

SvennoJ said:

What I was hinting at is that there are many ways to do ray tracing, and dedicated hardware can either help or hinder innovation. Or rather, there is no simple switch to add ray tracing to a game by turning the chip on. Plenty of other things need to be done (which will slow down the rest) to make the best use of the ray tracing cores. But it will help. Software-only ray tracing would severely restrict the resolution to make it feasible (or need a lot of shortcuts, making it far less impressive).

Bandwidth and cache contention are very real issues in GPUs, so you are right that engaging things like Ray Tracing can introduce new bottlenecks into a GPU design that will bring down performance in other areas.

The dedicated Ray Tracing cores simply offload the processing that would otherwise have been done on your typical shader cores, which can thus keep doing their own rasterization duties, so the performance hit is less significant.
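A toy frame-time model illustrates both points in this exchange: offloading ray tracing to dedicated units lets it overlap with rasterization, while shared bandwidth and cache contention still add a cost. All millisecond figures are hypothetical, and real RT hardware (which accelerates work such as BVH traversal and ray/triangle intersection tests) still leans on the shader cores for parts of the job, so perfect overlap is an idealisation.

```python
def frame_ms_rt_on_shaders(raster_ms: float, rt_ms: float) -> float:
    """Ray tracing on the general shader cores: both compete for the same units, so the work adds up."""
    return raster_ms + rt_ms


def frame_ms_rt_on_rt_cores(raster_ms: float, rt_ms: float,
                            contention_ms: float) -> float:
    """Ray tracing on dedicated units running alongside rasterization.

    Idealised overlap: the frame takes as long as the slower workload,
    plus a penalty for shared bandwidth/cache contention.
    """
    return max(raster_ms, rt_ms) + contention_ms


if __name__ == "__main__":
    raster = 12.0       # hypothetical raster-only frame time (ms)
    rt_shaders = 10.0   # hypothetical RT cost when done on shader cores (ms)
    rt_dedicated = 6.0  # hypothetical RT cost on dedicated units (ms)
    contention = 2.0    # hypothetical contention penalty (ms)
    print(f"No RT:          {raster:.1f} ms")
    print(f"RT on shaders:  {frame_ms_rt_on_shaders(raster, rt_shaders):.1f} ms")
    print(f"RT on RT cores: {frame_ms_rt_on_rt_cores(raster, rt_dedicated, contention):.1f} ms")
```

With these made-up numbers the dedicated units turn a large hit into a smaller one, but the contention term means ray tracing is still not free.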

In saying that, like all things rendering, there is a balance to be found. It will be interesting to see how Ray Tracing is leveraged next-gen, considering it's one of the long sought-after crown jewels of game rendering, but I think it's in the 10th gen console era that the technology will come into its own.


SvennoJ said:

It all depends on the size of the screen you want to play on, or how much of your FOV is filled up by it. VR is the largest, as it tries to fill your entire FOV, which is up to 150 degrees per eye. Sitting 8 ft away from a 150" screen (projector range for now, but screens keep getting bigger as well), you have a FOV of about 76 degrees. At the moment, 37 degrees is more common: 8 ft away from a 65" TV.

What I was hinting at is that there are many ways to do ray tracing, and dedicated hardware can either help or hinder innovation. Or rather, there is no simple switch to add ray tracing to a game by turning the chip on. Plenty of other things need to be done (which will slow down the rest) to make the best use of the ray tracing cores. But it will help. Software-only ray tracing would severely restrict the resolution to make it feasible (or need a lot of shortcuts, making it far less impressive).

Interesting read. I guess I'm just a minimalist and don't care that much about the last 10%. I mean, I prefer the Switch over all other consoles for a reason.
