OK, time for some hardware news. And because it's Friday, I'll end with a joke:

Suck it up, Valve's going to make us wait years for Steam Deck 2

https://www.pcgamer.com/suck-it-up-valves-going-to-make-us-wait-years-for-steam-deck-2/

The Steam Deck has been a significant hit. So, a sequel seems something of a certainty. But any Steam Deck 2 with a major performance upgrade remains at least a "few years" away.

So says Steam Deck designer Lawrence Yang. Speaking to ye olde Rock Paper Shotgun, what Yang said specifically was, "a true next-gen Deck with a significant bump in horsepower wouldn’t be for a few years."

Cadence Delivers Technical Details on GDDR7: 36 Gbps with PAM3 Encoding

https://www.anandtech.com/show/18759/cadence-derlivers-tech-details-on-gddr7-36gbps-pam3-encoding

When Samsung teased the ongoing development of GDDR7 memory last October, the company did not disclose any technical details of the upcoming specification. But Cadence recently introduced the industry's first verification solution for GDDR7 memory, and in the process has revealed a fair amount of additional detail about the technology. As it turns out, GDDR7 memory will use PAM3 as well as NRZ signaling and will support a number of other features, with a goal of hitting data rates as high as 36 Gbps per pin.

>> That's what Nvidia's 5000-series and AMD's 8000-series GPUs may use.

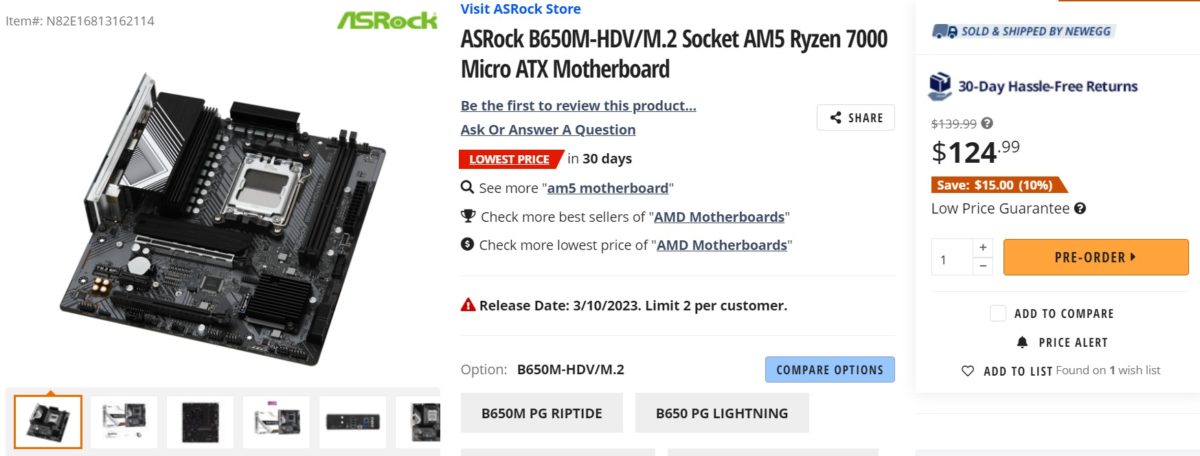
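For anyone wondering what PAM3 buys over NRZ: NRZ sends one bit per symbol using two voltage levels, while PAM3 uses three levels, so two PAM3 symbols give 3 × 3 = 9 combinations, enough to carry 3 bits (8 values). Below is a minimal Python sketch assuming that 3-bits-per-2-symbols packing; the symbol mapping is an arbitrary illustrative choice, not the actual JEDEC GDDR7 code table, and the 384-bit bus width is a hypothetical card, not anything announced.

```python
"""Illustrative PAM3 arithmetic (hypothetical sketch, not the JEDEC GDDR7 encoding tables)."""
from itertools import product

# PAM3 uses three voltage levels per symbol; here they are simply labeled -1, 0, +1.
LEVELS = (-1, 0, 1)

# Two PAM3 symbols give 9 combinations; we use 8 of them to carry 3 bits.
# This mapping is an arbitrary illustrative choice; real GDDR7 code tables differ.
SYMBOL_PAIRS = list(product(LEVELS, repeat=2))[:8]
ENCODE = {value: pair for value, pair in enumerate(SYMBOL_PAIRS)}
DECODE = {pair: value for value, pair in ENCODE.items()}


def encode_bits(bits: str) -> list[tuple[int, int]]:
    """Encode a bit string (length a multiple of 3) as pairs of PAM3 symbols."""
    if len(bits) % 3:
        raise ValueError("bit string length must be a multiple of 3")
    return [ENCODE[int(bits[i:i + 3], 2)] for i in range(0, len(bits), 3)]


def decode_symbols(pairs: list[tuple[int, int]]) -> str:
    """Invert encode_bits, turning symbol pairs back into a bit string."""
    return "".join(format(DECODE[pair], "03b") for pair in pairs)


def card_bandwidth_gb_s(gbps_per_pin: float, bus_width_bits: int) -> float:
    """Aggregate bandwidth in GB/s: per-pin rate times pin count, divided by 8 bits per byte."""
    return gbps_per_pin * bus_width_bits / 8


if __name__ == "__main__":
    data = "101001110"
    symbols = encode_bits(data)
    print(symbols)
    assert decode_symbols(symbols) == data
    # 3 bits per 2 symbols = 1.5 bits per symbol, versus 1 bit per symbol for NRZ.
    print(3 / 2)
    # Hypothetical 384-bit bus at the 36 Gbps per-pin target mentioned in the article.
    print(card_bandwidth_gb_s(36, 384))  # prints 1728.0
```

So, at 36 Gbps per pin, a hypothetical 384-bit card would land around 1.7 TB/s of memory bandwidth.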

First AMD AM5 motherboard is now available below $125

https://videocardz.com/newz/first-amd-am5-motherboard-is-now-available-below-125

Cyberpunk 2077 To Showcase Truly Next-Gen RTX Path Tracing as part of RT: Overdrive Mode in GDC Presentation

https://wccftech.com/cyberpunk-2077-implement-truly-next-gen-rtx-path-tracing-utilizing-nvidia-rt-overdrive-tech/

In a session listed by NVIDIA, the company will be presenting the first real-time RTX Path Tracing demo within Cyberpunk 2077. The session, titled "'Cyberpunk 2077' RT: Overdrive – Bringing Path Tracing into the Night City (Presented by NVIDIA)", will feature NVIDIA's Senior Developer & Technology Engineer, Pawel Kozlowski, along with CD Projekt Red's Global Art Director, Jakub Knapik. The session will take place on the 22nd of March (...)

Backblaze Reveals 2022 SSD Life Expectancy Statistics: Temperatures Are Potential Factor

https://wccftech.com/backblaze-reveals-2022-ssd-life-expectancy-statistics-temperatures-are-potential-factor/

Backblaze has released its latest drive stats report for 2022, focusing solely on the SSDs the company uses as boot drives in the storage servers of its cloud storage systems.
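Backblaze's drive stats reports express reliability as an annualized failure rate (AFR): failures divided by drive-years of operation (drive days / 365), times 100. A minimal Python sketch of that calculation follows; the fleet numbers are made-up placeholders, not figures from the report.

```python
"""Annualized failure rate (AFR) as used in Backblaze-style drive stats.

Sketch only: the drive counts below are hypothetical, not Backblaze data.
"""


def annualized_failure_rate(drive_days: int, failures: int) -> float:
    """Return AFR as a percentage: failures per drive-year of operation, times 100."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


if __name__ == "__main__":
    # Hypothetical fleet: 1,000 SSDs running a full year with 10 failures.
    print(round(annualized_failure_rate(drive_days=1_000 * 365, failures=10), 2))  # prints 1.0
```

Using drive days rather than a raw drive count lets drives that joined or left the fleet mid-year count only for the time they actually ran.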

And now, the joke:

AMD Says It Is Possible To Develop An NVIDIA RTX 4090 Competitor With RDNA 3 GPUs But They Decided Not To Due To Increased Cost & Power

https://wccftech.com/amd-says-it-is-possible-to-develop-an-nvidia-rtx-4090-competitor-with-rdna-3-gpus-but-they-decided-not-to-due-to-increased-cost-power/

During an interview with ITMedia, AMD EVP Rick Bergman and AMD SVP David Wang sat down to discuss their goals for the RDNA 3 and CDNA 3 architectures. The most interesting question comes right at the start: why AMD didn't release an RDNA 3 GPU in its Radeon RX 7000 lineup to compete in the ultra-high-end enthusiast segment against cards such as NVIDIA's RTX 4090.

Please excuse my bad English.

Currently gaming on a PC with an i5-4670K at stock clocks (for now), 16 GB of RAM at 1600 MHz and a GTX 1070

Steam / Live / NNID : jonxiquet Add me if you want, but I'm a single player gamer.