richardhutnik said:

And checking the prices. Say all that is true. So, you will end up getting people on here talking about THE POWA! And then average folks look at the $400-$500 range and go, "ARE YOU FREAKING NUTS!" Do people seriously expect to get sufficient numbers of sales at the $400-$500 range to be able to turn the current sagging generation software sales around?

The 'average' folks are what's killing gaming in general: dumbed-down mainstream games with a focus on QTEs and cinematics over gameplay, short SP campaigns, truckloads of DLC/Season Passes, rehashed sequels, health regeneration, games that are way too easy, etc. All of this started once consoles went mainstream. If these so-called 'average' mainstream gamers stopped buying consoles because they are too expensive for them, only the hardcore gamers would remain. Ironically, console gaming would be better off anyway.

These 'average' consumers have no problem buying $300 tablets and iPhones on a 2-year contract but then complain about $400-450 prices for next gen consoles. If they don't want next gen consoles, let them leave so developers can focus more effort on fewer high-quality games, as was the case in the past. Steam does just fine with a much smaller # of PC gamers (50 million users), which means you don't need a base of 240 million consoles to be profitable. If companies are spending $130+ million on developing a game and then $150 million on marketing it, they are doing it wrong. When small studios like Crytek and CD Projekt Red were able to make stunning games on small budgets (Crysis 1, Witcher 2), there is no excuse why great games cannot be made for a fraction of the COD Black Ops 2 budget. The indie PC developers are thriving, which lends more evidence that you can make good games for 1/10th the cost. Trine 2 - awesome game made by a small studio.

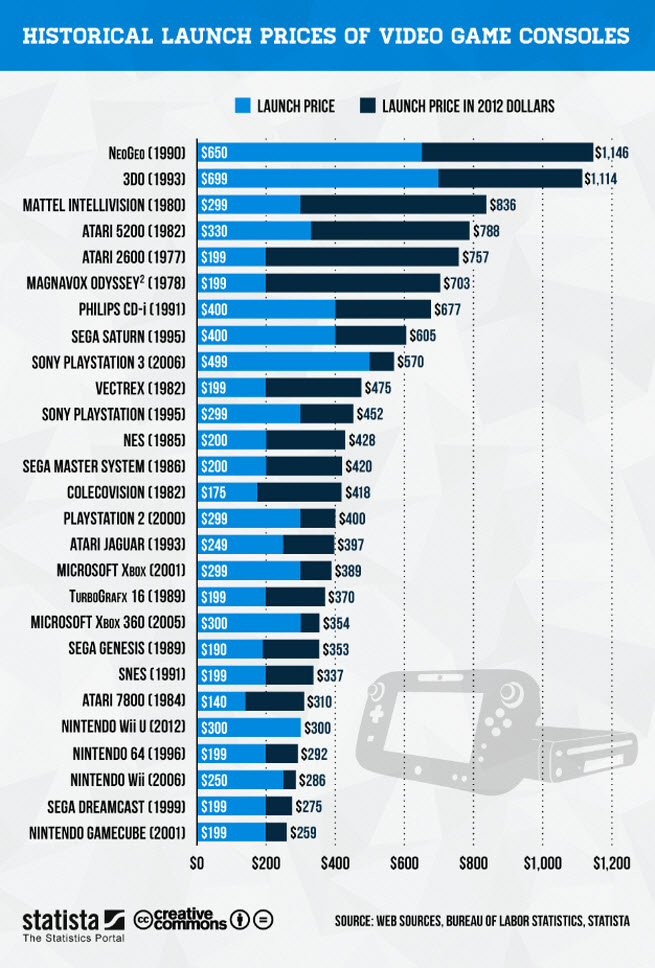

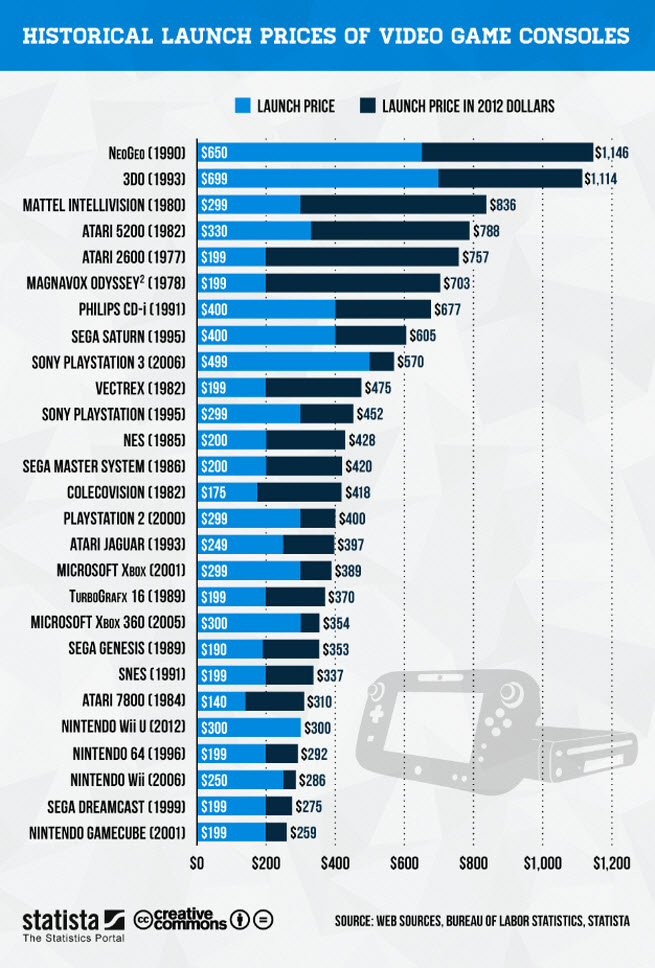

In the context of historical console pricing, $400-450 isn't that expensive assuming the hardware is next generation and there are new exciting games/IPs that take advantage of new technology.

http://www.gamasutra.com/view/news/177337/sizing_up_wii_us_price_tag_against_history.php#.URHOo6VZUeo

$400-450 would fall roughly at a mid-point relative to historical console prices adjusted for inflation.

If people don't want to pay $400-450+ for a next generation console, they can always wait 2 years for prices to drop. This is actually not a bad idea since bugs get worked out and the console's gaming library expands.

The funny part is the same people who complain about $400+ hardware don't talk about what is often a greater cost of console ownership over its useful life -- software. For instance, games like COD:BO2 cost $59.99 and then come with $30-40 of DLC. Borderlands 2 was what, $59.99, plus a $29.99 Season Pass?

The average person in NA doesn't even blink at a $60 a month 2-year smartphone plan on top of $199-299 for the phone, but then complains about spending $400 on a console that will last 6-7 years with 0 upgrades. The same people also pony up $ for Xbox Live Gold fees and amass a collection of 50+ videogames over 6-7 years. If someone purchased an Xbox 360 on launch day and paid $40-50 a year for Xbox Live, by now they would have spent $280-350 on Xbox Live alone.
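For anyone who wants to check the math, here is a quick back-of-the-envelope sketch in Python. It only uses the figures quoted above; the 7-year ownership span and the `total_cost` helper are my own assumptions for illustration, not exact numbers.

```python
# Back-of-the-envelope ownership costs using the figures quoted in this post.
# Assumption: 7 years of console ownership (roughly launch day to now).

def total_cost(upfront, recurring, periods):
    """Upfront price plus a recurring fee paid for a number of periods."""
    return upfront + recurring * periods

YEARS = 7  # assumed console lifespan, not an exact figure

# Xbox Live Gold alone at $40-50 per year
live_low = total_cost(0, 40, YEARS)
live_high = total_cost(0, 50, YEARS)

# Smartphone: $199-299 handset plus $60 a month over a 2-year contract
phone_low = total_cost(199, 60, 24)
phone_high = total_cost(299, 60, 24)

# $400 console plus Xbox Live Gold at the high end of the range
console_with_live = total_cost(400, 50, YEARS)

print(f"Xbox Live over {YEARS} years: ${live_low}-{live_high}")
print(f"Smartphone over 2 years: ${phone_low}-{phone_high}")
print(f"$400 console + Live over {YEARS} years: ${console_with_live}")
```

Under those assumptions, one 2-year phone contract already costs more than double a $400 console with 7 years of Xbox Live on top.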

On another note, when the Xbox 360 250GB bundle (a 7+ year old console) is still selling for $299.99, it's mind-boggling that people are complaining about possibly paying $400-450 for next gen consoles.

http://www.bestbuy.com/site/Microsoft+-+Xbox+360+250GB+Bundle/6693058.p?id=1218782894450&skuId=6693058&st=xbox%20360%20250gb&cp=1&lp=2

I am guessing mathematics and capital budgeting decisions are not strong points of the average console gamer!

Furthermore, videogaming is among the cheapest hobbies compared to just about anything else out there. The average family spends > $400 on attending a single football game. What about learning to play sports like tennis, golf, skiing/snowboarding? All way more expensive than videogaming. Gun ownership/shooting range, learning how to fly a plane, sailing, traveling, photography, wine tasting/china collecting? All more expensive than console gaming.