fatslob-:O said:

| Pemalite said:

Then it all died out for a while, probably because the PC was advancing rapidly and the Xbox 360 and PlayStation 3 launched, so it was easier and cheaper for developers to ditch tiled rendering and push out games faster.

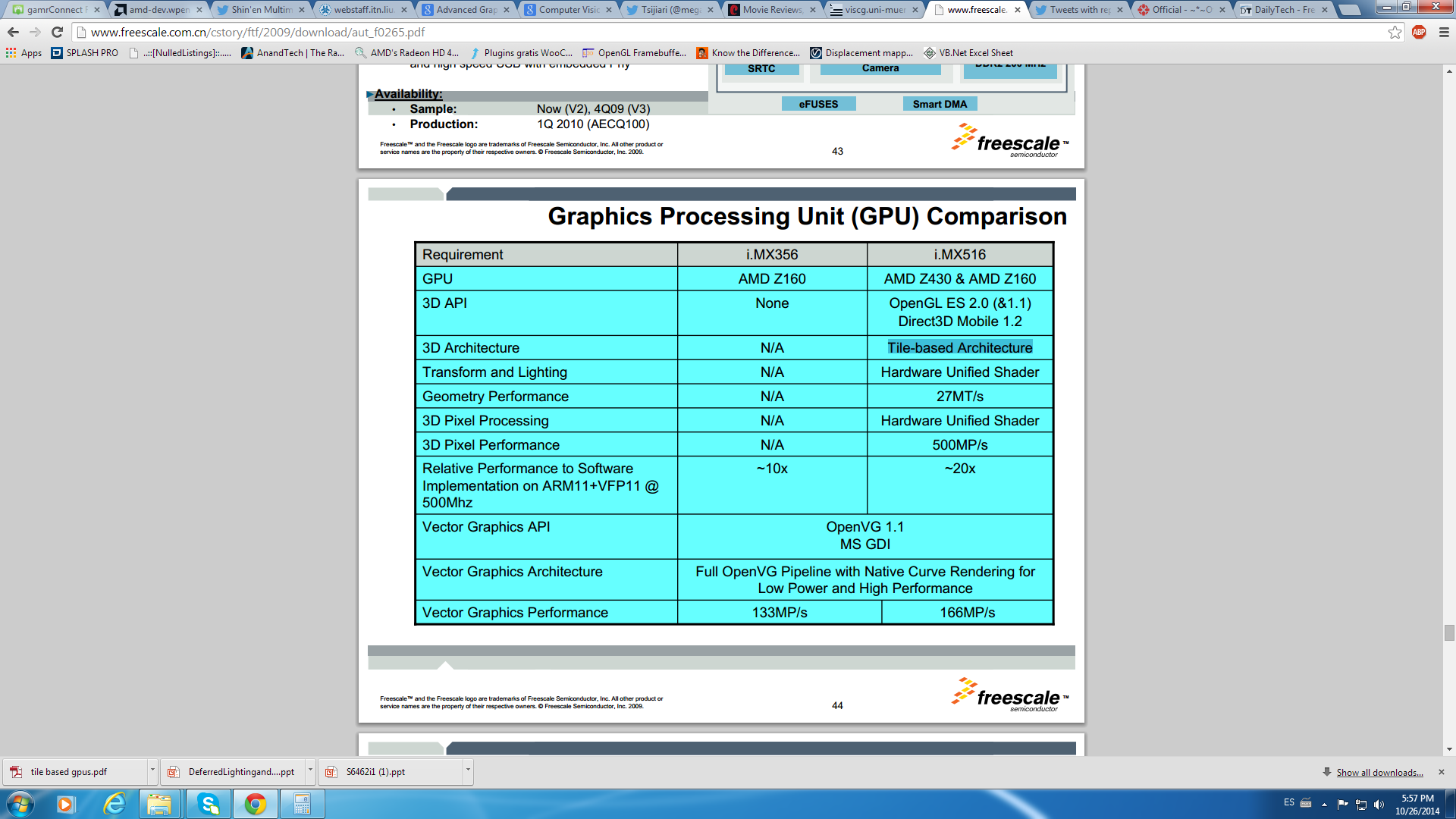

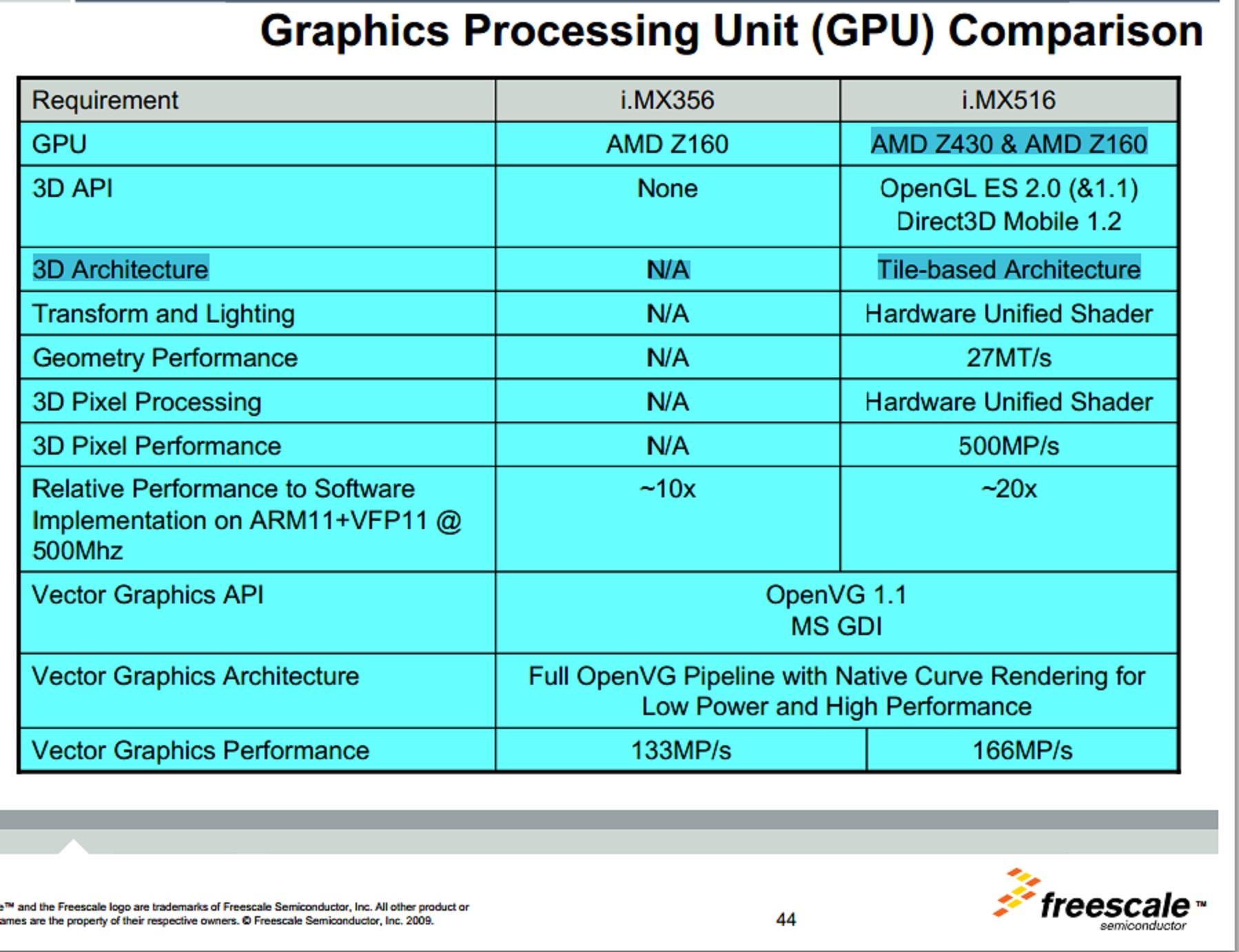

Of course, things eventually changed: mobile came storming into the market, with ARM Mali, PowerVR and Qualcomm's Adreno (a.k.a. mobile Radeon) all pushing tiled approaches for power and efficiency reasons.

Then the Xbox 360 and PlayStation 3 simply got old and people still demanded better graphics, so tiled rendering became a solution once again, and it seems to have carried on since, which is a good sign.

|

That's half the story but ...

They died out because they couldn't solve the hardware accelerated transform and lighting issue fast enough.

After adding shaders to their designs, they always made the most sense in the mobile space, where bandwidth was scarce. Also, I don't think Adreno features tile-based rendering.

I'm not so sure that tile-based rendering was the solution to push for better graphics. It's really good for geometry binning, but that's about it. I almost forgot: tile-based rendering also came back to desktops, with 2nd-gen Nvidia Maxwell and Intel Haswell featuring it! Both of those GPU architectures support conservative rasterization, which enables programmable binning, so it's possible to do some tile-based rendering. Maybe AMD can support conservative rasterization on their GCN GPUs too, just like their hardware has always supported volume tiled resources as well as pixel shader ordering ...

|

True! There were issues getting TnL working with tile-based rendering.

Ironically, TnL with tile-based rendering only became possible when manufacturers abolished the dedicated TnL hardware in their GPUs and performed those functions on the shader hardware instead.
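To make the ordering concrete, here is a minimal Python sketch of the binning step in a tile-based renderer. It assumes a hypothetical 32×32-pixel tile, a tiny 128×64 render target, and a simple 2D scale-and-offset standing in for the real vertex transform (TnL) stage. What it shows: a triangle can only be sorted into screen tiles once its vertices have been transformed, so transform has to run before binning. Binning by screen-space bounding box is a conservative simplification; real hardware may test triangle/tile overlap more precisely.

```python
from collections import defaultdict

TILE = 32                # hypothetical tile size in pixels
WIDTH, HEIGHT = 128, 64  # hypothetical tiny render target

def transform(v, scale, offset):
    """Stand-in for the TnL / vertex-shader stage: scale then translate a 2D vertex."""
    return (v[0] * scale + offset[0], v[1] * scale + offset[1])

def bin_triangles(triangles, scale=1.0, offset=(0.0, 0.0)):
    """Return {(tile_x, tile_y): [triangle indices]} using each triangle's screen bounding box."""
    bins = defaultdict(list)
    tiles_x = (WIDTH + TILE - 1) // TILE
    tiles_y = (HEIGHT + TILE - 1) // TILE
    for i, tri in enumerate(triangles):
        # Binning needs post-transform positions, so the transform runs first.
        pts = [transform(v, scale, offset) for v in tri]
        xs = [p[0] for p in pts]
        ys = [p[1] for p in pts]
        # Clamp the bounding box to the screen and convert it to tile coordinates.
        x0 = max(0, int(min(xs)) // TILE)
        x1 = min(tiles_x - 1, int(max(xs)) // TILE)
        y0 = max(0, int(min(ys)) // TILE)
        y1 = min(tiles_y - 1, int(max(ys)) // TILE)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(i)
    return dict(bins)

triangles = [
    [(2, 2), (20, 2), (2, 20)],      # small triangle inside tile (0, 0)
    [(20, 10), (60, 10), (40, 40)],  # larger triangle overlapping four tiles
]
print(bin_triangles(triangles))
# {(0, 0): [0, 1], (1, 0): [1], (0, 1): [1], (1, 1): [1]}
```

Each tile's list is then rasterized on its own, which is what lets a tiler keep the color and depth for one tile in fast on-chip memory.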

megafenix said:

It's true that multipass is an option, but multiple passes put too much work on the GPU compared to a single pass.

http://www.orpheuscomputing.com/downloads/ATI-smartshader.pdf

"

The key improvements offered by ATI’s SMARTSHADER™ technology over existing hardware vertex and pixel shader implementations are:

• Support for up to six textures in a single rendering pass, allowing more complex effects to be achieved without the heavy memory bandwidth requirements and severe performance impact of multi-pass rendering

Every time a pixel passes through the rendering pipeline, it consumes precious memory bandwidth as data is read from and written to texture memory, the depth buffer, and the frame buffer. By decreasing the number of times each pixel on the screen has to pass through the rendering pipeline, memory bandwidth consumption can be reduced and the performance impact of using pixel shaders can be minimized. DirectX® 8.1 pixel shaders allow up to six textures to be sampled and blended in a single rendering pass. This means effects that required multiple rendering passes in earlier versions of DirectX® can now be processed in fewer passes, and effects that were previously too slow to be useful can become more practical to implement

"

http://books.google.com.mx/books?id=BV8MeSkHaD4C&pg=PA64&lpg=PA64&dq=multi+pass+rendering+vs+single+pass&source=bl&ots=oUGfAJqSQO&sig=2y6jekyjXj1FpAxE8wICmzw5B1E&hl=es&sa=X&ei=vyNNVOPsPKHmiQLFvIDICQ&ved=0CDwQ6AEwBA#v=onepage&q=multi%20pass%20rendering%20vs%20single%20pass&f=false

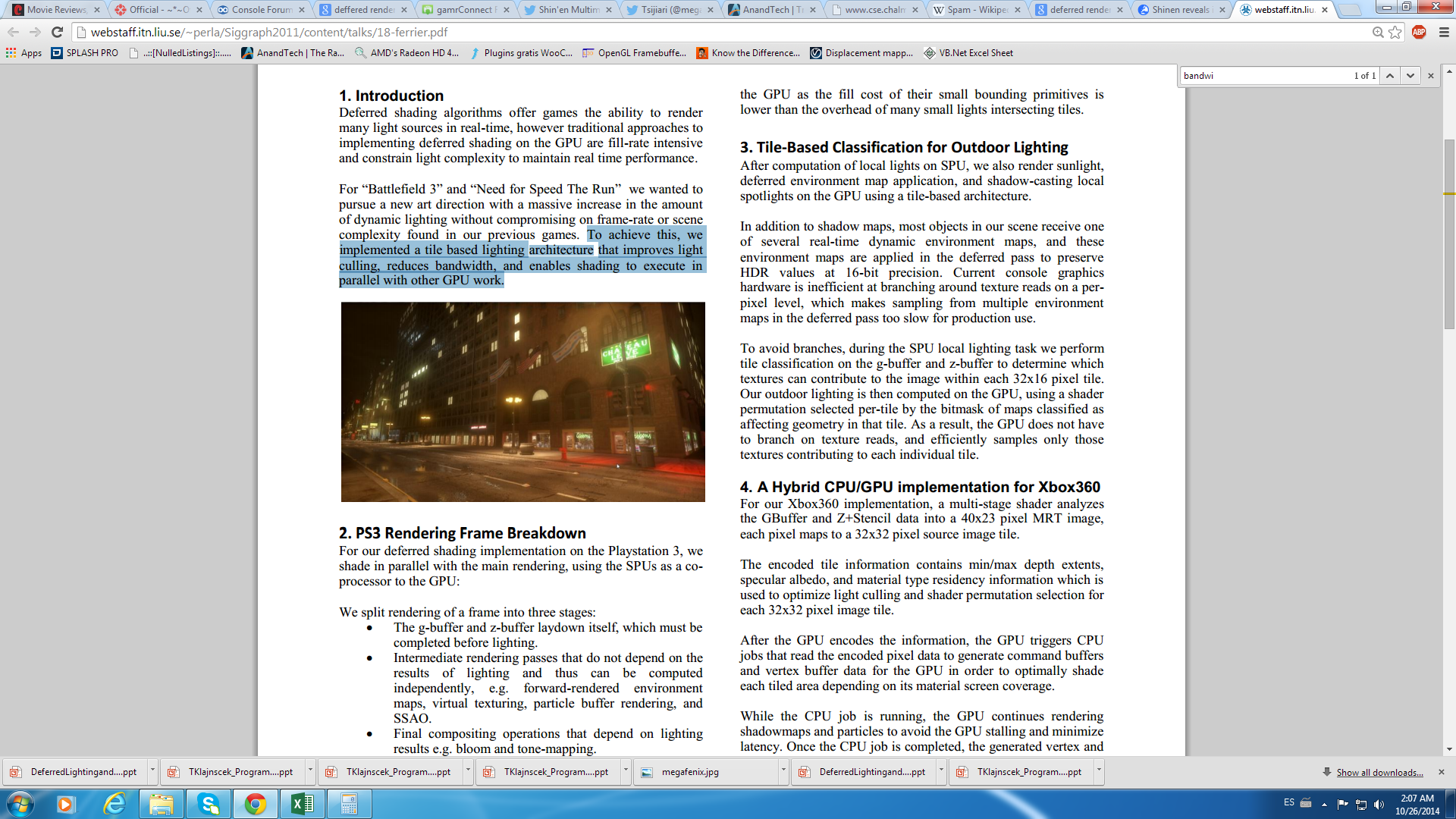

As you can read in the developers' diary, they implemented deferred rendering using 5 SPUs of the PS3 (there are only 8, and one of them is not available for games) and by running work in parallel across the Xbox 360's GPU and CPU. The key advantage of G-buffers is that you use little shader power, but here the PS3 and 360 had to put a lot of pressure on the hardware to achieve it, which almost nullifies one of the technique's advantages, due to their lack of memory bandwidth. Since the Wii U's eDRAM has plenty of bandwidth, it doesn't need multipass or too much shader power to achieve the technique, and it performs a lot better. On the 360, I'd bet that, besides the pressure on the GPU, 720p was done with a single buffer rather than double buffering, since that would reduce eDRAM consumption to 5MB, and with some trickery the rest could be used for deferred rendering (normally 12MB of eDRAM would do, but it isn't there).

You can read here about the problems developers went through to implement deferred rendering on the last-generation consoles. Obviously, requirements like needing 5 SPUs out of the 8 that exist are not cheap, and using parallelism across the 360's GPU plus help from the CPU isn't cheap either.

http://webstaff.itn.liu.se/~perla/Siggraph2011/content/talks/18-ferrier.pdf
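The trade described here (spend memory on a G-buffer to save shading work) can be illustrated with a toy count of lighting evaluations. This is a minimal Python sketch under simplifying assumptions: fragments are hypothetical (pixel, depth, albedo) tuples, "lighting" is just albedo times each light's intensity summed, and there is no MSAA. Forward shading lights every fragment that passes the depth test when it arrives, including ones that are later overwritten; deferred shading first depth-tests into a G-buffer and then lights each covered pixel once. The extra G-buffer reads in the lighting pass, which is where the bandwidth cost comes from, are not modeled.

```python
def forward_shade(fragments, lights):
    """Light each fragment that passes the depth test at the moment it arrives."""
    depth, color = {}, {}
    evaluations = 0
    for pixel, z, albedo in fragments:
        if z < depth.get(pixel, float("inf")):
            depth[pixel] = z
            color[pixel] = sum(albedo * l for l in lights)
            evaluations += len(lights)
    return color, evaluations

def deferred_shade(fragments, lights):
    """Geometry pass fills a G-buffer; the lighting pass then shades each pixel once."""
    gbuffer = {}  # pixel -> (depth, albedo); a real G-buffer also stores normals etc.
    for pixel, z, albedo in fragments:
        if z < gbuffer.get(pixel, (float("inf"), None))[0]:
            gbuffer[pixel] = (z, albedo)
    color = {}
    evaluations = 0
    for pixel, (_, albedo) in gbuffer.items():
        color[pixel] = sum(albedo * l for l in lights)
        evaluations += len(lights)
    return color, evaluations

# Three overlapping surfaces drawn back to front over the same two pixels.
fragments = [
    ((0, 0), 0.9, 0.2), ((1, 0), 0.9, 0.2),
    ((0, 0), 0.5, 0.4), ((1, 0), 0.5, 0.4),
    ((0, 0), 0.1, 0.8), ((1, 0), 0.1, 0.8),
]
lights = [1.0, 0.5, 0.25, 0.125]  # hypothetical per-light intensities
fwd_color, fwd_cost = forward_shade(fragments, lights)
def_color, def_cost = deferred_shade(fragments, lights)
print(fwd_cost, def_cost)  # 24 8
```

Both paths produce the same image. The deferred path's lighting cost depends on pixels × lights rather than on how much overdraw the scene has.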

On the Wii U you won't have to do this, and the primary advantage of deferred rendering will be available (using little shader power by trading bandwidth). Triple-buffered 720p framebuffers take only 10.8MB, whereas on the 360, 10MB was barely enough for 720p with double buffering. As you can read in the first article, developers wanted to use deferred rendering on the 360 but needed 12MB of eDRAM that wasn't there (that's why, some time later, developers used the trick found in the second article). In the Wii U's case, some rough calculations suggest a G-buffer would take about 8.64MB of eDRAM; combined with the triple framebuffer that is only about 19.44MB, which leaves 12.6MB of eDRAM plus the extra 3MB of faster eDRAM and SRAM. That's why, besides the triple framebuffering and G-buffer, Shin'en is still able to fit some intermediate buffers there.
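The buffer arithmetic above can be checked with a few lines of Python. This is a minimal sketch, assuming 32-bit color (4 bytes per pixel) at 1280×720 with no MSAA, decimal megabytes, and a 32MB eDRAM budget (the total implied by the 19.44MB/12.6MB figures). The G-buffer layout is not given, so the 8.64MB estimate is taken as-is rather than derived.

```python
BYTES_PER_MB = 1_000_000  # decimal megabytes, matching the rounding style used above

def render_target_mb(width, height, bytes_per_pixel, count=1):
    """Memory for `count` render targets of the given size, in decimal MB."""
    return width * height * bytes_per_pixel * count / BYTES_PER_MB

EDRAM_MB = 32.0    # eDRAM budget implied by the figures in the post
GBUFFER_MB = 8.64  # the post's G-buffer estimate, used as-is

one_720p = render_target_mb(1280, 720, 4)              # 32-bit color, no MSAA
triple_720p = render_target_mb(1280, 720, 4, count=3)
remaining = EDRAM_MB - triple_720p - GBUFFER_MB

print(f"one 720p buffer: {one_720p:.4f} MB")   # 3.6864 MB
print(f"triple buffer:   {triple_720p:.4f} MB") # 11.0592 MB
print(f"left over:       {remaining:.2f} MB")   # 12.30 MB
```

With exact sizes, three 720p color buffers come to about 11.06MB (10.55 MiB), slightly more than the quoted 10.8MB, which corresponds to rounding each buffer down to 3.6MB. The leftover is correspondingly about 12.3MB rather than 12.6MB.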

As for the tiling technology, yeah, it's something pretty cool, and something tells me Shin'en is using it in Fast Racing Neo. Look at the terrain here:

It looks like the terrain is composed of tiles. I bet they used it because it's impossible to fit 4k-8k textures in texture memory (even BC1 compression gives you about 10MB of storage per texture), so dividing the textures into tiles makes it possible to use the texture cache or texture memory.

http://books.google.com.mx/books?id=bmv2HRpG1bUC&pg=PA281&lpg=PA281&dq=tiles+textures+tessellation&source=bl&ots=6hOJ8zd7wA&sig=mtlU58XVFicKUMz5klAr4cDRX9w&hl=es&sa=X&ei=TPQ3VNKyCtKRNs7vgqAD&ved=0CFwQ6AEwCw#v=onepage&q=tiles%20textures%20tessellation&f=false
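The texture-size figure can be checked with a short calculation. This is a minimal Python sketch, assuming square textures, BC1's fixed 8 bytes per 4×4 texel block, and a hypothetical 128×128-texel tile; the tile size is illustrative and not taken from the post or the linked book.

```python
import math

BC1_BLOCK_BYTES = 8  # BC1 stores each 4x4 texel block in 64 bits
MIB = 2**20

def bc1_level_bytes(width, height):
    """Bytes for one BC1 mip level (dimensions round up to whole 4x4 blocks)."""
    return math.ceil(width / 4) * math.ceil(height / 4) * BC1_BLOCK_BYTES

def bc1_chain_bytes(size):
    """Bytes for a square BC1 texture with a full mip chain down to 1x1."""
    total = 0
    while True:
        total += bc1_level_bytes(size, size)
        if size == 1:
            return total
        size //= 2

def tiles_for(size, tile):
    """Number of tile x tile pieces needed to cover a size x size top mip level."""
    return math.ceil(size / tile) ** 2

for size in (4096, 8192):
    top = bc1_level_bytes(size, size) / MIB
    chain = bc1_chain_bytes(size) / MIB
    print(f"{size}x{size}: {top:.2f} MiB top level, {chain:.2f} MiB with mips")

TILE = 128  # hypothetical tile edge in texels
print(tiles_for(4096, TILE), "tiles of", bc1_level_bytes(TILE, TILE), "bytes each")
```

By this count a 4096×4096 BC1 texture is 8 MiB for the top level and about 10.67 MiB with mips, close to the 10MB mentioned above; an 8192×8192 one is four times larger. Splitting it into tiles means only the tiles actually being sampled need to be resident at any one time.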

"

|

No offense, Megafenix, but for the love of god, stop posting ancient, irrelevant crap.

ATI's "SmartShader" doesn't apply to GPUs today; that crap was released 13 years ago and ended 7 years ago. Why you would think that DirectX 9 shader operations apply to a DirectX 11 world beats me. AMD doesn't even use VLIW anymore, let alone separate vertex and pixel shader units in the hardware.

As for your "6 textures in a single pass": that's called single-pass multi-texturing, and that technology has been around for decades. Shall I school you on it? Hint: it has nothing to do with the multi-pass that everyone else is talking about.

Another issue in what you copy/pasted is your claim that the PlayStation 3 has 8 SPUs and only 7 are available for games. Well... no. The PlayStation 3 has 8; one is disabled to increase yields and another is reserved for other tasks, which makes 6.
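For illustration, here is a minimal Python sketch of the difference between single-pass multi-texturing and multi-pass blending. It assumes three hypothetical textures combined by multiplication (think base × lightmap × detail) over a 4×4 patch of pixels. Both paths produce the same image; the difference is how often the framebuffer is touched. Single-pass writes each pixel once, while multi-pass blends each extra texture in with a read-modify-write of the framebuffer.

```python
def single_pass(textures, pixels):
    """Sample every texture in one pass and write the combined result once per pixel."""
    framebuffer = {}
    fb_reads = fb_writes = 0
    for p in pixels:
        value = 1.0
        for tex in textures:
            value *= tex[p]  # modulate, like a multi-texture combiner stage
        framebuffer[p] = value
        fb_writes += 1
    return framebuffer, fb_reads, fb_writes

def multi_pass(textures, pixels):
    """Draw one texture per pass, multiplying it into what is already in the framebuffer."""
    framebuffer = {}
    fb_reads = fb_writes = 0
    for i, tex in enumerate(textures):
        for p in pixels:
            if i == 0:
                framebuffer[p] = tex[p]  # first pass just writes the base texture
            else:
                framebuffer[p] = framebuffer[p] * tex[p]  # blend = read-modify-write
                fb_reads += 1
            fb_writes += 1
    return framebuffer, fb_reads, fb_writes

pixels = [(x, y) for x in range(4) for y in range(4)]
textures = [{p: v for p in pixels} for v in (0.5, 0.25, 0.75)]  # hypothetical flat textures
img1, r1, w1 = single_pass(textures, pixels)
img2, r2, w2 = multi_pass(textures, pixels)
print(f"single-pass: reads={r1} writes={w1}")  # reads=0 writes=16
print(f"multi-pass:  reads={r2} writes={w2}")  # reads=32 writes=48
```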

jonathanalis said:

Some questions:

They promised 8k textures. Are they still on that?

The Xbox One also has 32MB of ESRAM, and the PS4 doesn't.

Why does every Xbox One game have problems with 1080p while no PS4 game does?

So this kind of RAM can't compensate for some raw power? |

Actually, eDRAM/eSRAM can compensate for bandwidth deficits in a console to a certain degree; that's the entire point of its invention and its historical use, even in Sony consoles.

With regard to the Xbox One and PlayStation 4 specifically, though, there is significantly more at play than just bandwidth differences, and that is going to hamper the Xbox One, but I would suggest waiting to see what 343i does with Halo 5 to see what the hardware is capable of.

Also, please don't use "raw power" in reference to RAM/bandwidth. RAM doesn't have any compute hardware; it cannot accelerate a damn thing.

If you are truly worried about the hardware, the graphics, resolution, framerates, then I suggest you do one thing... Drop all your underpowered consoles off a cliff, build a PC and join the PC Gaming Master Race.