Pemalite said:

Peh said:

Huh? There is screen tearing on Switch?

I have only heard of the screen tearing once in a while when playing BotW, for some unknown reason, which has also happened to me 4 times in over 100 hours of play. But so far, there is no screen tearing of the kind that is common on the consoles from Sony and Microsoft.

|

The Switch's console generation is far from over; there are a few thousand games yet to be released. Every console has games that will have screen tearing. (Except Scorpio via FreeSync.)

Just saying it could easily have been avoided with a hardware solution, so you don't need to resort to things like double/triple-buffered v-sync, which destroys any semblance of responsiveness and has other minor caveats.
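
The responsiveness cost of buffered v-sync can be illustrated with simple arithmetic. This is a minimal sketch, assuming a simplified model of double-buffered v-sync in which a finished frame is only shown at the next display refresh and the next frame cannot start before that swap. The function name and the 60 Hz default are assumptions for illustration, not taken from any console SDK.

```python
import math

def vsync_frame_interval_ms(render_ms, refresh_hz=60.0):
    """Time between displayed frames under the simplified double-buffered model.

    A frame that misses a refresh has to wait for the next one, so the
    interval is the render time rounded up to a whole number of refreshes.
    """
    period_ms = 1000.0 / refresh_hz
    refreshes_needed = max(1, math.ceil(render_ms / period_ms))
    return refreshes_needed * period_ms

# A frame that renders in 10 ms fits in one 60 Hz refresh (about 16.7 ms).
print(f"{vsync_frame_interval_ms(10.0):.1f} ms")  # 16.7 ms
# A frame that takes 17 ms just misses it and waits for the second refresh.
print(f"{vsync_frame_interval_ms(17.0):.1f} ms")  # 33.3 ms
```

Under this model, missing the refresh by a fraction of a millisecond doubles the time until the frame is shown, which is the kind of delay a variable refresh rate display is meant to avoid.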

|

Eh? What are you talking about? Afaik Nintendo uses vsync. There is no screen tearing on Nintendo consoles. Input lag is a different issue. You know that the TV also needs FreeSync in order for it to work? How many TVs currently have this feature? Btw, Scorpio is not even out yet. I really don't know what kind of point you are trying to make here.

Pemalite said:

| Peh said:

With the exception of FXAA, every single use of AA impacts both performance and image quality. If the result is an unstable framerate and a blurry image, then it's better to avoid AA altogether.

|

Wrong.

|

Well, that's some well-reasoned argument. I just don't know how I can argue against that...

Pemalite said:

Peh said:

No, it washes out the textures even more and blurs the edges out. A result is a blurry image. A good use of AA is just to render the image at a higher resolution and downscale it (SSAA). This results in the best image quality, yet at the most cost of performance.

I don't know about Wii games using AA. The 3DS can only use AA by disabling the 3D. The performance otherwise used to render the 2nd screen goes to AA rendering instead. So, they just went with it. Xbox 360 and PS3 use AA, but this results in a lot of jaggies. If that is your argument for using AA, then it is not a good one.

Just as a side note: while the PS3 and Xbox 360 were pretty powerful for their time, most of their power went to polygon count and textures, and the rest to bad image quality: screen tearing and bad use of AA -> blurry and jaggies. I don't know about the PS2.

|

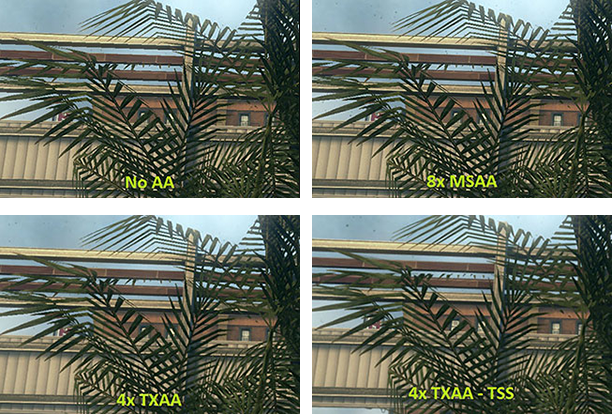

Wrong. SSAA is not the only form of "good" Anti-Aliasing. And it is most certainly not a form of Anti-Aliasing I expect out of fixed hardware of moderate capabilities. I have already touched upon it in my prior posts, but I shall do so again.

There are forms of Anti-Aliasing which detect the edges of geometry, which is where aliasing typically occurs; they then sample said edges and apply various patterns/filters to the affected area.

Now taking that same approach by detecting the edges of Geometry, some methods of Anti-Aliasing will render the edges of geometry at a significantly higher resolution and downscale them. It's a more efficient form of SSAA.

Thus the Anti-Aliasing isn't working on the entire image at a time, which makes it more palatable for low-end hardware like the Switch.
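
The idea of filtering only the detected edges can be sketched roughly as follows. This is a deliberately simplified illustration in Python, assuming a grayscale image stored as a list of rows and a contrast threshold chosen arbitrarily; it is not the algorithm used by any particular console or driver, and `smooth_edges` is a hypothetical name.

```python
def smooth_edges(image, threshold=64):
    """Blend only pixels whose contrast with a direct neighbour is high.

    Flat regions are left untouched, so the filter does far less work
    than one applied to the whole image.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # copy so reads use the original values
    for y in range(height):
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < height and 0 <= nx < width
            ]
            if not neighbours:
                continue  # a 1x1 image has nothing to compare against
            contrast = max(abs(image[y][x] - n) for n in neighbours)
            if contrast >= threshold:  # treated as an edge pixel
                total = image[y][x] + sum(neighbours)
                out[y][x] = total / (1 + len(neighbours))
    return out

# A hard vertical edge between black (0) and white (255).
hard_edge = [[0, 0, 255, 255] for _ in range(3)]
print(smooth_edges(hard_edge)[1])  # [0, 51.0, 204.0, 255]
```

Only the two columns next to the edge change; the outer columns keep their original values, which is why this kind of approach costs less than processing every pixel.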

Again. There are no excuses for the Switch if even the paltry hardware of the 3DS can perform Anti-Aliasing.

|

Read what I wrote:

"No, it washes out the textures even more and blurs the edges out. A result is a blurry image. A good use of AA is just to render the image at a higher resolution and downscale it (SSAA). This results in the best image quality, yet at the most cost of performance."

I was simply talking about how the best image quality can be achieved, not what the most efficient way is. There are obviously efficient ways to make the image appear less aliased. But even an efficient method with good image quality will come with a performance cost. You seem to ignore this fact.
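
For reference, the "render at a higher resolution and downscale" approach (SSAA) can be sketched in a few lines. This is a minimal Python illustration, assuming a grayscale image stored as a list of rows and a plain box filter for the downscale; real implementations may use other filters, and `downsample_box` is a hypothetical name.

```python
def downsample_box(image, factor):
    """Average each factor x factor block of pixels into one output pixel."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be a multiple of the factor")
    out = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            block = [
                image[y + dy][x + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# A 4x4 "supersampled" render of a diagonal edge, reduced to 2x2.
supersampled = [
    [0, 0, 0, 255],
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [255, 255, 255, 255],
]
print(downsample_box(supersampled, 2))  # [[0.0, 191.25], [191.25, 255.0]]
```

The intermediate value 191.25 is the smoothed edge. The cost is that a factor of 2 means rendering four times as many pixels, which is the performance trade-off being argued about here.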

I don't know why you keep making the comparison to the 3DS, because the AA on the 3DS just gives a blurry image.

Pemalite said:

Peh said:

Do you own any 4k devices, and can you play on them? Just a question. I just want to know if you have actually had any experience with native 4k gaming, on PC for example. |

I have actually had a triple 1080P set-up and a triple 1440P set-up... which are 5760x1080 and 7680x1440 (the latter being more pixels than 4k) respectively.
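
The pixel counts behind these resolutions are easy to check. This is a small worked example in Python, assuming "4k" here means UHD at 3840x2160; the dictionary labels are just for illustration.

```python
# Total resolution of each setup as (width, height) in pixels.
setups = {
    "triple 1080p": (5760, 1080),
    "triple 1440p": (7680, 1440),
    "4K UHD": (3840, 2160),  # assumption: "4k" means 3840x2160
}

pixel_counts = {name: width * height for name, (width, height) in setups.items()}

for name, count in pixel_counts.items():
    print(f"{name}: {count:,} pixels")
# triple 1080p: 6,220,800 pixels
# triple 1440p: 11,059,200 pixels
# 4K UHD: 8,294,400 pixels
```

By this count the triple 1440p setup pushes more pixels than a single 4K UHD display, while the triple 1080p setup pushes fewer.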

I currently use a single 2560x1440 display as my primary driver, so I am certainly not a High-Definition/Full High-Definition peasant.

I have used professional 4k monitors and projectors for work purposes at my last job.

|

So, the answer is no.

The issue is not how many monitors you can combine into whatever resolution, because each monitor you are using is still limited to 1440p or 1080p. What also matters is the PPI and the distance to a single monitor. Even if you can display 5760x1080, each display still only shows 1920x1080, which makes aliasing more obvious. A higher resolution at the same display size makes aliasing less noticeable, so less AA needs to be applied for good image quality. So there are many factors to take into account.
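
The PPI argument can be made concrete with the usual formula: diagonal resolution in pixels divided by diagonal size in inches. This is a minimal Python sketch; the 24-inch panel size is an assumed example value, not something stated in this thread.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_inches):
    """Pixel density of a display from its resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_inches

# Same assumed 24-inch panel size, two different resolutions.
print(f"1080p: {pixels_per_inch(1920, 1080, 24):.1f} PPI")  # 1080p: 91.8 PPI
print(f"4K:    {pixels_per_inch(3840, 2160, 24):.1f} PPI")  # 4K:    183.6 PPI
```

Putting three 1080p panels side by side raises the total pixel count but leaves the density of each panel unchanged, so edges look just as stepped as on a single 1080p display at the same viewing distance.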

Pemalite said:

Peh said:

When I am talking about CRTs, I am mainly talking about TVs, not monitors. Besides PAL, NTSC and SECAM there wasn't much else during that time for TVs. A home console is attached to a TV most of the time, so I don't know why you had to go for higher-resolution CRTs, which the console was not developed for. My point still stands.

|

There are High-Definition CRT TVs. So your point is moot.

Some of them like the LG 32fs4d even had HDMI.

|

You missed the point. When a console is being developed, the company looks at statistics on how many and what kind of devices its customer base has, and at what the future could look like in the next 4-5 years (simply speaking, because that task is a bit more complicated). If the majority of people are using simple CRTs, be it NTSC, PAL or SECAM, and the next generation of TVs is nowhere in sight, I will focus on developing for these devices. (Not taking stupid design choices into consideration.)

Even if the LG 32fs4d, which I am hearing of for the first time (which also doesn't matter), had been available back in the 90s: how many customers do you think would have had this device, and would it have been worth developing for? You can answer this question on your own.