JEMC said:

shikamaru317 said:

Just speculating based on the previous gen, where there were the 390 and two main Fury cards, as well as the fact that AMD will probably want three competitors for the 1070, 1080, and 1080 Ti respectively. While there are only two Vega chips in production, as you said, there is room for a cut-down version of the bigger Vega chip imo.

|

Last gen AMD had way too many cards on the market; the Fury, R9 Nano and R9 390X, for example, basically competed against each other. AMD would be stupid to make the same mistake again.

But I agree with you that between the 2304 SPs of the 480 and the rumored 4096 SPs of the full Vega chip, there's enough room for another two cards with 2816 and 3584 SPs respectively (those are the specs of the 390X and Fury).

|

The entire 300 series was rebadges, though, with Fury added on top as a "halo" product and a test vehicle for mass-produced HBM, and the Nano reserved for a more niche market.

The 390 and 390X were just rebadged Radeon R9 290 and 290X parts, with the bulk of the 200 series being rebadged HD 7000 parts.

AMD has simply stagnated, not just in terms of rebadged hardware but also in prices, and that is reflected in their market share. They are turning that around... but it will sadly take another couple of years to see the fruits of AMD's new strategy.

Even the 400 series will likely include a substantial amount of rebadged hardware, especially in the low end.

If Vega doesn't launch until next year, it might not launch under the 400 series lineup but under the 500 series instead, alongside Polaris and general GCN 1.0/1.1/1.2 rebadges.

JEMC said:

shikamaru317 said:

Same here. The 490 needs to be able to compete against the 1070 in the $350-$400 range. While a dual-480 card would be able to match the 1070 based on 480 Crossfire benchmarks, it would also likely cost more than the 1070 and use nearly double the power, not to mention the micro-stuttering you get in some games with Crossfire, and the fact that some games (such as Rise of the Tomb Raider) currently don't support Crossfire at all.

|

I know our discussion is a few days old, but I've realized something that may refute the idea that the 490 is a dual GPU card with Polaris 10 chips.

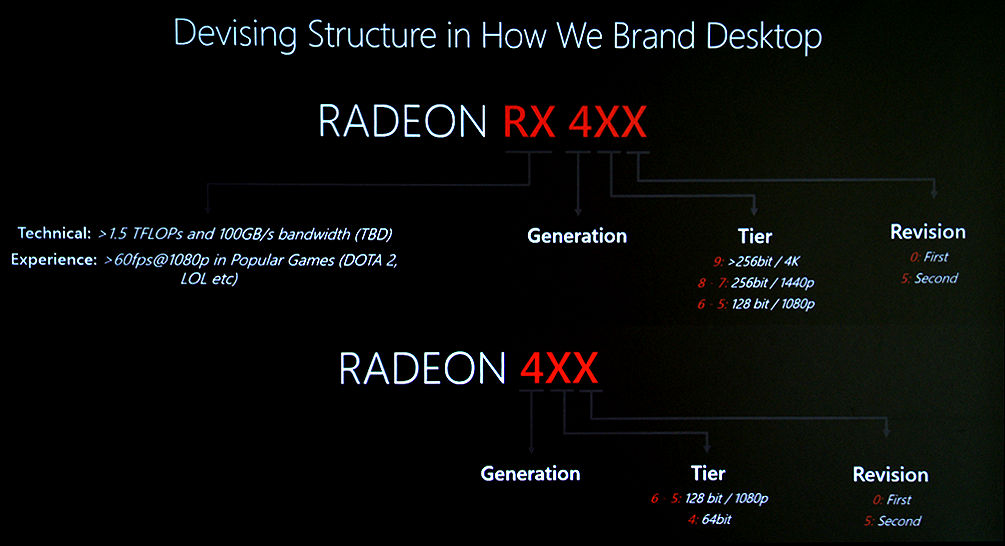

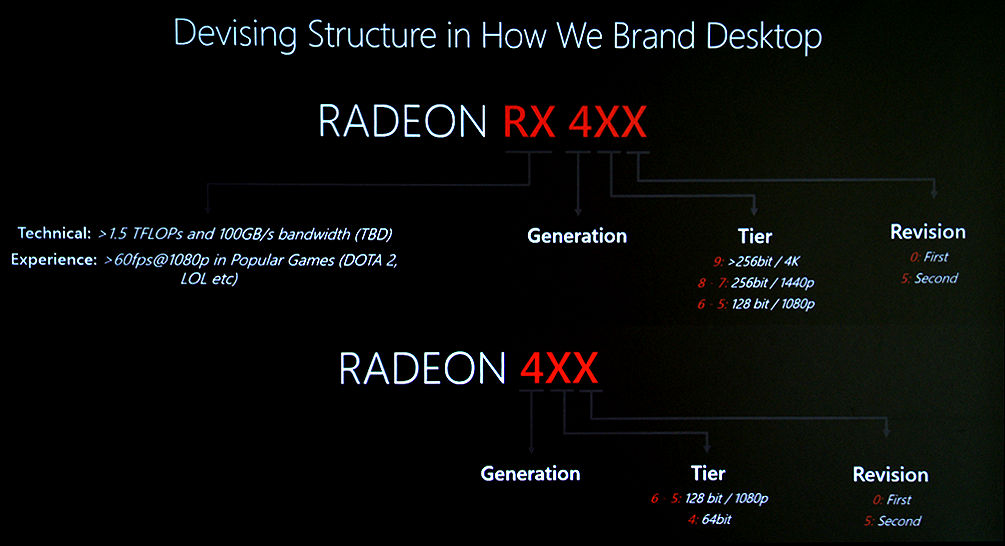

And it comes from AMD's new naming scheme, and the slide that came with it:

Note that the tier number is determined by the width of the memory bus:

9: >256bit

8 - 7: 256bit

6 - 5: 128bit

4: 64bit

A hypothetical card with two 480 GPUs would still have 256-bit memory controllers, so by AMD's own rule it couldn't be named RX 490. If anything, it could be the RX 480X2.
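To make the argument concrete, here is a minimal sketch that encodes the tier rule exactly as it's paraphrased in this post (9: >256-bit, 8-7: 256-bit, 6-5: 128-bit, 4: 64-bit). The function name `allowed_tiers` is hypothetical, and treating a dual-GPU board by its per-GPU bus width is an assumption about how the rule would be applied, not something AMD has stated.

```python
def allowed_tiers(bus_width_bits: int) -> set[int]:
    """Return the RX tier digits permitted for a given memory bus width.

    Assumption: encodes the rule as paraphrased in this thread
    (9: >256-bit, 8-7: 256-bit, 6-5: 128-bit, 4: 64-bit); it is not
    an official AMD lookup table.
    """
    if bus_width_bits > 256:
        return {9}
    if bus_width_bits == 256:
        return {7, 8}
    if bus_width_bits == 128:
        return {5, 6}
    if bus_width_bits == 64:
        return {4}
    return set()  # widths the rule doesn't cover


# A dual-Polaris-10 board: each GPU keeps its own 256-bit controller,
# so (per this reading of the rule) the per-GPU width is what counts.
polaris10_bus = 256
print(9 in allowed_tiers(polaris10_bus))     # False -> not an "RX 490"
print(sorted(allowed_tiers(polaris10_bus)))  # [7, 8]
```

Under that reading, doubling the GPUs doesn't widen any single memory bus, so the card stays in the 7/8 tiers.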

|

AMD *could* also launch two dual-GPU cards.

With the Radeon 3000 and 4000 series, AMD actually released multiple X2 cards, one based on their fastest GPU and the other on a slower one, i.e. the Radeon 3850 X2 and 3870 X2, and the 4850 X2 and 4870 X2. It could be a return to form, and we could end up with both.

Alby_da_Wolf said:

About the process, yes, I know; I meant that maybe the whole range could still receive some tweaking. We know the 480's power problem was one of power management, drawing too much power from the PCIe slot instead of from the auxiliary power connector. But even after fixing that, power efficiency is quite a bit lower than promised. While it's normal for real-world performance to fall short of the ideal, maybe we can still expect improvements not only from drivers and better board design, but also from the hardware itself, if the production process can still be tweaked.

|

Well, it goes without saying that there will be some refinement in production as time goes on that should result in some reduction in power consumption after a few revisions.

But it's not going to be anything significant...

For something more significant AMD will likely need to do another respin and that won't happen for a long time yet, if ever.

Basically, the best refinement we can expect will come from custom cards.

Alby_da_Wolf said:

About APUs, I wasn't precise. I should have written about using the latest AMD GPU cores in them: not literally putting a 460 in a Zen APU, but putting the GPU power of a 460, obtained with the latest cores available, into it. Yes, a sideport for GDDR could be a nice idea to avoid wasting the GPU power of the higher-end APUs, although faster DDR4 organised in a quad-channel or, even better, eight-channel architecture could at least mitigate the problem.

|

Quad-channel and octo-channel carry increased costs due to the substantial jump in PCB traces required, which means more PCB layers and thus more engineering to route everything properly.

It's not gonna happen. :P Not in a consumer-grade APU, anyway.

DDR4 and the bandwidth-saving technology AMD has implemented in GCN 1.3/4.0 will help, but they're not going to give you high-end GPU performance. Mid-range maybe; low-end certainly.
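A rough back-of-the-envelope check on why channel count matters: theoretical peak bandwidth is bus width in bits times transfers per second, divided by 8. This sketch assumes DDR4-2400 as an example speed and an RX 460-class card with a 128-bit GDDR5 bus at 7 Gbps for comparison; both figures are illustrative inputs, and `peak_bandwidth_gbs` is a hypothetical helper. Real-world numbers are lower, and an APU also shares system memory with the CPU cores.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak bandwidth in GB/s: bits per transfer * MT/s / 8 / 1000."""
    return bus_width_bits * transfer_rate_mts / 8 / 1000


DDR4_MTS = 2400    # assumed example DDR4 speed
CHANNEL_BITS = 64  # one DDR4 channel is 64 bits wide

for channels in (2, 4, 8):
    bw = peak_bandwidth_gbs(channels * CHANNEL_BITS, DDR4_MTS)
    print(f"{channels}-channel DDR4-{DDR4_MTS}: {bw:.1f} GB/s")

# Assumed RX 460-class discrete card: 128-bit GDDR5 at 7 Gbps (7000 MT/s)
print(f"128-bit GDDR5 @ 7 Gbps: {peak_bandwidth_gbs(128, 7000):.1f} GB/s")
```

This prints 38.4, 76.8 and 153.6 GB/s for dual, quad and octo channel, against 112.0 GB/s for the discrete card: dual channel offers roughly a third of what the dedicated card has on paper, which is why the extra channels (and their PCB cost) come up at all.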

Alby_da_Wolf said:

About drivers and games, thanks for all the explanations. My doubts come from the existence of games that cannot use more than one GPU, or cannot do so without causing more problems than benefits. Maybe it would be possible to transparently offer them at least part of the benefits of multiple GPUs, with APIs that keep the low-level stuff where it belongs: managed by those who designed the hardware rather than by game devs.

|

That is the direction Microsoft is taking with DirectX 12: game developers can build their own multi-GPU implementation into their games. This is what nVidia has backed when it comes to SLI support for more than two cards in games.

But... leaving it in the hands of developers means it's never going to catch on. The vast majority of PCs use a single GPU and all consoles use a single GPU, so for developers it's a waste of resources.

At the moment, though, if you want more than two GPUs for gaming, AMD is where it's at.