Here's a bit of something to keep you busy:

*** NEW CONTEST ***

Wccftech Gives Away Plenty of 2K’s Video Games (for PC)

https://wccftech.com/wccftech-gives-away-plenty-of-2k-video-games-for-pc/

Today we're kicking off a special Holiday giveaway raffle featuring two PC codes for each of the following 2K video games:

- New Tales from the Borderlands Standard Edition

- The Quarry Deluxe Edition

- Tiny Tina's Wonderlands Chaotic Great Edition

- NBA 2K23 Jordan Edition

- WWE 2K22 nWo Edition

- PGA Tour 2K23 Deluxe Edition

There's no mention of country restrictions or anything like that. Also, although the article says the contest will run until December 4th (which is impossible, since it launched yesterday), it will actually last another 11 days, with the winners announced on Saturday the 24th.

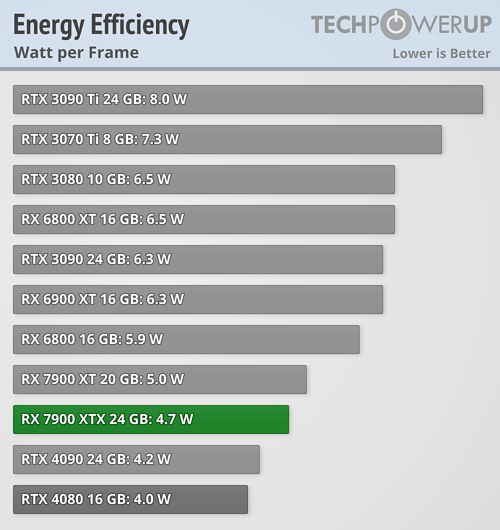

Also, before the official 7900 reviews go live later today, here are some leaks and info:

First Alleged AMD Radeon RX 7900-series Benchmarks Leaked

https://www.techpowerup.com/301992/first-alleged-amd-radeon-rx-7900-series-benchmarks-leaked

With only a couple of days to go until the AMD RX 7900-series benchmarks go live, some alleged benchmarks from both the RX 7900 XTX and RX 7900 XT have leaked on Twitter. The two cards are being compared to an NVIDIA RTX 4080 card in no fewer than seven different game titles, all running at 4K resolution. The games are God of War, Cyberpunk 2077, Assassin's Creed Valhalla, Watch Dogs: Legion, Red Dead Redemption 2, Doom Eternal and Horizon Zero Dawn. The cards were tested on a system with a Core i9-12900K CPU which was paired with 32 GB of RAM of unknown type.

Update Dec 11th: The original tweet has been removed, for unknown reasons. It could be because the numbers were fake, or because they were in breach of AMD's NDA.

AMD Allegedly Has 200,000 Radeon RX 7900 Series GPUs for Launch Day

https://www.techpowerup.com/302067/amd-allegedly-has-200-000-radeon-rx-7900-series-gpus-for-launch-day

AMD is preparing the launch of the Radeon RX 7900 series of graphics cards for December 13th. And, of course, with recent launches being coated in uncertainty regarding availability, we are getting more rumors about what the availability could look like. According to Kyle Bennett, founder of HardOCP, we have information that AMD is allegedly preparing 200,000 Radeon RX 7900 SKUs for launch day. If the information is truthful, among the 200,000 launch-day SKUs, there should be 30,000 Made-by-AMD (MBA) cards, while the rest are AIB partner cards. This number indicates that AMD's market research has shown that there will be a great demand for these new GPUs and that the scarcity problem should be long gone.

A few days ago, we reported that the availability of the new AMD Radeon generation is reportedly scarce, with Germany receiving only 3,000 MBA designs and the rest of the EMEA region getting only 7,000 MBA SKUs. With today's rumor going around, we would like to know if this is correct and if more SKUs will circulate. The Americas region could receive most of the MBA designs, and AIB partners will take care of other regions. Of course, we must wait for tomorrow's launch and see how AMD plans to execute its strategy.

Please excuse my bad English.

Former gaming PC: i5-4670K@stock (for now), 16GB RAM 1600 MHz and a GTX 1070

Current gaming PC: R5-7600, 32GB RAM 6000MT/s (CL30) and a RX 9060XT 16GB

Steam / Live / NNID : jonxiquet Add me if you want, but I'm a single player gamer.