AMD Ryzen 7950X/7900X/7700X/7600X stock Cinebench scores leaked

https://videocardz.com/newz/amd-ryzen-7950x-7900x-7700x-7600x-stock-cinebench-scores-leaked

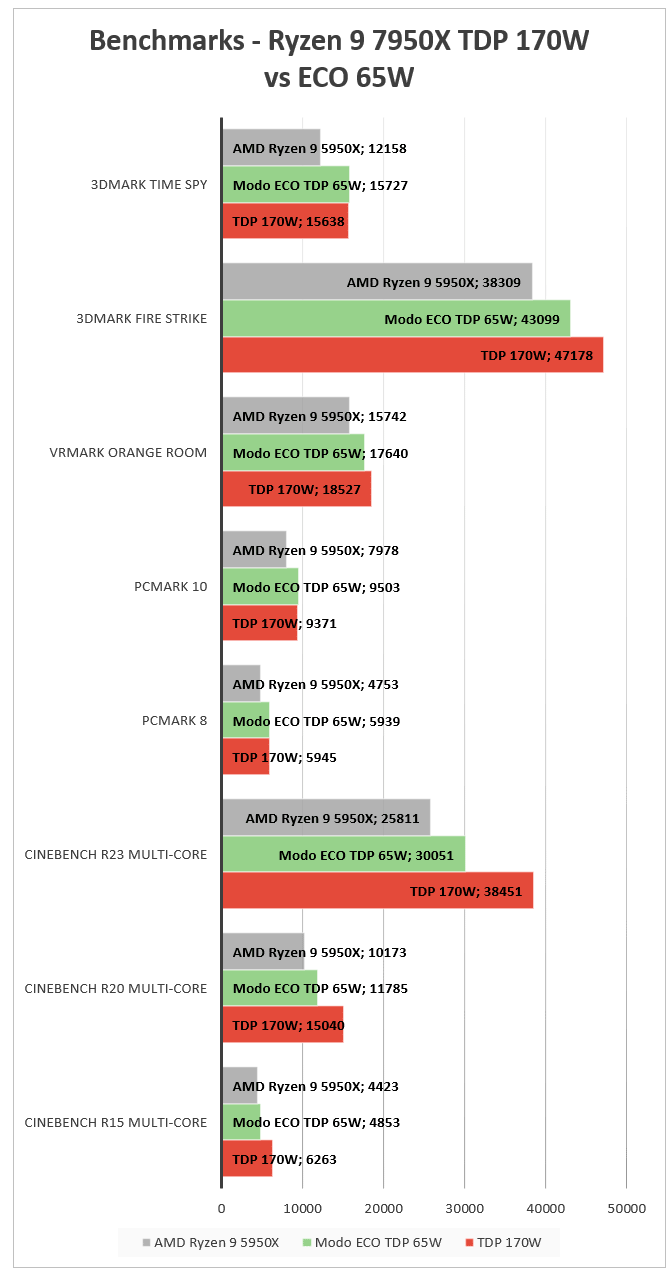

This certainly marks the end of the 5950X's dominance. That CPU held its own in multi-threaded runs against Intel's 10th, 11th and 12th gen while making a good portion of Intel's HEDT lineup irrelevant. While the jump to the 7950X certainly looks big enough, I'm personally going to keep waiting.

NVIDIA RTX 4090 Boosts Up to 2.8 GHz at Stock Playing Cyberpunk 2077, Temperatures around 55 °C

https://www.techpowerup.com/299175/nvidia-rtx-4090-boosts-up-to-2-8-ghz-at-stock-playing-cyberpunk-2077-temperatures-around-55-c

"At native resolution, the RTX 4090 scores 59 FPS (49 FPS at 1% lows), with a frame-time of 72 to 75 ms. With 100% GPU utilization, the card barely breaks a sweat, with GPU temperatures reported in the region of 50 to 55 °C. With DLSS 3 enabled, the game nearly doubles in frame-rate, to 119 FPS (1% lows), and an average latency of 53 ms. This is a net 2X gain in frame-rate with latency reduced by a third."

This is what I was referring to yesterday when I said that DLSS 3 latency should be lower than running the game natively. It's still not a 4K-native, max-RT card in this game, though; I suspect it may take the 6000 or 7000 series to hit 4K 60 FPS natively. At 55 °C there's a lot of thermal headroom for overclocking past 3 GHz, as long as Nvidia didn't lock down the power sliders.
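For anyone who wants to sanity-check the quoted numbers, here's a quick back-of-the-envelope sketch. It only uses the figures from the TechPowerUp excerpt (59 vs 119 FPS, 72 to 75 ms vs 53 ms) plus the 2.8 GHz boost and the 3 GHz overclock target mentioned above. One assumption worth flagging: at 59 FPS a single frame takes roughly 17 ms, so the "frame-time of 72 to 75 ms" in the quote is most likely end-to-end system latency rather than per-frame render time. The helper names are just made up for illustration.

```python
# Figures quoted in the TechPowerUp excerpt (Cyberpunk 2077, RTX 4090).
native_fps = 59
dlss3_fps = 119
native_latency_ms = (72, 75)  # quoted as "frame-time"; assumed to be system latency
dlss3_latency_ms = 53

boost_ghz = 2.8   # observed stock boost clock from the headline
oc_target_ghz = 3.0  # overclock target mentioned in the post


def speedup(before: float, after: float) -> float:
    """How many times larger 'after' is than 'before'."""
    return after / before


def reduction(before: float, after: float) -> float:
    """Fractional reduction going from 'before' to 'after'."""
    return (before - after) / before


def frame_time_ms(fps: float) -> float:
    """Time spent on a single frame at a given frame rate."""
    return 1000.0 / fps


fps_gain = speedup(native_fps, dlss3_fps)
latency_cuts = [reduction(lat, dlss3_latency_ms) for lat in native_latency_ms]
oc_headroom = speedup(boost_ghz, oc_target_ghz) - 1

print(f"Frame-rate gain: {fps_gain:.2f}x")
print("Latency reduction: " + ", ".join(f"{c:.0%}" for c in latency_cuts))
print(f"Per-frame time at {native_fps} FPS: {frame_time_ms(native_fps):.1f} ms")
print(f"Clock increase needed for {oc_target_ghz} GHz: {oc_headroom:.0%}")
```

By this math the "2X" frame-rate claim holds up (about 2.02x), while the latency cut works out to roughly 26% to 29%, a bit shy of a full third. Hitting 3 GHz would only need about a 7% bump over the observed 2.8 GHz boost.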

AYANEO 2 and GEEK Ryzen 7 6800U gaming consoles to launch in December, pricing from $949 to $1549

https://videocardz.com/newz/ayaneo-2-and-geek-ryzen-7-6800u-gaming-consoles-to-launch-in-december-pricing-from-949-to-1549

Very expensive considering what the GPD folks offer at a similar price.

Last edited by Jizz_Beard_thePirate - on 25 September 2022