DonFerrari said:

Trumpstyle said:

It's 9x 32-bit controllers at 16Gbps speed; this gives 576GB/s of memory bandwidth with 18GB of VRAM. The leak also says there will be an additional 4GB of DDR4 RAM.

Whether it's accurate or not, we'll see soon: it says Sony will put out a short trailer within two weeks and the reveal will be on 12 Feb. This leak was also removed from Reddit.

I noticed Nate4Drake missed the SSD speed (5GB/s).

|

New leak, and this time the PS5 is considerably stronger than the XSX in most areas: https://www.youtube.com/watch?v=StGUCieyvcg

|

This YouTuber is basing this on someone called Tommy Fiser at NeoGAF. He is just repeating what most people have been speculating about on the forums, like the PS5 CPU running at 3.2GHz and the Xbox Series X CPU at up to 3.6GHz. The faster-GPU claim comes from the verified insider saying the PS5 is more powerful.

I'm not gonna go through every point, but I think that no matter what, the Xbox Series X will have more TF than the PS5.

drkohler said:

Trumpstyle said:

It's 9x 32-bit controllers at 16Gbps speed

|

..........aaaaaand we're done.

GDDR controllers come in 64-bit chunks. The only weird setup would be eight 2GB GDDR chips plus two 1GB GDDR chips. That doesn't make sense at all: using different memory chips is just an unnecessary hassle to deal with for a measly 12% increase in size.

Why do people feel the need to toss "specs" around like there's no tomorrow? Sure, by sheer chance someone will get it right eventually, but why all this nonsense?

|

That leak is not from me and I don't believe it. I'm on 9.2TF for the PS5 (36 CUs, 2GHz) with a 256-bit bus and 16GB of VRAM, for a total of 512GB/s of memory bandwidth. But 11TF (40 CUs, 2.15GHz, 576GB/s of memory bandwidth), where Sony disables no CUs, is looking very strong too, and I might lose this one.
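For anyone who wants to check the numbers, here's a quick Python sketch of the usual peak-throughput formulas. It assumes 64 shaders per RDNA CU and 2 FLOPs (one fused multiply-add) per shader per clock; `tflops` and `bandwidth_gbs` are just illustrative helpers, not anything official. Note that 576GB/s on a 256-bit bus implies 18Gbps memory, which is my inference rather than something stated above.

```python
# Back-of-the-envelope peak numbers for the rumoured PS5 configurations.
# Assumption: 64 shader ALUs per CU, each doing one FMA (2 FLOPs) per clock.
SHADERS_PER_CU = 64
FLOPS_PER_SHADER_PER_CLOCK = 2


def tflops(cus: int, clock_ghz: float) -> float:
    """Peak FP32 throughput in TFLOPS."""
    return cus * SHADERS_PER_CU * FLOPS_PER_SHADER_PER_CLOCK * clock_ghz / 1000


def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin data rate, bits -> bytes."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    print(f"36 CU @ 2.00 GHz: {tflops(36, 2.0):.2f} TF")           # 9.22
    print(f"40 CU @ 2.15 GHz: {tflops(40, 2.15):.2f} TF")          # 11.01
    print(f"256-bit @ 16 Gbps: {bandwidth_gbs(256, 16):.0f} GB/s")  # 512
    print(f"256-bit @ 18 Gbps: {bandwidth_gbs(256, 18):.0f} GB/s")  # 576
    # The leak's nine 32-bit controllers form a 288-bit bus.
    print(f"9 x 32-bit @ 16 Gbps: {bandwidth_gbs(9 * 32, 16):.0f} GB/s")  # 576
```

So the leak's 288-bit setup and a 256-bit bus with faster 18Gbps chips land on the same 576GB/s.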

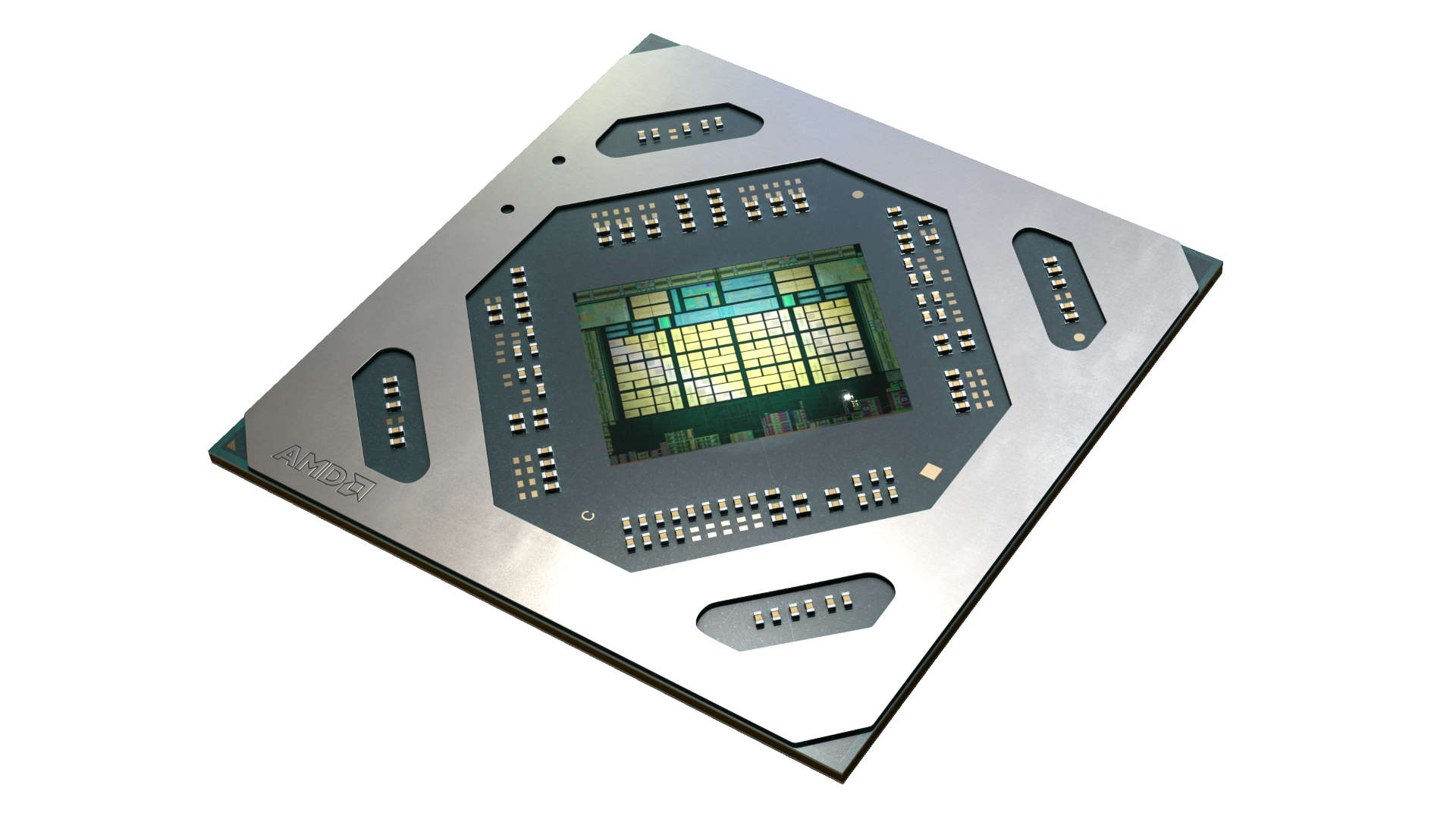

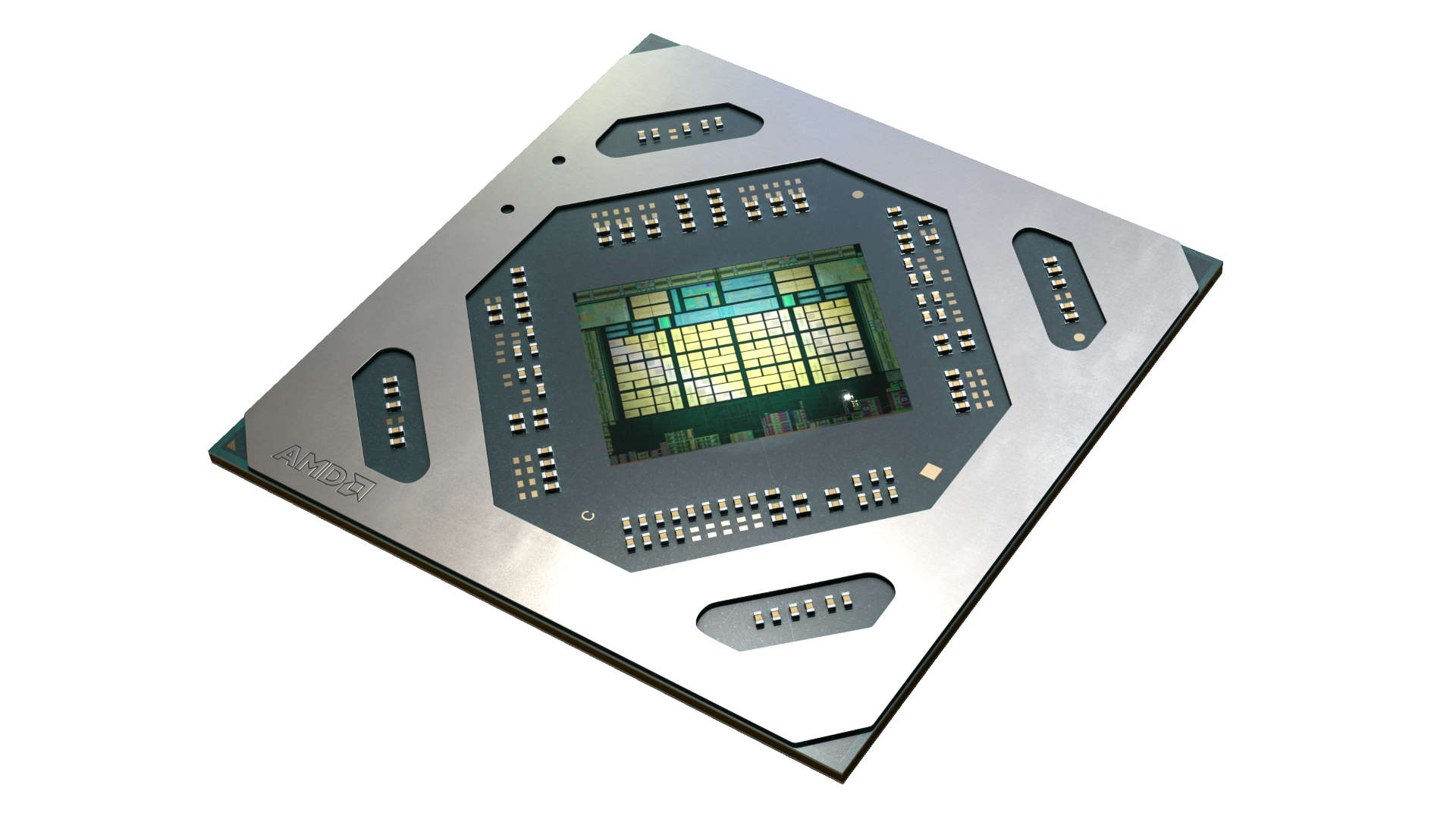

As for 32-bit controllers, I'm sure Navi14 has four of them; you can see that clearly in this picture.

But when you're building an SoC/APU you want a perfect square/rectangle, and nine memory controllers just doesn't make sense. You can also do 18GB of VRAM on a 256-bit bus by having two GDDR6 chips on each of three 64-bit controllers and four GDDR6 chips on the last 64-bit controller.
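The capacity side of the 18GB setups can be sketched the same way. This assumes every GDDR6 chip has its own 32-bit slice of the bus (no clamshell mode), so it does not model the uneven 256-bit arrangement described above; `layout` is a hypothetical helper, and the 2GB-per-chip size for the nine-controller leak is inferred from 18GB / 9.

```python
# Capacity and bus-width arithmetic for the 18 GB layouts in this thread.
# Assumption: each GDDR6 chip sits on its own 32-bit slice of the bus
# (no clamshell mode), so bus width = number of chips x 32 bits.
GDDR6_CHIP_WIDTH_BITS = 32


def layout(chip_sizes_gb: list[int]) -> tuple[int, int]:
    """Return (total capacity in GB, bus width in bits) for a list of chip sizes."""
    return sum(chip_sizes_gb), len(chip_sizes_gb) * GDDR6_CHIP_WIDTH_BITS


if __name__ == "__main__":
    baseline = [2] * 8         # 8 x 2 GB: 16 GB on a 256-bit bus
    leak = [2] * 9             # 9 x 2 GB: one chip per 32-bit controller
    mixed = [2] * 8 + [1] * 2  # 8 x 2 GB + 2 x 1 GB mixed-density setup

    base_gb, _ = layout(baseline)
    for name, chips in [("baseline", baseline), ("leak", leak), ("mixed", mixed)]:
        gb, bits = layout(chips)
        extra = (gb / base_gb - 1) * 100  # size increase over 16 GB, in percent
        print(f"{name}: {gb} GB on {bits}-bit, +{extra:.1f}% vs 16 GB")
```

Both 18GB options come out 12.5% bigger than 16GB, which is where the "measly 12%" comes from.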

6x Master League achiever in StarCraft II

Beaten Sigrun on God of War mode

Beaten DOOM Ultra-Nightmare with NO endless ammo rune, 2x super shotgun and no decoys on PS4 Pro.

1-0 against Grubby on the WC3 Frozen Throne ladder!!