DICE was present at the recently concluded GDC, where the company’s Graham Wihlidal spoke about how developers can process triangles and geometry efficiently in games, thereby improving performance. The presentation focuses mostly on culling, the process of discarding objects of any kind, such as triangles and pixels, that will not be part of the final image. In short, a good culling process can improve performance, especially on the PS4 and Xbox One, whose CPUs are already quite outdated compared to current tech. This is especially true if culling is carried out entirely on the CPU, although GPU-assisted processes are available.
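To make the idea of culling concrete, here is a minimal sketch of one common test: dropping back-facing and zero-area triangles in screen space. It is not taken from DICE's slides; the function names, the 2D vertex layout, and the counter-clockwise front-face convention are all assumptions chosen for illustration.

```python
def signed_area_2d(a, b, c):
    """Return twice the signed area of screen-space triangle (a, b, c).

    Positive means counter-clockwise winding, negative means clockwise,
    zero means the triangle is degenerate (no area).
    """
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def cull_triangles(vertices, indices):
    """Return a compacted index list holding only the surviving triangles.

    Assumption: counter-clockwise triangles are front-facing. Triangles
    that are back-facing or have zero area cannot contribute pixels to
    the final image, so they are skipped.
    """
    kept = []
    for i in range(0, len(indices) - 2, 3):
        tri = indices[i:i + 3]
        a, b, c = (vertices[j] for j in tri)
        if signed_area_2d(a, b, c) > 0:
            kept.extend(tri)
    return kept


if __name__ == "__main__":
    verts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    # One front-facing, one back-facing, and one degenerate triangle.
    idx = [0, 1, 2, 0, 2, 1, 0, 1, 1]
    print(cull_triangles(verts, idx))
```

The point of producing a compacted index list is that every later stage only touches triangles that can actually end up on screen.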

In addition to covering culling on current-gen consoles, DICE also revealed some interesting new information about the PS4 and Xbox One, along with details that are already known. It’s fairly well known at this point that the PS4 and Xbox One have similar CPU clock speeds (though the Xbox One’s is slightly higher). Furthermore, we also know that the PS4 GPU has 18 compute units compared to 12 on the Xbox One, which results in more ALU operations per cycle on Sony’s machine. However, we did not know how many ALU cycles each GPU needs to render a triangle.

According to DICE, the figure for rendering a triangle is 768 ALU ops/cycle on the Xbox One GPU and 1017 on the PS4. The PS4 also takes the lead in the number of instructions that can be processed at a time: the Xbox One has a limit of 368 instructions, the PS4 508. The slides then go through a number of culling methods that can be used to improve performance. However, a couple of things caught our eye.

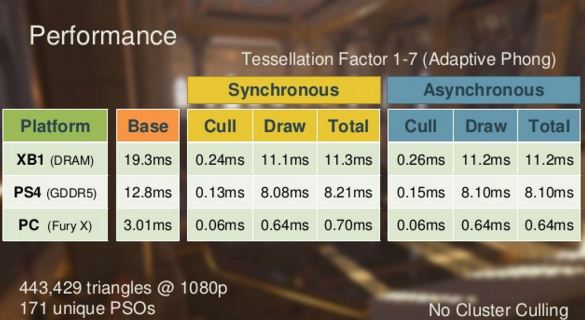

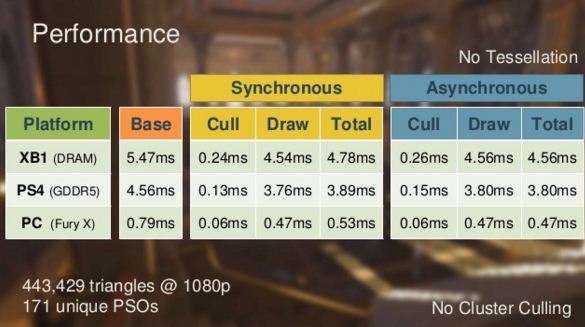

The slide above clearly shows the advantage of the PS4’s faster GDDR5 memory (with and without tessellation). Both the cull time and the actual draw time are noticeably lower on the PS4. Note that the test case here involves rendering 443,429 triangles at a full HD resolution of 1080p.

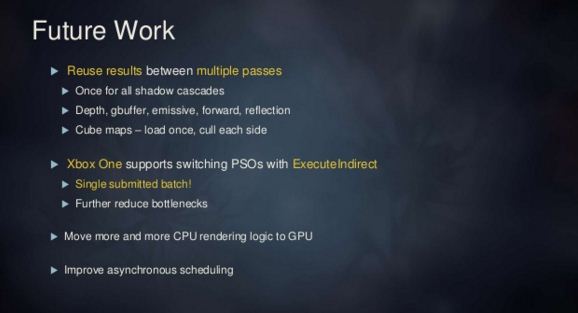

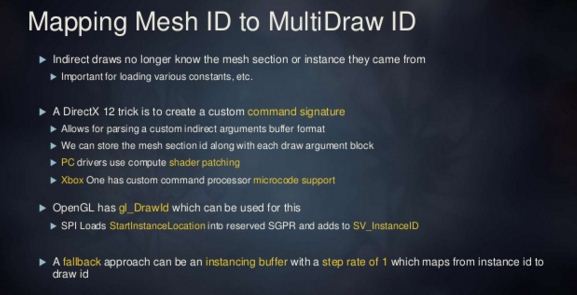

Furthermore, it was also revealed that the Xbox One supports ExecuteIndirect, a chain command from DX12 which we have talked about before. With it, only a single batch is required for rendering, which further improves performance. This also suggests that the Xbox One’s API is already pretty close to DX12, and most likely the two now share similar libraries. Another interesting revelation is the Xbox One’s custom command processor with microcode support. Generally speaking, microcode updates are used to change a processor’s low-level behavior, introducing stability and security fixes along with possible performance improvements. Whether Microsoft is already using this or plans to use it in the future is unknown at this point.
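The single-batch idea behind ExecuteIndirect can be sketched as a CPU-side simulation. This is not the D3D12 API and not DICE's implementation: the record fields mirror the layout of D3D12's indexed draw arguments, while `build_argument_buffer`, `execute_indirect`, the mesh dictionaries, and the `draw` callback are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class DrawIndexedArgs:
    """One draw record, modeled on D3D12's indexed draw argument layout."""
    index_count_per_instance: int
    instance_count: int
    start_index_location: int
    base_vertex_location: int
    start_instance_location: int


def build_argument_buffer(meshes, is_visible):
    """Culling pass (hypothetical): write one record per surviving mesh.

    Returns the compacted argument buffer and the number of records,
    which stands in for the count buffer an indirect call would read.
    """
    args = [
        DrawIndexedArgs(m["index_count"], 1, m["start_index"], m["base_vertex"], 0)
        for m in meshes
        if is_visible(m)
    ]
    return args, len(args)


def execute_indirect(argument_buffer, count, max_command_count, draw):
    """Single submission (hypothetical): consume up to `count` records.

    The caller makes one call; the loop over records happens on the
    consumer side rather than as one submission per draw.
    """
    issued = min(count, max_command_count)
    for args in argument_buffer[:issued]:
        draw(args)
    return issued


if __name__ == "__main__":
    meshes = [
        {"index_count": 36, "start_index": 0, "base_vertex": 0, "visible": True},
        {"index_count": 12, "start_index": 36, "base_vertex": 24, "visible": False},
        {"index_count": 60, "start_index": 48, "base_vertex": 32, "visible": True},
    ]
    buffer, count = build_argument_buffer(meshes, lambda m: m["visible"])
    drawn = []
    execute_indirect(buffer, count, max_command_count=16, draw=drawn.append)
    print(len(drawn), "draws issued from one submission")
```

In this sketch the culled mesh never produces a draw record at all, so the one submission covers only the geometry that survived culling.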

Read more at http://gamingbolt.com/ps4-and-xbox-one-gpu-performance-parameters-detailed-gddr5-vs-dram-benchmark-numbers-revealed#X9joPhzLeEXIZuRM.99
