ArchangelMadzz said:

This is only meaningful if devs can code to the metal and send direct commands to the gpu and cpu. |

They can. Apple has low-level APIs.

Soundwave said:

ArchangelMadzz said:

This is only meaningful if devs can code to the metal and send direct commands to the gpu and cpu. |

It must be fairly beastly though, Apple showed it editing three native 4K video clips simultaneously ... that's unreal. While of course they are made for different tasks, a PS3/360/Wii U would sputter and die trying to do that.

|

That's because of dedicated fixed-function hardware, not necessarily GPU or CPU grunt.

Fixed-function hardware is inherently more efficient and faster per transistor than programmable hardware at the one task it is built for, which is why mobile relies far more heavily upon it.

HoloDust said:

Eddie_Raja said:

The bandwidth is far more than any other tablet PC I have ever seen. It is almost as fast as the X1's bandwidth (64 GB/s).

But its compute capabilities will put it at nearly half the X1, if not a little more.

|

A9X is some 2x A8X when it comes to GPU, which puts it at somewhat under 500 GFLOPS FP32 - which is still way off XOne's 1.3 TFLOPS...unless you were referring to nVidia Tegra X1, which is rated at 512 GFLOPS.

|

There is more to performance than floating point arithmetic.

A 2 Teraflop GPU could be slower than a 1 Teraflop GPU.

Keep in mind that mobile GPUs tend to render games differently than console or PC GPUs. They tend to go with tile-based approaches because of the efficiency and power consumption edge.
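To make the tile-based idea a bit more concrete, here is a minimal sketch of the binning step: the screen is cut into small tiles and each triangle is assigned to the tiles its bounding box touches, so each tile can later be shaded out of fast on-chip memory. The 32-pixel tile size and the `bin_triangles` helper are assumptions for illustration, not how any particular PowerVR part actually does it.

```python
from collections import defaultdict

TILE = 32  # assumed tile size in pixels; real hardware varies


def bin_triangles(triangles, width, height, tile=TILE):
    """Map each tile (tx, ty) to the indices of triangles whose bounding box touches it."""
    bins = defaultdict(list)
    max_tx = (width - 1) // tile
    max_ty = (height - 1) // tile
    for index, tri in enumerate(triangles):
        xs = [x for x, _ in tri]
        ys = [y for _, y in tri]
        # Clamp the bounding box to the screen, then convert to tile coordinates.
        tx0 = max(0, int(min(xs)) // tile)
        tx1 = min(max_tx, int(max(xs)) // tile)
        ty0 = max(0, int(min(ys)) // tile)
        ty1 = min(max_ty, int(max(ys)) // tile)
        for ty in range(ty0, ty1 + 1):
            for tx in range(tx0, tx1 + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)


# Two small triangles on a 64x64 screen (2x2 tiles).
triangles = [
    [(0, 0), (10, 0), (0, 10)],      # sits entirely inside tile (0, 0)
    [(30, 30), (40, 30), (30, 40)],  # straddles all four tiles
]
bins = bin_triangles(triangles, 64, 64)
```

The bandwidth saving comes from the fact that each tile's colour and depth data only has to be written out to main memory once, after everything in that tile's bin has been processed.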

Eddie_Raja said:

HoloDust said:

A9X is some 2x A8X when it comes to GPU, which puts it at somewhat under 500 GFLOPS FP32 - which is still way off XOne's 1.3 TFLOPS...unless you were referring to nVidia Tegra X1, which is rated at 512 GFLOPS.

|

No, I am referring to the X1. Sure, its compute is nearly 1/3rd, but its bandwidth is 3/4+ that of the X1. People keep forgetting that bandwidth is half of the equation - it's what feeds the cores. The X1 is bandwidth-starved, and that is one of the main reasons it struggles to run so many games.

|

Bandwidth isn't half of the equation.

For one, you have compression both on the software side and hardware side to mitigate that.

Secondly... The Xbox One has less compute resources than the PlayStation 4, thus by extension its bandwidth requirements will also be lower.

The Xbox One will also heavily rely on a variation of tile-based rendering due to the eSRAM, much like the Xbox 360, which means bandwidth efficiency gains.

The PC and PS4 will likely brute-force, because they can.

Take a look at AMD's Fury GPU: despite having roughly 50% more memory bandwidth than nVidia's Titan X (512 GB/s versus about 336 GB/s), all that extra bandwidth essentially meant nothing at the end of the day.
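One way to put numbers on the compute-versus-bandwidth argument is a simple roofline estimate: attainable throughput is the smaller of peak compute and bandwidth multiplied by how many floating point operations the workload does per byte fetched. This is a rough sketch, not a benchmark. The GFLOPS and GB/s figures are the ones quoted in this thread (with 48 GB/s assumed for the A9X as 3/4 of 64), the eSRAM and compression are ignored, and the helper names are made up for illustration.

```python
def attainable_gflops(peak_gflops, bandwidth_gbs, flops_per_byte):
    """Roofline estimate: limited by either compute or memory traffic."""
    return min(peak_gflops, bandwidth_gbs * flops_per_byte)


def ridge_point(peak_gflops, bandwidth_gbs):
    """FLOPs per byte above which the chip stops being bandwidth-bound."""
    return peak_gflops / bandwidth_gbs


# Figures as quoted in the thread, not measured values.
XBOX_ONE = {"peak_gflops": 1300, "bandwidth_gbs": 64}
A9X = {"peak_gflops": 500, "bandwidth_gbs": 48}  # assumed: 3/4 of 64 GB/s

for flops_per_byte in (4, 32):
    x1 = attainable_gflops(flops_per_byte=flops_per_byte, **XBOX_ONE)
    a9x = attainable_gflops(flops_per_byte=flops_per_byte, **A9X)
    print(f"{flops_per_byte} FLOPs/byte: Xbox One {x1} GFLOPS, A9X {a9x} GFLOPS")
```

At a low 4 FLOPs per byte both chips are bandwidth-bound and the gap is only the bandwidth ratio; at 32 FLOPs per byte both hit their compute ceiling and the extra bandwidth stops mattering. Which regime a game lands in depends on the workload, which is why neither number alone settles it.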

Eddie_Raja said:

invetedlotus123 said:

Gaming is actually getting kind of big on tablets. I hope that power makes developers port their games to iPad. Heck, iPad Pro could actually handle GTA V better than many PCs with proper optimization. |

Well the iPad Pro is 2-3x stronger than last gen, and about half as strong as X1. So yes anything on X1 in 900p should run on the iPad in 540-720p just fine! Would be an amazing and game changing development honestly.

|

Not exactly.

You cannot do an apples-to-apples comparison... (See the pun?) Apple uses ARM; Microsoft uses x86. Apple typically uses PowerVR for the GPU and Microsoft uses AMD. RISC vs. CISC. Tile-based rendering vs. immediate-mode rendering.

Both have different degrees of fixed-function and programmable hardware capability, performance and efficiency.
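For reference, the pixel-count arithmetic behind the quoted "900p on X1, 540-720p on iPad" estimate can be sketched as below. It assumes performance scales linearly with the number of pixels rendered and that the iPad really is "half as strong", both of which are simplifications given the architectural differences just listed. The resolution table and helper names are illustrative only.

```python
# Common 16:9 render resolutions (width, height).
RESOLUTIONS = {
    "540p": (960, 540),
    "720p": (1280, 720),
    "900p": (1600, 900),
}


def pixels(name):
    """Total pixels per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_budget(source, relative_power):
    """Pixels per frame affordable if cost scaled linearly with pixel count (assumption)."""
    return int(pixels(source) * relative_power)


budget = pixel_budget("900p", 0.5)  # "about half as strong as X1"
for name in ("540p", "720p"):
    verdict = "fits" if pixels(name) <= budget else "over budget"
    print(f"{name}: {pixels(name):,} pixels vs budget {budget:,} -> {verdict}")
```

Under that naive scaling, half of 900p is 720,000 pixels per frame, which covers 540p (518,400) but falls short of 720p (921,600).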

LurkerJ said:

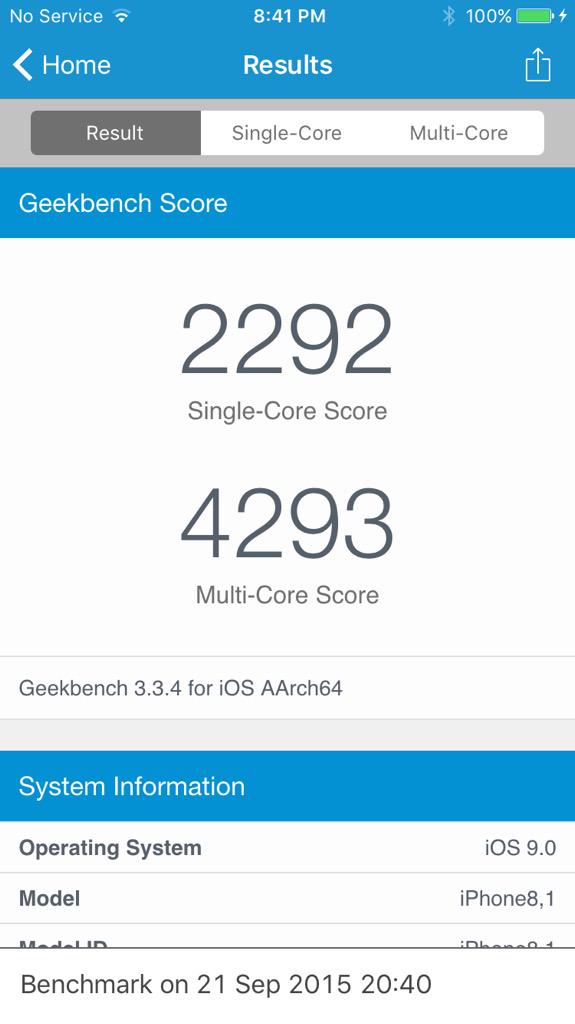

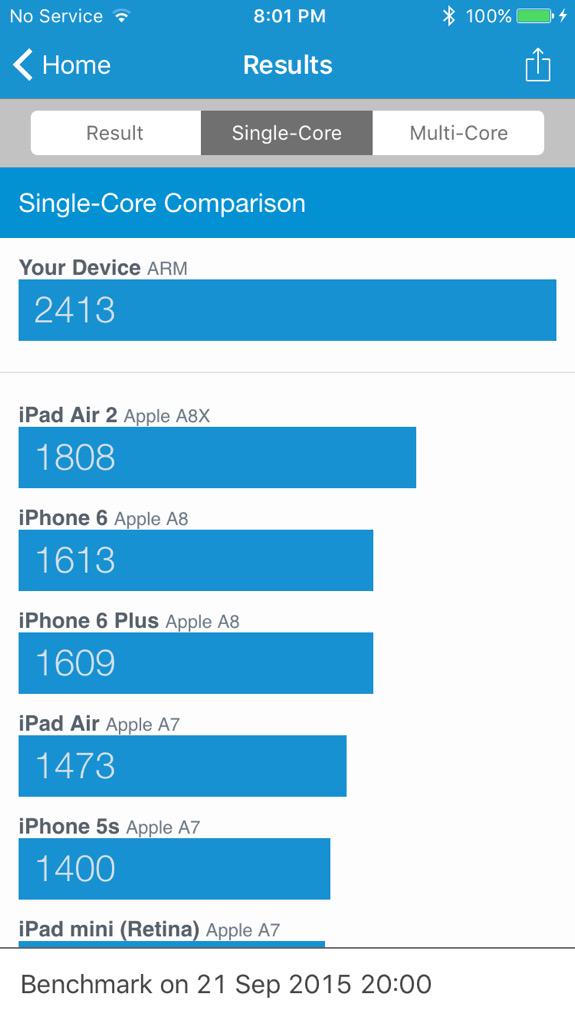

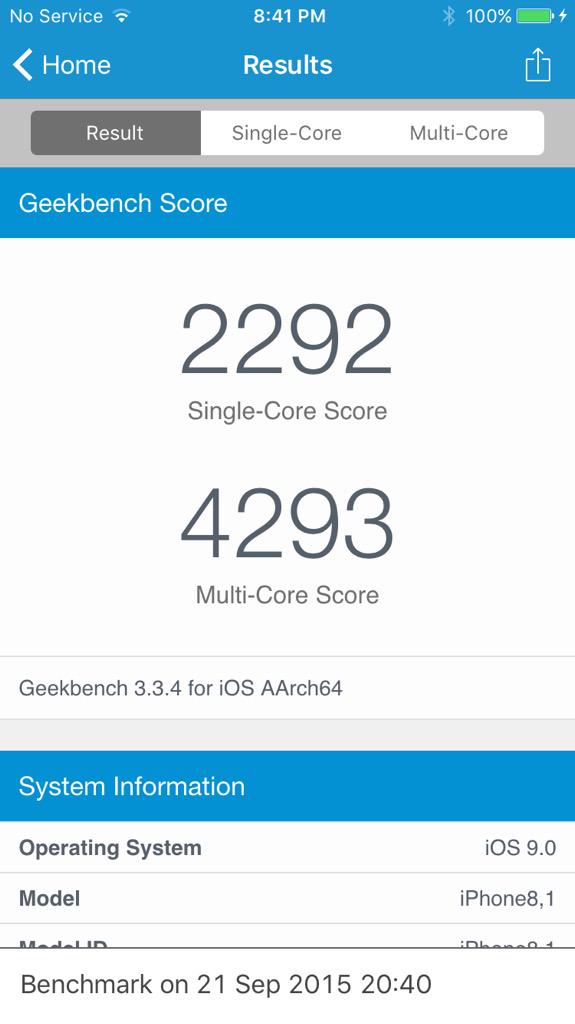

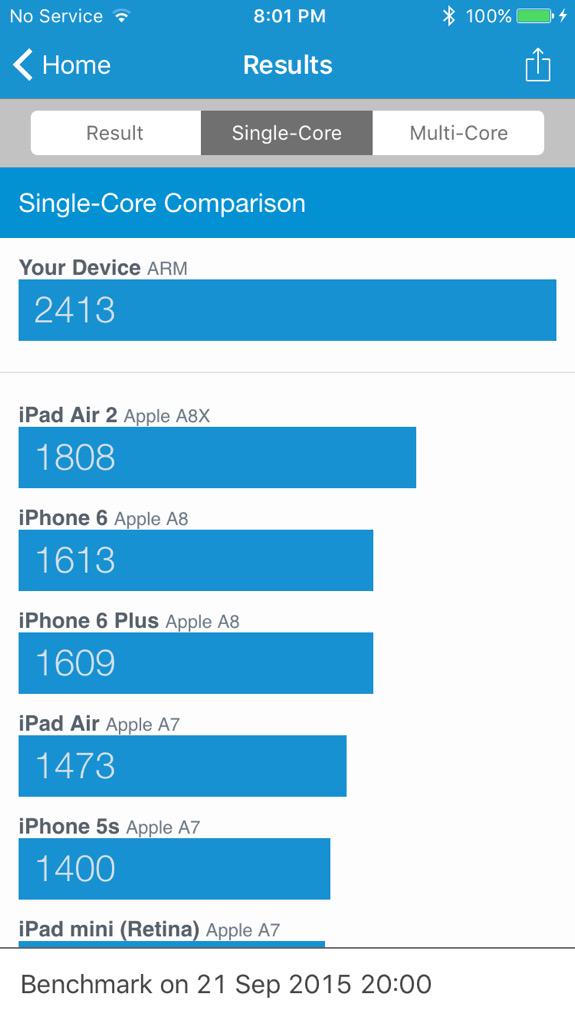

The A9 processor destroys the competition with only 2 cores. It outperforms the unmatched A8X processor too, but that one is used in a device with a 6,000 mAh battery. The A9 is using a 1,715 mAh battery to power through the day with Siri "always-on-always-listening". Reviews state the battery life of the new iPhone is unaffected by any of that. To put it mildly, A9 power efficiency is remarkable!

Mobile chips are one of the most exciting technologies to follow these days.

|

Indeed.

Mostly because Intel has already picked most of the low-hanging performance-per-watt fruit and AMD hasn't been competitive.

To put it all in perspective though... Intel was able to scale down Atom (Medfield) so that a single-core Atom was able to beat two ARM Cortex-A9 cores.

The successor to that was able to keep up with the iPhone 5s, which the iPhone 6 beats by a good 10-25%.

AMD's Jaguar is faster than Intel's Atom on a core-to-core, clock-to-clock basis by a fairly chunky amount at the expense of consuming a bit more energy... And it has a lot more of those cores.

So it's easy to see that the 8-core chips in the consoles still have an edge, even if only 6 are used for gaming...

I still wish that console manufacturers would take CPU performance seriously for once though.

silvergunner said:

The Wii U has a triple-core G3 processor (nearly unchanged since 1998) clocked at 1.2 GHz per core and running 32-bit code. The A7 in the iPhone 5S has dual 1.4 GHz 64-bit cores. In single-threaded applications (games) this outperforms the ancient PowerPC by a long way. In multithreaded applications (operating system services) it matches if not exceeds its performance.

There's also the question of heat and clock speed. The Wii U's processor requires a cooling system (heatsink and fans) while the A-series can run without one by underclocking. If you attached a cooling system to the A7 you could possibly (in theory) push it to nearly 2 GHz. Additionally, the A-series has a 64-bit address bus (meaning in theory it can support more than 4 GB of RAM + ROM) while the G3 is only 32-bit (maximum 4 GB of RAM + ROM). It's one reason why the Wii U had no more than 2 GB of RAM - with the other half of the address bus dedicated to ROM and I/O they couldn't add any more memory to it. |

You mean Power7 with its own twist, rather than merely a "G3" clone with higher clocks. There have been multiple changes to the die so that it can be fabricated at 45 nm and run at much higher clocks.

Also, you can have a 32-bit processor and more than 4 GB of memory.

PAE can extend physical addressing to 36 bits (or more!), which allows 64 GB of RAM on Windows machines, even with a 32-bit processor.

The 32-bit version of Windows Server 2003, for example, can have 128 GB of RAM.

Besides, the limit isn't actually a RAM limit, it's an address space limit. There is a substantial difference.
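The arithmetic is easy to check. A minimal sketch, assuming byte-addressable memory: the number of address bits sets the size of the address space, and RAM is just one of the things mapped into it. The `usable_ram_gib` helper and the 1 GiB reservation in the example are hypothetical, purely to show the difference between address space and RAM.

```python
GIB = 1 << 30  # bytes in one gibibyte


def address_space_gib(address_bits):
    """Size of a byte-addressable address space with the given number of address bits."""
    return (1 << address_bits) // GIB


def usable_ram_gib(address_bits, reserved_gib):
    """RAM that still fits once part of the space is reserved for ROM/I/O (hypothetical split)."""
    return address_space_gib(address_bits) - reserved_gib


for bits in (32, 36, 37):
    print(f"{bits}-bit addressing -> {address_space_gib(bits)} GiB of address space")

# Example: a 32-bit space with 1 GiB set aside for device mappings.
print(usable_ram_gib(32, 1), "GiB left for RAM")
```

So 32 bits gives 4 GiB of address space, 36 bits gives 64 GiB and 37 bits gives 128 GiB, while how much of that ends up as usable RAM depends on what else the platform maps into the same space.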