Fully Connected 16-Pin Connector On The NVIDIA GeForce RTX 4090 Ends Up Melting Too

The shitty connector cases continue

GALAX’s Monster GeForce RTX 4090 HOF Graphics Card Breaks 20 GPU OC World Records

With two of those connectors, I'd hope so

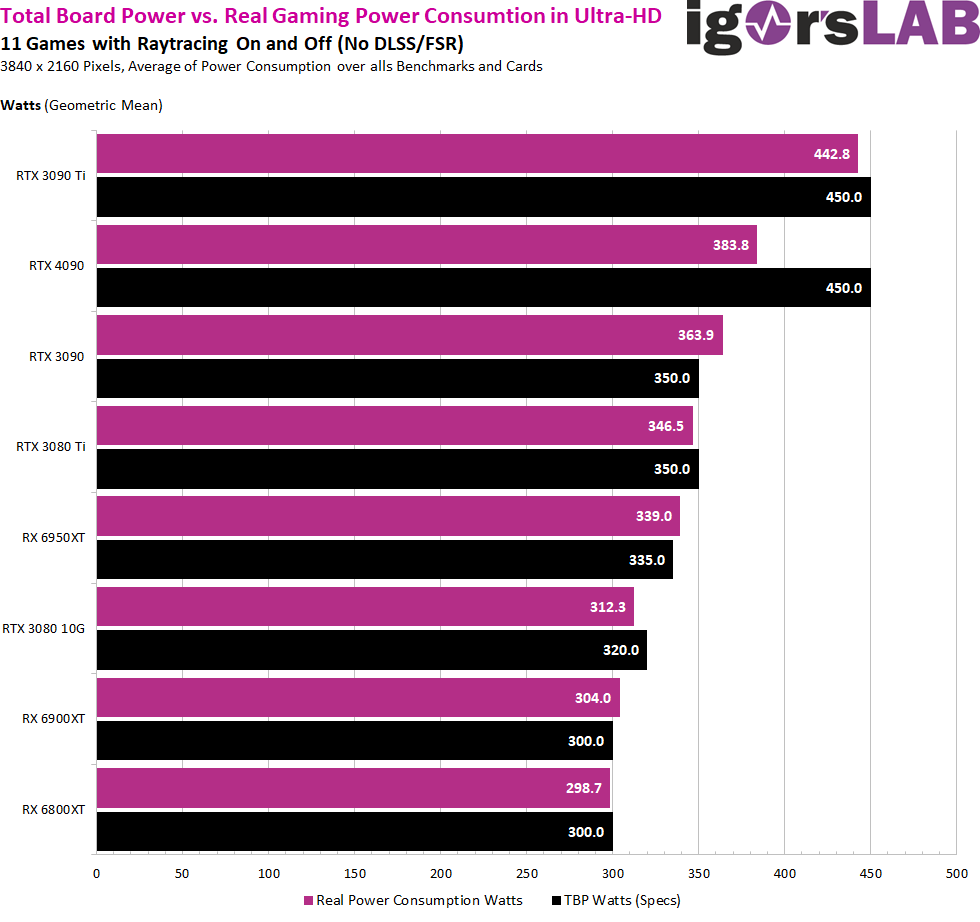

Total Board Power (TBP) vs. real power consumption and the inadequacy of software tools vs. real measurements

While 4090s are rated for 450 watts, in games they generally run at 388 watts, which is well below that rating. Idk why Nvidia felt the need to go to 450 watts at all tbh.

PC Specs: CPU: 7800X3D || GPU: Strix 4090 || RAM: 32GB DDR5 6000 || Main SSD: WD 2TB SN850