Pemalite said:

| The PlayStation 4 and Xbox One certainly do use different APIs.

Microsoft and Sony don't even use DirectX or OpenGL for their low-level APIs, as those are inefficient high-level APIs; lower-budget or less demanding games will target OpenGL/DirectX because they are easy to interface and work with.

But the hardware still skins the cat exactly the same. They aren't rendering things differently; the PlayStation 4's GPU, despite not having DirectX, is still a DirectX-compliant part, and developers recognize and work with that.

|

And in reality the PS4 has its own tools and never used Xbox or Microsoft tools, because of legal reasons among other things. DirectX-compliant or not, it's not DirectX (far from it); the two are just compatible and easy to port between.

Even the PS4 and Xbox One have different methods for streaming assets from memory, with the PS4 using GDDR5 while the Xbox One used ESRAM and DDR3.

And we still don't know how far the customization goes for each console, or how big the differences are, even if they are using the exact same chip.

Obviously a dumbed down explanation in order not to confuse those with less technical backgrounds.

In saying that, it isn't some magical "full metal optimization".

Playstation 5 will be an architectural deviation from the Playstation 4, thus software level control is going to play a key part. |

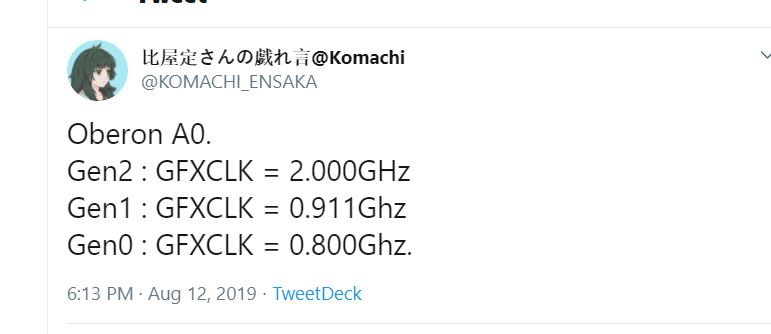

Of course it will use software as well, but it's more traditional and less complicated than you might think. It's not even on the same level as emulating console games on PC (PS2, PS3 emulation); it's more a matter of hardware compatibility. Both leaks confirmed that the PS5 APU will run at different clock speeds depending on the content it is running. The logical explanation is that PS5 backward compatibility requires the APU to run at a certain speed to match the console it emulates.

|

Sony also doesn't need it. Sony is relying on the *nix community.

If you think only clock speed is needed to retain compatibility... well, you are highly mistaken.

|

They will combine software and hardware methods, but going by how the PS4 Pro handles PS4 games, and by the benchmark leak we got along with the patent about backward compatibility, in my opinion the PS5, or Sony in general, is handling backward compatibility with a brute-force method.

|

AMD does have Ray Tracing. AMD has had Ray Tracing capability for years; Ray Tracing is inherently limited by compute capabilities. You can do Ray Tracing on an Xbox 360, it just wouldn't be ideal...

nVidia's approach is different; they instead spent a large chunk of their transistor budget on specialized low-precision floating point cores to handle the task.

But that isn't the only approach that can be taken, and we don't know yet if it's even the right answer; you can do integer ray tracing, for example.

Now would Microsoft be begging nVidia for Ray Tracing processing cores to put on their AMD hardware? Absolutely not.

https://devblogs.nvidia.com/nvidia-turing-architecture-in-depth/

At the end of the day, the only evidence we have for anything is that RDNA2/Scarlett/PlayStation 5 will feature Ray Tracing "cores". Everything else is just assertions without evidence.

https://www.guru3d.com/news-story/geasrs-5-developper-mentions-dedicated-raytracing-cores-from-amd-in-next-gen-xbox.html

|

I know. Also, I never said Microsoft will fully copy Nvidia's methods. They are just using the experience gained from applying their API to Nvidia RTX to create their own solution, and there are many methods available for ray tracing.

The article you mentioned never said or confirmed that RDNA will have ray tracing. It only confirmed that Xbox Scarlett, or the next generation, will have ray tracing at the hardware level; it could be using an AMD solution or even outside IP (PowerVR, Microsoft, etc.).

|

Ultimately it doesn't matter. The PlayStation 5 will be built to adhere to Microsoft's DirectX specification anyway; that is just reality since Sony decided to adopt commodity PC components.

|

I get what you mean, it's still "DirectX compliant". But it's far from DirectX. It may have some similarities, but it's still different. You can say Vulkan can run games on PC that also run on the DirectX API, but in reality Vulkan =/= DirectX. Or take a server that uses PC parts but in reality is not a consumer PC. Even if Xbox Scarlett can run what the PS5 can run, in the end the results will decide which one is simpler, more effective, and does the job better. That explains why, even if they are using the same RDNA, it doesn't mean they are the same.

The same goes for the PC, PS4 and Xbox comparison. Indeed they are using PC parts, but using PC parts does not make them equal to a desktop or laptop PC, or vice versa. A PC is a personal computer that uses the DirectX API to run games, and the whole device is purposely made for many aspects of life (work, entertainment, study, etc.), while consoles are 90% for gaming and the rest is just entertainment.

|

You are clearly missing the point again.

They could have opted for AMD's prior implementation of Tessellation rather than one that adhered to the DirectX specification, but they didn't, because what is the point? Why would you waste your time and money trying to reinvent the wheel when there is already a standardized design?

RDNA does have Ray Tracing, all GCN GPUs have Ray Tracing, they just don't have Ray Tracing "cores".

AMD has a patent on a Ray Tracing implementation, so if you think they have been standing around and doing nothing for years... Well.

https://www.tomshardware.com/news/amd-patents-hybrid-ray-tracing-solution,39761.html

Will RDNA2 have Ray Tracing Cores? We don't know yet. We don't have the evidence to support or deny such a thing at this point, so you are right there.

|

What happened with the PS4 might be different from what happens with the PS5 in 2020. We have a different situation today. We still don't know why Sony did not use AMD's tessellation solution for the PS4, nor has any Sony engineer explained it. They might opt for AMD's solution this time, or if it does not meet their requirements (price, performance, time, etc.), Sony will probably use another method.

OK, like I said, I know GCN can do ray tracing; it just needs compute units to do the calculation. Even the PS3 can do ray tracing. The problem is that currently no RDNA design available on the market has dedicated cores for ray tracing. Nor do we know if RDNA 2 will use the patent you mentioned, and even if RDNA 2 comes with dedicated ray tracing, we still don't know if the PS5 and Scarlett will use RDNA 2 for their GPUs. But of course a custom RDNA for the PS5 or Scarlett could use some of the tech from RDNA 2.
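To show what "it just needs compute" means, here is a rough sketch of the core ray tracing operation, a ray-sphere intersection test, in plain Python. It is only an illustration that the math is ordinary arithmetic any processor can run; the function name and the test scene are my own made-up example, not anything from AMD, Nvidia or a console SDK.

```python
import math

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    Hypothetical helper for illustration: solves |o + t*d - c|^2 = r^2 for t.
    """
    # Vector from the sphere centre to the ray origin.
    ox, oy, oz = (origin[i] - center[i] for i in range(3))
    dx, dy, dz = direction

    # Coefficients of the quadratic a*t^2 + b*t + c = 0.
    a = dx * dx + dy * dy + dz * dz
    b = 2.0 * (ox * dx + oy * dy + oz * dz)
    c = ox * ox + oy * oy + oz * oz - radius * radius

    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # the ray misses the sphere entirely

    sqrt_disc = math.sqrt(disc)
    t_near = (-b - sqrt_disc) / (2 * a)
    t_far = (-b + sqrt_disc) / (2 * a)

    # Only hits in front of the ray origin count.
    if t_near > 0:
        return t_near
    if t_far > 0:
        return t_far
    return None

# A ray shot down the z axis at a unit sphere centred 5 units away.
print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1))  # 4.0
```

A real renderer repeats a test like this for millions of rays per frame against far more complex geometry, which is why dedicated hardware is about speed rather than about whether it can be done at all.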

|

The API is irrelevant when the GPU designs are already built and adhere to industry standards; the GPU is still doing the same task.

|

They are doing the same task... with different methods and solutions.

|

Read Prior. RDNA has Ray Tracing support.

|

Not dedicated ray tracing cores on the same level as RTX. Even GCN-based GPUs already support ray tracing.

|

Those "special chips" were still built on established designs for another market (The PC).

|

That's true, no one said otherwise. But not every custom chip design comes to market or makes it to PC consumers. We might have PC GPUs on the market that share some similarities with console GPUs and can do the same, better or worse. But not all customizations are needed on PC. For example, we still don't have an AMD APU motherboard with GDDR5 as main memory; instead we are still using DDR3 and, most recently, DDR4.

|

Citation Needed.

I would need to see an AMD chip with nVidia's tensor cores integrated to believe it.

|

OK, that's my mistake. It seems the Surface does not have dedicated tensor cores or other dedicated cores inside. But AMD does have a semi-custom design built for the Surface: https://community.amd.com/community/amd-business/blog/2019/10/02/microsoft-takes-pole-position-in-laptops-based-on-amd-technology

I mean, checkerboard rendering can be emulated in software; no one can argue with that. Rainbow Six Siege is built on that tech, but there it is done in software on top of conventional brute-force rendering, as usually happens with PC GPUs, and can be enabled as we like. What I need to see is a built-in checkerboard instruction on Polaris, because at the software level even Nvidia GPUs can run Rainbow Six Siege with checkerboarding.
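As a rough idea of what the software-only version looks like, here is a very simplified sketch: only half of the pixels are rendered in a checkerboard pattern, and the missing ones are filled in from their rendered neighbours. This is my own toy example (the function name and the plain averaging are assumptions); real implementations also reuse the previous frame and motion vectors, and I am not claiming this is what Rainbow Six Siege or any console actually does.

```python
def checkerboard_reconstruct(image, parity):
    """Fill in the pixels that were skipped in a checkerboard-rendered frame.

    image:  2D list of brightness values; pixels where (x + y) % 2 == parity
            were rendered, the others hold None.
    parity: 0 or 1, which half of the checkerboard was rendered this frame.
    """
    height = len(image)
    width = len(image[0])
    out = [row[:] for row in image]

    for y in range(height):
        for x in range(width):
            if (x + y) % 2 == parity:
                continue  # this pixel was actually rendered, keep it

            # The four direct neighbours of a skipped pixel always lie on
            # the rendered half of the checkerboard.
            neighbours = [
                image[ny][nx]
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < height and 0 <= nx < width
            ]
            if neighbours:
                out[y][x] = sum(neighbours) / len(neighbours)

    return out

# 2x2 frame where only the (0,0) and (1,1) pixels were rendered.
frame = [[10, None],
         [None, 30]]
print(checkerboard_reconstruct(frame, 0))  # [[10, 20.0], [20.0, 30]]
```

Nothing here needs special hardware, which is the point: any GPU can run this kind of reconstruction in a shader, so the open question is only whether a chip has extra built-in support that makes it cheaper.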

No one builds games to the metal anymore, no one builds games in pure assembly anymore.

You would most certainly be able to build games to the metal if you had access to it on any console; it's just pointless.

|

First-party studios and exclusives do this, especially Sony's. It's not pointless. Middleware is good if you want a general look and a decent outcome at relatively low cost, but special optimization is much better in every aspect except that it is time-consuming.

|

But we don't know if they are using PowerVR's I.P.

|

I am just guessing here; it's just my opinion and my deduction based on the name "Prospero", a wizard. PowerVR Wizard fits the name and the current situation regarding ray tracing. But if AMD indeed has a real, concrete solution for dedicated RT cores, then they might be using AMD's.

|