Pemalite said:

megafenix said:

Then why keep the old 2008 interpolators, when AMD said back in 2009 that they removed them for the HD 5000 series because they were causing the RV770 to underperform, and decided to leave interpolation to the stream cores?

The HD 5000 and HD 4000 aren't that different. The architecture is largely the same with minor changes and upgrades, but one of the biggest changes was the removal of the interpolators and the addition of DirectX 11 support. Remove the speculated interpolators from the NeoGAF analysis and tell me how many stream cores you would get for the Wii U?

And it's not just the Candle developers who revealed that the Wii U is capable of DirectX 11 features or Shader Model 5 with their custom API; we also have the Giana Sisters and Armillo developers and many others.

|

The interpolators didn't under-perform.

They were a waste of die-space that could be better used for something else.

The Radeon 4000 series GPUs were stupidly potent per transistor, as nVidia quickly found out.

Interpolation was moved onto the shaders, again for efficiency: the transistors spent on that fixed-function unit could be better used for more shaders that handle all sorts of data sets.

megafenix said:

The rumor comes from this NeoGAF post examining the system’s base specs, which has not been publicly disclosed by Nintendo, but is speculated here at 160 ALUs, 8 TMUs, and 8 ROPs. The poster then tried to collect information from older interviews to clarify how they got to these specs. Taking tidbits from another forum thread about earlier Wii U prototypes, an interview with Vigil Games, and the Iwata Asks on the Wii U, the poster came to the conclusion that Nintendo must have lowered the system’s power specs to fit a smaller case.

Unfortunately, considering the process of making launch games for new consoles is a secretive and difficult process, I think there is too little information publicly available to really make these kinds of calls. Even if we take into account developers breaking NDAs, each group involved with a system launch really only has a limited amount of information. Developer kits themselves are expected to get tweaked as the specs get finalized, and the only people who would really know are too far up in the industry to say anything.

|

So by your own admission the specs you come up with are also not disclosed by Nintendo and should also be taken with a truck full of salt, good to know.

megafenix said:

Sorry dude, the PS4 and Xbox One are also more efficient and more modern, and despite that they can't even do 1080p 60fps in their ports, even with all those advantages and even though they exceed the raw power required for that. So why would you expect the Wii U to do any better with 160 stream cores?

|

Let's take a look at all the multiplatform games then, shall we?

1) Battlefield 4. - Xbox One and PlayStation 4 doing "High" equivalent PC settings, rather than Ultra. (Wii U?)

2) Call of Duty: Ghosts. - Runs on a toaster; minor visual improvements on the next-gen twins and the PC, mostly just superficial, which is to be expected with such short development cycles and an iterative release schedule designed to take as much money from the consumer as possible with the smallest amount of expenditure.

3) Assassin's Creed: Black Flag. - Minor improvements; it mostly just matches the PC version.

Xbox 360/PlayStation 3 did "Low" equivalent settings at 720p@30fps in Battlefield 4.

There is a stupidly large *night and day* difference that only PC and next-generation console gamers can genuinely appreciate; the fact that you don't think there is a next-gen difference is pretty telling.

megafenix said:

The Wii U die size is also about 96mm² even without the eDRAM and other stuff, and that's enough to hold 400 stream cores, 20 TMUs and 8 ROPs, just like the Redwood XT, which could be about 94mm² going by a Chipworks photo instead of the 104mm² reported by AnandTech, because the Wii U GPU die size was reduced from the 156mm² reported by AnandTech to 146mm² in the Chipworks photo.

All that efficiency goes to waste on a port. Shin'en already explained that your engine layout has to be different for the Wii U or you take a big performance hit, meaning that your 176 gigaflops go even lower because of this.

|

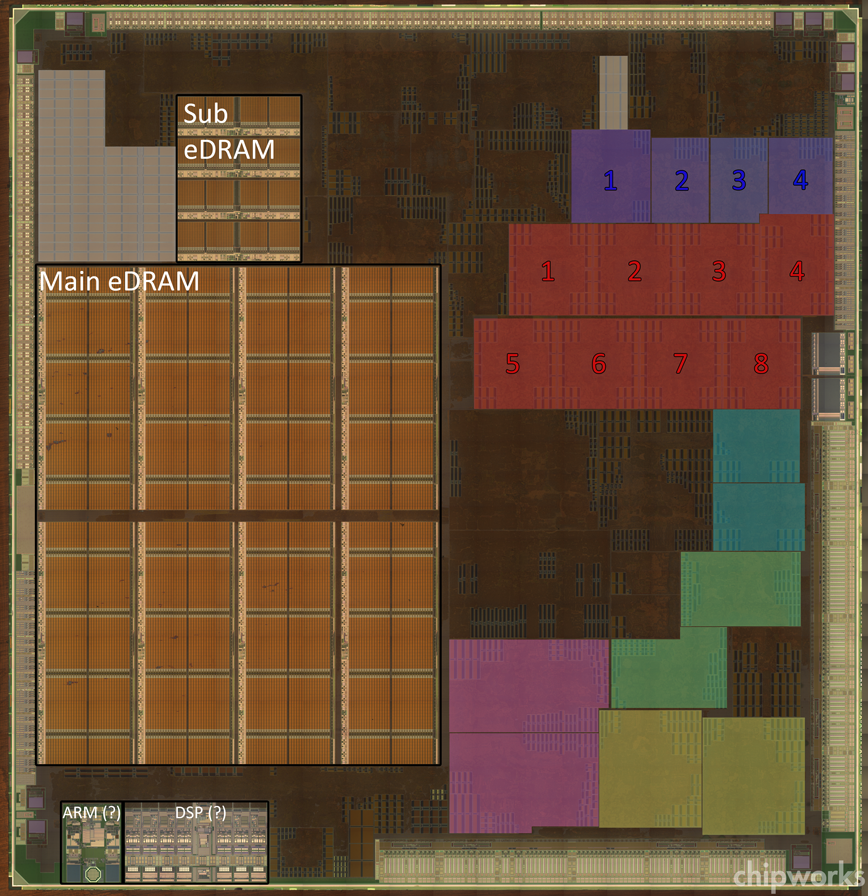

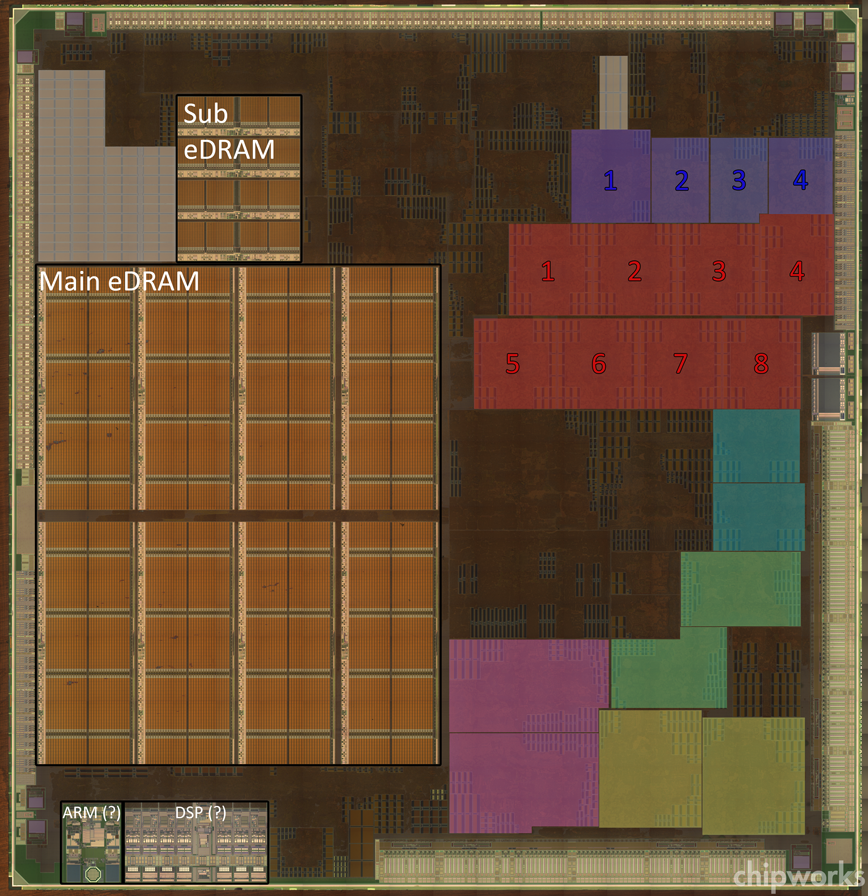

Wii U's GPU "Latte" is 146.48 mm², and only 85 mm² of that is purely available for the GPU.
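To put those two figures side by side, here is a quick sketch of how much of the die is left for everything that isn't GPU logic. It only uses the 146.48 mm² and 85 mm² numbers above; the function name is just for illustration.

```python
# Die-area figures quoted above (Chipworks die photo).
LATTE_DIE_MM2 = 146.48   # the whole "Latte" die
GPU_LOGIC_MM2 = 85.0     # area attributed purely to the GPU

def non_gpu_area(total_mm2: float, gpu_mm2: float) -> tuple[float, float]:
    """Return (area left for non-GPU parts, that area as a % of the whole die)."""
    rest = total_mm2 - gpu_mm2
    return rest, 100.0 * rest / total_mm2

rest, pct = non_gpu_area(LATTE_DIE_MM2, GPU_LOGIC_MM2)
print(f"{rest:.2f} mm^2 left for eDRAM, SRAM, ARM cores and I/O ({pct:.1f}% of the die)")
```

Roughly 61.5 mm², or about 42% of the die, goes to things that are not shader logic.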

What makes up the rest of the die? Well. That's simple.

There is a possibility that a dual-core ARM A8 processor exists on the die for OS/background/security tasks; an ARM7 core, for instance, should consume roughly 10-20 million transistors per core.

You then have an additional ARM A9 core for backwards compatibility.

Then an additional ARM DSP.

And don't forget the eDRAM.

Then you have a few megabytes as SRAM to be used as a cache.

Even more eDRAM for Wii backwards compatibility.

Then you need memory controllers and other tidbits for interoperability.

Yet you seem to think that somehow they can magically fit in 400 (old and inefficient) VLIW5 stream processors?

Heck, even Chipworks and NeoGAF don't think it has that many; they think it's roughly 160-320.

However, let's be realistic. The numbers you are grabbing? There is no logic, there is no solid source; heck, some of the source information you post has ZERO relevance to the topic at hand.

However, to put things in perspective: the Latte GPU is of an unknown design, that's 100% certain. We do know it's based on a Radeon 5000/6000 architecture, but that's about it.

There are 8 logic blocks, or SPUs. For VLIW5 there are usually 20-40 ALUs per block.

If it was based upon VLIW4 (unlikely!), then there is the potential for 32 ALUs per block.

Thus 8*20 = 160.

And 8*40 = 320.

And 8*32 = 256.
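The same multiplication, written out as a tiny sketch. The 8 blocks and the 20/32/40 ALUs-per-block figures come from the post; the configuration labels are just names for illustration. It also shows what the 400-SP claim would require per block.

```python
# 8 SIMD blocks ("SPUs"), with the ALUs-per-block counts discussed above.
SPU_COUNT = 8
ALUS_PER_BLOCK = {
    "VLIW5 (low)": 20,
    "VLIW4": 32,
    "VLIW5 (high)": 40,
}

def total_alus(blocks: int, per_block: int) -> int:
    """Total stream processors = blocks x ALUs per block."""
    return blocks * per_block

totals = {name: total_alus(SPU_COUNT, n) for name, n in ALUS_PER_BLOCK.items()}
for name, alus in totals.items():
    print(f"{name}: {alus} stream processors")

# A 400-SP part with 8 blocks would need 50 ALUs per block,
# above the 20-40 range usual for VLIW5.
print("ALUs per block needed for 400 SPs:", 400 // SPU_COUNT)
```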

These are the most logical numbers and the ones any sane person would go for. Why? History. AMD spent a lot of years refining VLIW; it knows what does and does not work and what is the most efficient.

There is an absolutely tiny, and I mean *tiny*, chance that the Wii U is using more ALUs in every SPU. Let me be clear: you won't be getting 400 stream processors.

Thus, if it had 160 stream processors you would have 176 GFLOPS.

If it had 320 stream processors you would have 352 GFLOPS.

If it had 256 VLIW4 stream processors you would have 282 GFLOPS.

The VLIW4 design (256 stream processors) would probably be faster than the VLIW5 design (320 stream processors) due to efficiencies.
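Where those GFLOPS figures come from, as a rough sketch. Two assumptions that are not stated in the post: a GPU clock of 550 MHz (the widely reported Latte clock) and each ALU doing one multiply-add per cycle, counted as two floating-point operations. Under those assumptions the formula reproduces the numbers above; it is a theoretical "on paper" peak, not real-world throughput.

```python
# Assumptions (not from the post): 550 MHz clock, one multiply-add per ALU per cycle.
CLOCK_GHZ = 0.55
FLOPS_PER_ALU_PER_CYCLE = 2  # a multiply-add counts as two operations

def peak_gflops(stream_processors: int, clock_ghz: float = CLOCK_GHZ) -> float:
    """Theoretical single-precision peak: ALUs x FLOPs per cycle x clock in GHz."""
    return stream_processors * FLOPS_PER_ALU_PER_CYCLE * clock_ghz

for sps in (160, 256, 320):
    print(f"{sps} SPs -> {peak_gflops(sps):.1f} GFLOPS")
```

Under the same assumptions, the 400-SP claim would work out to 440 GFLOPS.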

Red are the GPU blocks, because they're a repeating pattern that's all the same; blue is likely the back-end.

Also, don't forget that when you are building a die, you need to set aside a significant percentage of transistors for redundancy, so you can get maximum yield out of a die by replacing any parts that are faulty. (An example being the Cell processor with one of its eight SPEs disabled.)

megafenix said:

All these things point out that 176 gigaflops, or just 160 stream processors, wouldn't be enough for a lazy port to even work on the Wii U. Hell, even one of the secret developers admitted that despite the Wii U having compute shader capability in an early dev kit, they didn't use the feature; that tells you how lazy they are being. So obviously all that performance and modern architecture goes to waste when you port from an older system in most cases. And we expect the console to do miracles with just 160 stream cores when not even the Xbox One and PS4 can do 1080p 60fps in ports from the older generation, even though they are more modern and efficient and also exceed the raw power needed for that? Of course not. And it's not just the problems that come with porting or developers being lazy, but also the fact that the development tools and engines were in their infancy when the first Wii U ports came out, and still the games worked fine. Summing all that up, the examples of the Xbox One and PS4 ports, and the PS3's worse version of Bayonetta due to it being a port, also point this out.

It's not only Bayonetta 1 on PS3 being bad because it was a port, compared to the 360 version, and having half the framerate. It's not just the PS4 version of Assassin's Creed 4, which, despite the PS4 being more modern, efficient and easier to develop for, can't run at 1080p60fps and was running at just 900p30fps before the patch to 1080p30fps, despite having all the advantages of modern hardware and being 750% more powerful than the 360 on paper. We also have many other examples: have you forgotten Call of Duty: Ghosts on the Xbox One? The Xbox One is more modern, more efficient and also 500% more powerful on paper, so why is it running at just 720p?

|

I once had a Radeon 6450, which has 160 stream processors; you would be surprised how well it runs console ports at 720p with low settings, even Assassin's Creed 4. (Thankfully I replaced that POS with a Radeon 6570 in the Core 2 rig.)

But don't take my word for it...

http://www.youtube.com/watch?v=yFOrCJxnyDo

Remember, the next-gen twins are running games that are graphically superior to the Wii U's in every single way; they're in completely different leagues.

What you're suggesting is essentially that there is no performance delta between a Wii U and the fastest PC you can build because both are only running at 720p. (The audacity!)

Since when has resolution been a defining factor on this forum in regards to hardware potency and graphics? I mean, seriously? It's but one minor part of a stupidly large puzzle that defines the graphical fidelity of a scene showcased on your screen.
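For a sense of scale, here is a small sketch of the raw pixel counts for the resolutions being argued about (720p, 900p and 1080p, using the standard 16:9 dimensions). It only measures how many pixels get drawn per frame; it says nothing about the settings, lighting, effects or asset quality that make up the rest of the picture, which is the point above.

```python
# Standard 16:9 frame sizes for the resolutions mentioned in this thread.
RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
}

def pixels(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height

base = pixels(*RESOLUTIONS["720p"])
for name, (w, h) in RESOLUTIONS.items():
    n = pixels(w, h)
    print(f"{name}: {n:,} pixels per frame, {n / base:.2f}x 720p")
```

1080p is 2.25x the pixels of 720p. That is a real cost, but a game at "High" settings and 720p can still look far better than one at "Low" settings and 1080p.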

megafenix said:

Shouldn't the cache help with the framerate? The 360 has only 1MB and the Wii U has 3MB, so even if it's slower, if the developers take advantage of the extra 2MB of cache there should be no framerate issues. Plus, one of the secret developers at Eurogamer admitted that they were being lazy, saying that compute shaders were there but they didn't bother using them. Add to that the fact that the 360 uses one core for sound, so obviously the Wii U port will do the same instead of using the DSP if the developers don't put effort into changing the code rather than just adapting it. Your geometry shaders also go to waste because the 360 doesn't support them, so the port won't use them unless the developers change it. But they didn't bother with the compute shaders, so why would they bother with the geometry shaders and other new features for better performance?

etc

|

No. Cache does NO processing of any form; it just stores information, like a hard drive, only orders of magnitude faster.

As for geometry shaders and compute shaders, the Xbox 360 can deal with those, albeit in an extremely limited way, and it would probably translate well to Latte, as Latte is several evolutionary steps beyond the Xbox 360's GPU.

However, the other little tidbits of the hardware may have had an effect on things.

Anyhow, I know this is a long shot, but do try to listen to reason instead of falling back on your disproved ideas and numbers.

|