fatslob-:O said:

|

eyeofcore said:

@Bold Just how much pointless shit are you going to post just to respond to me ?

Why is it "pointless shit"? Above all it is on topic and directly related to your statements. It involved bandwidth, a crucial difference between Wii U and Xbox 360, and it is aimed at your post/reply, so I assume you are avoiding this part by calling it "pointless shit".

|

I don't give a rat's ass about the difference between the WII U and X360! You didn't respond to the question I was asking with the pointless shit you gave me.

The problem is that this "pointless shit" is only pointless shit to you, since you don't care about the difference between Wii U and Xbox 360 to get the picture. And yet I did respond to your question, while you are avoiding mine like the plague!

|

eyeofcore said:

"Secret sauce" ? Really dude ? I guess you don't really know how semiconductor foundries work then, eh ? Renesas were the ones DESIGNING the eDRAM and the one who FABBED it was TSMC. If Renesas were to go under it would mean shit to Nintendo because they can just license the IP from them to continue manufacturing the WII U.

I was quoting ("") the article. Tell me something that I don't know... I love how you presume this and that about me.

Quote from article;

"As part of that restructuring Renesas, that merged with NEC Electronics in 2012, announced that it decided to close four semiconductor plants in Japan within 2-3 years, including the state-of-the-art factory based in Tsuruoka, Yamagata Prefecture (as reported by the Wall Street Journal), and this may spell trouble for Nintendo and the Wii U.

The reason is quite simple. The closing factory was responsible for manufacturing the console’s Embedded DRAM, that is quite properly defined the “life stone” of the console."

What do you understand by manufacturing?

|

I'll tell you something. You gave even more pointless shit to the discussion at hand and still haven't even gotten to answering my question! I'll say it once again and no more, RENESAS DOES NOT MANUFACTURE THE eDRAM ITSELF, THAT IS SUPPOSED TO BE TSMC's JOB!

You told me pure BS. Wow... You accuse me of not answering the question, yet I answered it. Your ignorance is shocking! Renesas does produce the eDRAM in its own plants, like NEC did for Xbox 360 in its own plants, and NEC got acquired by Renesas. Also, the reason the Xbox 360 did not have eDRAM embedded into the GPU is that it was produced at a separate factory! For Wii U's GPU, the eDRAM is embedded into the GPU, and that is possible if the GPU and eDRAM are manufactured at the same plant!

(picture of Wii U GPU from Chipworks)

Quote from the ARTICLE;

"Nintendo could try to contract another company to produce the component, but there are circumstances that make it difficult. According to a Renesas executive the production of that semiconductor was the result of the “secret sauce” and state-of-the-art know-how part of the NEC heritage of the Tsuruoka plant, making production elsewhere difficult. In order to restart mass production in a different factory redesigning the component may be necessary." [CONCLUSION; It is not produced at TSMC, further confirmed by Wii U GPU die]

Just because AMD's GPUs were produced at TSMC does not mean that they can not be produced elsewhere. It has been confirmed that AMD can produce its GPUs elsewhere, like at GlobalFoundries with AMD's APUs that contain the GPU portion!

|

eyeofcore said:

Duh. The WII U is SUPPOSED to be easier to develop for because it has better programmability. (Again don't give me pointless shit in your post because I have followed a lot of tech sites while doing some research and I'm not clueless to these subjects.)

Another thing that I already know... :/

Of course it is easier, but if it's a port what can you expect?

Even the PlayStation 4 version/build of Assassin's Creed Black Flag doesn't look that impressive despite how powerful the console is and the fact that it is even easier to develop for, since it has no eDRAM but rather just main RAM! So no matter how lazy/sloppy the developers are, that should ease things a lot, yet they fail to bring an impressive port/build with all of those advantages!

|

AGAIN YOU CONTINUE TO NOT ANSWER MY QUESTION AT HAND. YOU GAVE ME ANOTHER POINTLESS RESPONSE. DO YOU HAVE AN ISSUE WITH ENGLISH ?!

Yet again I did answer your freaking question, then I added the "pointless" part that is not pointless but rather connected to what you were saying. You have an issue bro and I am afraid I can't help you. Your ignorance is out of my league to cure, bro.

You can't comprehend that people around the world have a different native language than yours and that English is their secondary language, not primary, so they will most likely not speak and write it perfectly. You even stumble sometimes yourself, so shut up. Nobody is perfect.

Deal with it... For once in your life!

|

eyeofcore said:

What about the rayman legends creator saying that the WII U is "powerful" ? The word "power" is a vague term once again.

The word "power" is not a vague term, nice try. As for what he said, I already pointed out that he is a graphic artist and a programmer, not just a game designer, since he works with the hardware and codes for it! He says that Wii U's hardware is powerful since he worked on it and programmed for it. He said that the game worked with uncompressed textures on Wii U, so it takes more RAM and also more resources because more data is processed.

|

Again the word "power" is undefined. What are we talking about specifically ? Is he talking about the fillrates, shaders, or its programmability ? No context was given and thus the meaning is lost. What does this have to do with my comment initially at hand ?!

Definition of word "Power"; the rate of doing work, measured in watts or less frequently horsepower / capacity or performance of an engine or other device

Definition of word "Powerful"; Having great power or strength

@underlined You made a cowardly move my friend. That does not invalidate his statement, and he is under NDA so of course he can not talk about the fillrates, shaders or the programmability. Your counter argument just got denied and is labelled invalid! At least Shin'en was able to share some things;

"Getting into Wii U was much easier then 3DS. Plenty of resources, much faster dev environment."

"Wii U GPU and its API are straightforward. Has plenty of high bandwidth memory. Really easy."

"You need to take advantage of the large shared memory of the Wii U, the huge and very fast EDRAM section and the big CPU caches in the cores."

[Yes, the websites car is directly from the game. / Well, we are simply to small to build HQ assets just for promos]

@italic&underlined It has to do with your assumption that is incorrect, yet you will still continue to force it...

|

eyeofcore said:

Wow you're really hurt about another man over the internet, aren't you ? You're afraid of somebody disproving you ?! *Facepalm*

You are just forcing a premise that is not correct. Why would I be hurt over a person that I don't know? It is inlogical, and you are trying to provoke me since you are a troll who forces a rumor as a fact, thus damaging your own credibility. If I was afraid then I would not be responding to you in the first place, so you should be facepalming at yourself and not at me for asking these two questions.

|

Then why don't you disprove the rumor at hand ?! There's more evidence for it than against it. "inlogical" ?! LMAO you really have terrible English, don't you ? It's "illogical"!

Why would I, since the person that created the thread said that you should treat it as a rumor? The guy said it is a rumor and mere speculation, so deal with it. Claiming that there is more evidence for it than against it is really a fallacy that became the "truth" thanks to people that keep fooling themselves and lying to themselves.

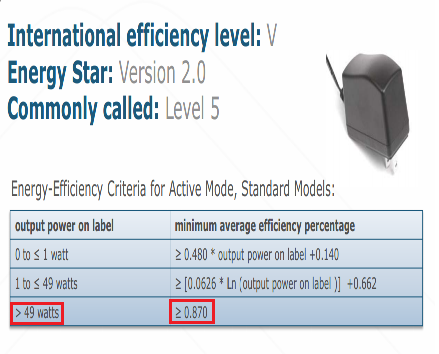

First of all, the shaders or ALUs would have to be 80 to 90% larger for no apparent reason, and no reasons are given why they would be 80 to 90% larger. Plus there is about 90mm^2 of die space dedicated to the GPU exclusively. Wii U die size is 146.48mm^2 according to Chipworks, while with the protective shell it is 156mm^2 according to Anandtech. Chipworks confirmed it is made on 40nm lithography.

A Radeon HD 6570M VLIW5 is 104mm^2 with protective shell and without it it should be roughly 96mm^2 and its specifications are;

480 SPUs / 24 TMUs / 16 ROPs / 6 CUs with a clock of 600MHz and TDP of 30 watts

Lowering the clock by about 8.3% to 550MHz would lower TDP to 25 watts. Now you will try to argue that it can not fit into 90mm^2 and that it consumes way too much for the Wii U. I can counter that easily, since as you, Fatslob-:O, said, they can shrink/increase density by 10% by optimizing the design, so it would be 86.4mm^2, and that would also shave off another 10% of TDP, so it is now at 22.5 watts! Consider also that Wii U's GPU, codenamed Latte, would be customized by Nintendo and AMD, so there would be more improvements to the design. And since the Chipworks guy said that he can not identify which series and exact model this GPU is, it proves that Wii U's GPU Latte is indeed a customized, heavily customized GPU.
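The scaling arithmetic in the paragraph above can be checked with a short sketch. The inputs (96mm^2, 600MHz to 550MHz, a 10% density gain, a 90mm^2 budget) are the figures quoted in this post. The 25 watt figure after the clock drop is taken as claimed rather than derived, and the function and constant names are made up for illustration.

```python
# Rough check of the die-area and TDP estimate quoted in the post.
# All inputs are the poster's figures; the names are hypothetical.

def clock_cut_percent(old_mhz: float, new_mhz: float) -> float:
    """Relative clock reduction in percent."""
    return (old_mhz - new_mhz) / old_mhz * 100.0


def apply_gain(value: float, gain: float) -> float:
    """Shrink a value by a fractional gain (0.10 means 10% smaller)."""
    return value * (1.0 - gain)


BASE_AREA_MM2 = 96.0     # HD 6570M die without shell, as estimated in the post
GPU_BUDGET_MM2 = 90.0    # die space the post assigns to the GPU
TDP_AT_550MHZ_W = 25.0   # TDP after the clock drop, as claimed (not derived here)
DENSITY_GAIN = 0.10      # 10% design optimization assumed in the post

cut = clock_cut_percent(600.0, 550.0)
area = apply_gain(BASE_AREA_MM2, DENSITY_GAIN)
tdp = apply_gain(TDP_AT_550MHZ_W, DENSITY_GAIN)

print(f"clock cut: {cut:.1f}%")
print(f"area: {area:.1f} mm^2, fits budget: {area <= GPU_BUDGET_MM2}")
print(f"TDP: {tdp:.1f} W")
```

This only confirms that the numbers are internally consistent under the post's own assumptions; it says nothing about whether a real die would scale this way.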

Now you will still argue that it is not possible because the Wii U consumes 30 to 35 watts maximum, yet I can counter that simply by saying that the Wii U has a Class A power supply with efficiency of 90% that is rated at 75 watts, so it can handle 67.5 watts at maximum. Since the Wii U consumes 30-35 watts, we can assume that Nintendo locked some resources in Wii U's SDK/firmware. You will try to counter that, yet the PlayStation 3 SDK/firmware was updated and freed up an additional 70MB for games from the OS, a 3DS update allowed more CPU cycles for games, and the Xbox 360 SDK got updated, which resulted in less usage of RAM, CPU and GPU resources, with one core no longer being exclusive to audio like in the beginning. Shin'en said this in the interview;

"And for the future, don’t forget that in many consoles, early in their life cycle, not all resources were already useable or accessible via the SDK (Software Development Kit). So we are sure the best is yet to come for Wii U."

|
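The power-supply figures in the reply above can be worked through the same way. This is a minimal sketch that takes the post's reading at face value, namely that a 75 watt rating at 90% efficiency leaves 67.5 watts for the console; that interpretation is the poster's assumption, and the names below are hypothetical.

```python
# Power budget implied by the post's figures; names are hypothetical.

def deliverable_watts(rated_w: float, efficiency: float) -> float:
    """Power available to the console under the post's reading of the rating."""
    return rated_w * efficiency


RATED_W = 75.0                   # power supply rating quoted in the post
EFFICIENCY = 0.90                # efficiency quoted in the post
MEASURED_DRAW_W = (30.0, 35.0)   # console draw range quoted in the post

limit = deliverable_watts(RATED_W, EFFICIENCY)
headroom = tuple(limit - draw for draw in MEASURED_DRAW_W)

print(f"limit: {limit:.1f} W")
print(f"headroom: {min(headroom):.1f} to {max(headroom):.1f} W")
```

Unused headroom by itself does not show that resources are locked in the SDK; the sketch only reproduces the arithmetic.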

eyeofcore said:

Who the hell cares what renesas says ?! I want to see the end result!

So nobody cares about a statement from a company that designed and produces eDRAM for Wii U? It was said by a Renesas executive and not by some guy on a forum that may or may not be correct. This is straight from Renesas itself, and when DualShockers wrote "secret sauce" that means that it is most likely a custom design, and "state-of-the-art know-how" literally means/hints that the best of the best is being applied to the Wii U. If you want results then wait. It's like chemistry, the result is not instant, and neither is designing a weapon like a mortar. It needs time to shine, like on PlayStation 3, like on Xbox 360, like the Source engine.

You can not get the result right from the start... :P

By your logic we would have seen everything from start in every console/handheld/mobile/technology generation... Your logic. :/

|

I like how you ignore PC. Are you going to answer the comment directly or will you continue posting more pointless crap for me to read ?

I answered you directly, it seems you can not comprehend that I answered your question directly... I am sorry for ignoring the PC, did I hurt your feelings or something? When it comes to devices, a PC is configurable, while consoles, handhelds and mobile phones basically have a bunch of SKUs and you can not upgrade their CPU, GPU or RAM, except for the N64, which had the Expansion Pak that doubled its amount of RAM.

|

eyeofcore said:

Who gives a damn about shin'en ?! Are they really that important ?!

Same could be said for Crytek, Rare, Sony Santa Monica, Guerrilla Games, Valve, id Software, Epic Games, etc... when they were small and not really relevant. Also, they really are that important because they use the hardware and they will try to get the most out of it, and you forgot that these guys came from the demo scene, with different principles than modern day programmers.

Look at FAST Racing League and Jett Rocket, which people would have thought were games on Xbox 360 and not on Wii. Shin'en did things that others did not on Wii, including ambient occlusion in their games. Don't doubt these guys that were in the PC demo scene.

|

More pointless crap for not answering my initial concerns at hand, eh ?

Hey, I can't blame you. For you it is pointless crap while for others it is an answer. I was discussing your logic, so by your logic you actually think that your own opinion is pointless crap. I am not trolling you, I am just stating the opinion/behaviour that you have shown.

|

eyeofcore said:

More people ?! Wow man you really can't defend your own grounds.

DID I CLAIM THAT THE eDRAM ONLY HAD 35GB/s BANDWIDTH ? (Again your lack of English understanding makes everyone confused.)

Seriously?

Sorry for the misunderstanding then, so would you be so kind to tell me how much you think it has?

Can you also at the very least provide some kind of proof like a formula to calculate it?

And please, don't forget I can get about 10GB/s of bandwidth with just 1MB of the old embedded memory on the GameCube's GPU!

Please answer the last two questions involving bandwidth!
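For reference, the usual back-of-the-envelope formula for peak memory bandwidth is bus width times clock. A minimal sketch follows, assuming the commonly cited GameCube texture-cache figures of a 512-bit bus at 162MHz; those two numbers are not stated in this thread and are used only as an illustration, and the function name is hypothetical.

```python
# Peak bandwidth estimate: bytes per transfer x transfers per second.
# The 512-bit / 162 MHz inputs are assumed figures, not from the thread.

def peak_bandwidth_gb_s(bus_width_bits: int, clock_mhz: float,
                        transfers_per_clock: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


gamecube_texture_cache = peak_bandwidth_gb_s(512, 162.0)
print(f"{gamecube_texture_cache:.2f} GB/s")
```

This is a theoretical peak only. Sustained bandwidth depends on the access pattern, so a real workload would land below it.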

|

It depends on where the memory needs to flow and BTW it's 3MB of eDRAM. Kinda sad how you don't know about the hardware of a certain console from your favourite console manufacturer.

Agreed, and I know that, except we have two separate pools of eDRAM, not one, if you are suggesting that it is one pool. It is actually sad that you assume a certain company is my favorite console manufacturer, since the only console I ever had was the original PlayStation that I got in 1999, and I have been on the PC/x86 platform since 2004. I admit that I had brief contact with PlayStation 2 and PlayStation 3 at my friend's house and that's about it. I've never seen any Nintendo platform in person, so I have not even touched, let alone played games on, Nintendo's platforms. I don't own any platform from Nintendo nor their games, so your point and provocation are without any foundation. Move along. Your desperation and ignorance stink up this place.

The memory flow is not constant and what's more is that the constraints aren't clear either for every different workloads.

Everything has variables... Tell me something I did not already know.

|