Jizz_Beard_thePirate said:

hinch said:

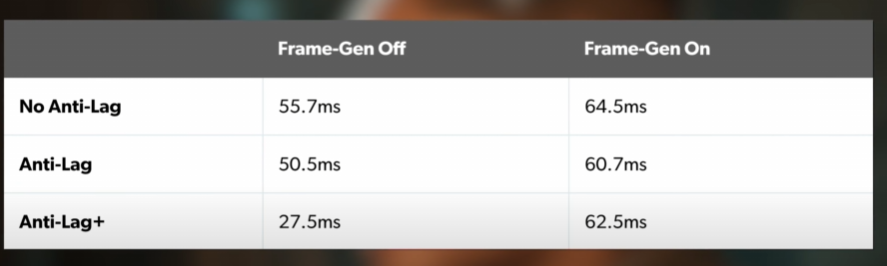

Hardware Unboxed deep dive into FSR 3.0. Not bad. A bit of a scuffed launch for the tech, but there are positives: less CPU overhead vs DLSS 3, and it works decently at generating frames, with competitive input lag (though higher than DLSS 3). Downsides: image quality still leaves a lot to be desired due to FSR's current upscaling capabilities. There are frame pacing issues. It needs a good base framerate, like DLSS 3, and there are caveats like not having FreeSync and needing to turn on Vsync for a smoother experience. They don't really recommend it in its current state. They'll improve on it, but meh, that's a lot of things to give up to use it rn. Plus you need to have upscaling turned on for FG to work properly. Then there's no access to Reflex for Nvidia users and no FreeSync/G-Sync support. Should've just delayed to fix those issues tbh, especially frame pacing, as that can't make for a good experience.

There is no better sales team for Nvidia products than Radeon themselves. Nvidia makes something proprietary, claiming it requires specialized hardware to make it work. AMD announces they are making an open version that works on every GPU, thus proving Nvidia is a big bad evil corp. AMD's solution releases and is objectively much worse than Nvidia's solution. Customers see the reviews and justify spending the premium on Nvidia products, as AMD just proved Nvidia's claims to be correct. Honestly, AMD's tactics need to change. Their idea this entire generation seems to be "FreeSync and VRR killed G-Sync, so if we make an open standard for every Nvidia technology, then it should kill off the adoption and need for their tech." But the issue is that FreeSync and VRR aren't significantly worse than G-Sync. The G-Sync module has its advantages, such as being able to go down to 1 Hz and allowing for ULMB, but FreeSync/VRR can do variable refresh rate just as well as G-Sync if you stay within its window, and it allows for HDMI 2.1 support, which the G-Sync module still doesn't. FSR 1/2/3, on the other hand, effectively makes your games look and play much worse than DLSS. FSR is effectively little to no different from upscaling solutions that already exist, and the thing is that PC gamers do not want that. Why? Because PC gamers shat on console gamers for generations for using upscaling tech similar to FSR. DLSS is different because it really is the next generation of upscaling tech that consoles and Radeon products simply do not have access to. And it's like, well, if I am going to pay more than a console for a GPU, why wouldn't I get a GPU that has upscaling tech that is better than the rest? Cause god knows game devs aren't optimizing shat this generation. Hopefully Herkelman's successor finds a way for Radeon to start innovating, because playing second class is not working for them in the PC space.
The marketing does not work because Nvidia is the default choice, and it's known that people do more research before buying the "second choice". If reviewers keep saying Nvidia has the better tech, people are generally willing to pay the premium for it.

The problem is, what other choice do they have?

Nvidia doesn't share their technology, so AMD needs to make its own version every single time, otherwise they won't be competitive anymore. But since AMD is much smaller than Nvidia, an open standard is the only way they could do it without falling too far behind, as then other companies and people can help them catch up again.

AMD also can't really innovate right now, as they simply have their hands full with catching up. AMD would need to massively expand their GPU department to be able to innovate in the current situation, and I'm not sure there's even enough talent on the market (good or bad) to achieve this.

According to Zippia, AMD has around 15,500 employees, of which of course a big part works in the CPU department and on semi-custom, and it has about 1,260 job openings right now. Nvidia, on the other hand, has over 26,000 employees, most of whom are working on GPUs. Nvidia has also massively expanded its workforce, basically doubling it since 2020 and more than tripling it since 2018, so there won't be much talent left for AMD to hire either way.
