Cyborg13B said:

And now let's draw comparisons to the GPU dice of the Xbox 360. At the end of my post, I reveal the Wii U GPU's transistor count. It is shockingly large! Here's the picture of the Xbox 360's GPU dice:

And the final numbers:

Wii U GPU's transistor count = 1.689 billion

Wii U GPU's EDRAM transistor count = 215.8 million transistors

Wii U GPU's logic transistor count = 1.4732 billion transistors

That's a lot of transistors for a machine that runs so slowly! And thus the reason Nintendo hid the figures from us - they don't want us to come to this conclusion.


Transistor Count

No, your transistor count for the GPU is way off. Even Juniper (RV840), manufactured on TSMC's 40nm node, had just 1.04 billion transistors with a die size of 166mm2. You are literally adding 62% more transistors out of thin air.

http://techreport.com/review/17747/amd-radeon-hd-5770-and-5750-graphics-cards/2

Specification perspective

The fastest 40nm R700 GPU AMD ever made was the HD4770, codenamed RV740. That 40nm part, manufactured at TSMC, had a 750 MHz GPU clock, 640 shaders, 32 TMUs, 16 ROPs and a 128-bit bus with 51.2 GB/sec of bandwidth.

http://www.anandtech.com/show/2756/2

Die size perspective

The RV740 (HD4770) GPU had 826 million transistors on a 137mm2 die, for a total floating point performance of 960 GFLOPS.

http://techreport.com/review/16820/amd-radeon-hd-4770-graphics-processor
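For anyone wondering where the 960 GFLOPS figure comes from, here is a minimal sketch of the usual peak-throughput arithmetic. It assumes each stream processor retires one multiply-add (2 floating point operations) per clock, which is the standard way theoretical peak is quoted for these parts; the function name is just for illustration.

```python
def peak_gflops(stream_processors: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical single-precision peak in GFLOPS.

    Assumes one multiply-add (2 FLOPs) per stream processor per clock.
    """
    return stream_processors * flops_per_clock * clock_mhz / 1000.0


# HD4770 (RV740): 640 stream processors at 750 MHz
print(peak_gflops(640, 750))
```

This is a paper number only. Real games never hit theoretical peak, but it is a fair yardstick for comparing parts of the same architecture.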

GPU size perspective

The cooler you'd need to cool down an 84W 40nm 137mm2 HD4770 inside the Wii U:

The card looks like this:

It has a TDP of 80W, but the card actually drew 84W in games. A 1.04 billion transistor 40nm Juniper HD5770 drew 112W. Therefore, your theory of a 1.689 billion transistor 40nm TSMC chip is right out the window. We also know that the Wii U's total power consumption can never exceed 80W, which means its GPU must be slower than HD4770, since they are manufactured on the same node and AMD only significantly changed the architecture starting with GCN (HD7000). Wii U's GPU also seems to lack official DX11 support, which makes it unlikely to be HD5000 or 6000 series.
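To put rough numbers on the power argument, here is a crude sketch that scales board power linearly with transistor count. That linearity is my simplifying assumption (it ignores clocks, voltage, memory and board losses), so treat the output as an order-of-magnitude check, not a measurement. The helper names are made up for this post.

```python
def watts_per_million(board_watts: float, transistors_millions: float) -> float:
    """Measured gaming power divided by transistor count (in millions)."""
    return board_watts / transistors_millions


def naive_power_estimate(ref_watts: float, ref_transistors_m: float, target_transistors_m: float) -> float:
    """Scale a reference card's power linearly to a different transistor count."""
    return watts_per_million(ref_watts, ref_transistors_m) * target_transistors_m


CLAIMED_TRANSISTORS_M = 1689  # the 1.689 billion figure from the quoted post
WII_U_CEILING_W = 80          # whole-console power ceiling

from_hd4770 = naive_power_estimate(84, 826, CLAIMED_TRANSISTORS_M)    # HD4770: 84W, 826M
from_hd5770 = naive_power_estimate(112, 1040, CLAIMED_TRANSISTORS_M)  # HD5770: 112W, 1.04B

print(round(from_hd4770, 1), round(from_hd5770, 1))
print(from_hd4770 > WII_U_CEILING_W and from_hd5770 > WII_U_CEILING_W)
```

Either reference card puts a 1.689 billion transistor 40nm chip at well over double the 80W ceiling of the entire console.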

Transistor Density and Impact of eDRAM

826 million transistors / 137mm2 die implies a transistor density of about 6.03 million / mm2. Assuming similar transistor density, the Wii U's 150mm2 GPU would land at roughly 904 million. But here is the more pressing point: we know that Wii U's GPU has eDRAM eating up a bunch of the die space, at least 30%, which suggests the actual GPU components responsible for graphics MUST be taking up less space than they do inside the 137mm2 RV740 die. Since there is no such thing as "alien 40nm tech at TSMC" that is somehow generations ahead in performance/watt of what AMD used for RV740 (HD4770) or RV840 (HD5770), there is no way Nintendo could have increased transistor density by 50-60%. Additionally, with the eDRAM taking up so much of the die space, there is no way the remaining portion of the GPU die can fit a full-blown HD4770 with 960 GFLOPS on the 40nm node, since the Wii U's die is only ~150mm2.
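Here is the density math as a quick sketch, carrying the unrounded density through. It assumes the Wii U die matches RV740's density and that eDRAM takes exactly 30% of the die (the post only says "at least 30%", so the logic figure is an upper bound under that assumption). Variable names are mine.

```python
def density_m_per_mm2(transistors_millions: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per mm^2."""
    return transistors_millions / die_mm2


RV740_DENSITY = density_m_per_mm2(826, 137)  # HD4770: 826M on 137mm2

WII_U_DIE_MM2 = 150       # approximate die size
EDRAM_FRACTION = 0.30     # assumed lower bound on die area used by eDRAM

# Whole die at RV740 density
whole_die_m = RV740_DENSITY * WII_U_DIE_MM2

# Only the area left over for GPU logic once eDRAM is subtracted
logic_area_mm2 = WII_U_DIE_MM2 * (1 - EDRAM_FRACTION)
logic_m = RV740_DENSITY * logic_area_mm2

print(round(RV740_DENSITY, 2), round(whole_die_m), round(logic_area_mm2), round(logic_m))
```

So at RV740 density, the logic area left after eDRAM holds fewer transistors than the 826 million a full HD4770 needs.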

Impact of Neutered Specifications compared to AMD's fastest ever R700 40nm HD4770

We also know that the GPU in the Wii U is crippled by a 64-bit bus with only 12.8 GB/sec of memory bandwidth. And no, eDRAM cannot make up for the loss of the 51 GB/sec from the dedicated 1GB of GDDR5 that RV740 (HD4770) had. The rumored specs are also all below the 640:32:16 (128-bit) of RV740.
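As a rough illustration of how far apart those two memory setups are, this sketch backs out the per-pin data rate from the quoted bandwidth and bus width using bandwidth = (bus width / 8) x per-pin rate. Only the bandwidth and bus figures come from the numbers above; the helper names are hypothetical.

```python
def bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/sec for a given bus width and per-pin rate."""
    return bus_bits / 8 * gbps_per_pin


def per_pin_gbps(bandwidth: float, bus_bits: int) -> float:
    """Per-pin data rate (Gbit/sec) implied by a bandwidth and bus width."""
    return bandwidth * 8 / bus_bits


hd4770_pin = per_pin_gbps(51.2, 128)  # HD4770: 128-bit bus, 51.2 GB/sec
wii_u_pin = per_pin_gbps(12.8, 64)    # Wii U: 64-bit bus, 12.8 GB/sec

print(round(hd4770_pin, 2), round(wii_u_pin, 2))
print(round(51.2 / 12.8, 2))  # how many times more bandwidth HD4770 has
```

Half the bus width and half the per-pin rate multiply together, which is how the Wii U ends up with a quarter of the HD4770's main memory bandwidth.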

Finally, we also know the Wii U's GPU is clocked at 550 MHz, not the 750 MHz of the HD4770. The power consumption of the Wii U vs. similarly sized AMD GPUs on the 40nm node also suggests that the GPU has to be slower than HD4770.

Best Case Scenario

If we assume some absolutely magical scenario where the GPU inside the Wii U is just an HD4770 downclocked to 550 MHz with a fully intact 640 SP, 32 TMU, 16 ROP setup, and the loss of memory bandwidth is fully offset by eDRAM, we get:

HD4770's 51 VP * (550 MHz / 750 MHz) = 37.4 VP

http://alienbabeltech.com/abt/viewtopic.php?p=41174
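The best-case estimate is plain linear clock scaling, sketched below. It assumes performance scales one-to-one with core clock and that nothing else (bandwidth, unit counts) holds the chip back, which is generous to the Wii U. The function name is just for illustration.

```python
def scale_by_clock(reference_score: float, reference_mhz: float, target_mhz: float) -> float:
    """Scale a performance score linearly with core clock (best-case assumption)."""
    return reference_score * target_mhz / reference_mhz


# HD4770 is rated at 51 VP at 750 MHz; the Wii U GPU runs at 550 MHz
best_case_vp = scale_by_clock(51, 750, 550)

# The same scaling applied to the HD4770's 960 GFLOPS theoretical peak
best_case_gflops = scale_by_clock(960, 750, 550)

print(round(best_case_vp, 1), round(best_case_gflops))
```

Any shortfall in shader count or effective bandwidth only pushes the real figure below this ceiling.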

^ This is the absolutely best case scenario for the Wii U. If we assume HD5550, then the GPU itself starts off nearly 2x slower than HD4770. No point in even doing any math.

All of these factors automatically mean the GPU inside the Wii U has to be way slower than HD4770.

Old games like Far Cry 2 at 1080P at 30 fps. Doesn't sound promising for a next gen console.

The Tessellation Unit argument vs. Xbox 360's GPU

You really need HD5850 or faster to use tessellation in older games on the AMD side. For modern games like Crysis 2-3, Batman Arkham City/Asylum and Metro 2033, you need at least HD6950, and preferably GCN.

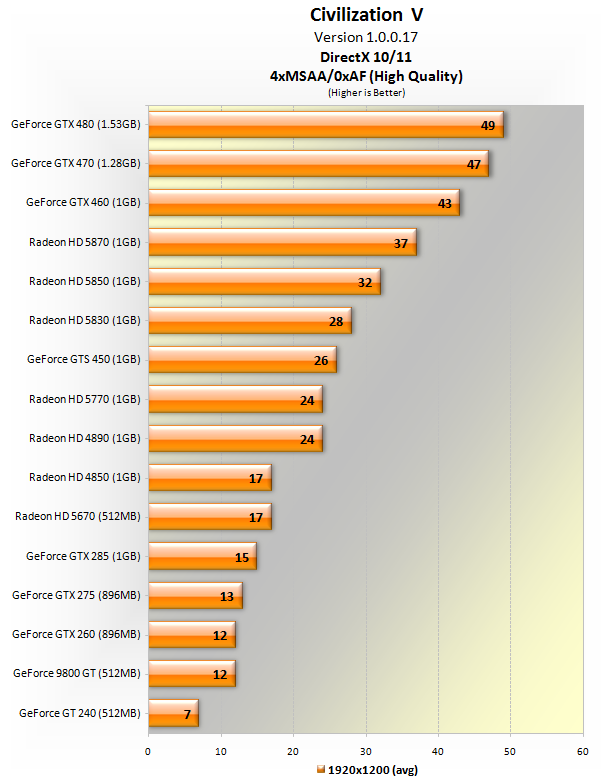

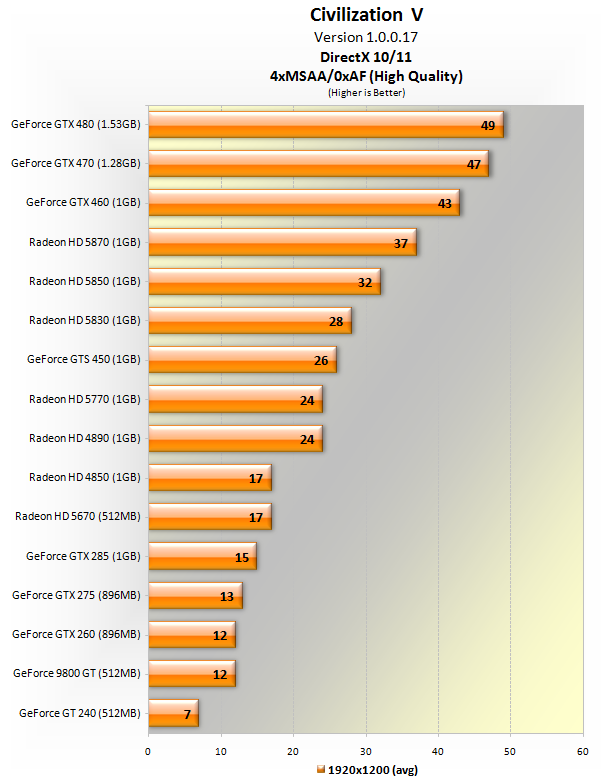

One of the early games that used tessellation on the PC was Civilization V. HD4850, which is actually slightly faster than HD4770, completely falls apart in this game, and so do even the way more powerful HD4890 and HD5770. In other words, the extra "features" of the R700 GPU will be worthless for DX11 effects, since the actual functional units that perform them are too weak in the R700 architecture. The same is even true for the whole HD5000 series in modern games. This is why any next generation console that wants next generation graphical effects needs a GCN architecture, or it will fail to deliver next gen visuals. Games like Trine 2 can still look great on the Wii U, since those are not next generation games but current generation PC games.

That means the Wii U might be able to pull off 2D side-scrollers with high resolution textures and effects like Trine 2 way better than PS360, but it will seriously struggle to run games like Crysis 3 or BF4 at 30 fps with good settings. What about 2015-2016 multi-platform PS4/720 games at 1080P?

Frankly I don't even believe the GPU inside the Wii U is even 60% as powerful as the HD4770.

GPGPU Compute Argument

You can utilize the Stream Processors inside a GPU for general purpose computing. For games this means DirectCompute, also called a Compute Shader.

"A compute shader provides high-speed general purpose computing and takes advantage of the large numbers of parallel processors on the graphics processing unit (GPU). The compute shader provides memory sharing and thread synchronization features to allow more effective parallel programming methods."

http://msdn.microsoft.com/en-us/library/windows/desktop/ff476331(v=vs.85).aspx

When you use DirectCompute in games via GPGPU, Stream Processors need a huge amount of memory bandwidth to feed them. Wii U has just 12.8 GB/sec here, which already makes it useless for this task. Secondly, the GPU architecture has to be designed efficiently for Compute, or otherwise the workflow that's being dispatched to the Stream Processors is not efficient, or relies on sequential wavefronts rather than parallel ones. In Graphics Core Next, there are 2 dedicated Asynchronous Command Engines that allow the Stream Processors to work on parallel workloads, because these 2 command engines decide on resource allocation, context switching and task priority. GCN can even execute some tasks out of order. There is no such thing in anything before the HD7000 series, on either the AMD or NV side. That makes every AMD GPU prior to GCN (HD7000 series) unsuitable for GPGPU in games (they are suitable for single precision and double precision scientific work, but not GPGPU in games).

Want proof?

Dirt Showdown is one of the few newer games that uses DirectCompute / Compute Shader extensively and everything that's not GCN falls apart:

Therefore, Wii U will also be useless for GPGPU in games.

TL;DR:

1) Wii U GPU's 40nm die size at TSMC, eaten up by eDRAM, means no R500-600-700 GPU will ever beat HD4770 with a 150mm2 die

2) Gimped memory bandwidth to 12.8GB/sec is a severe limitation

3) Power consumption for the entire console is less than HD4770's by itself

4) GPU clocks are down from 750mhz of HD4770 to 550mhz

5) R700 had a tessellation unit, but it was worthless. You need HD6900 series or GCN to do tessellation in games on the AMD side.

6) The GPU inside Wii U is too weak in terms of # of functional Stream Processors (even if it was a full HD4770) or architecture to use GPGPU to accelerate graphical effects in next generation games.

==========================

Having said all that, I still think Wii U's best looking games will look better than PS360's after Wii U builds up a 5+ year gaming library.