| Pemalite said: We already have texture compression ratios of 36:1 which beats the Neural texture compression. |

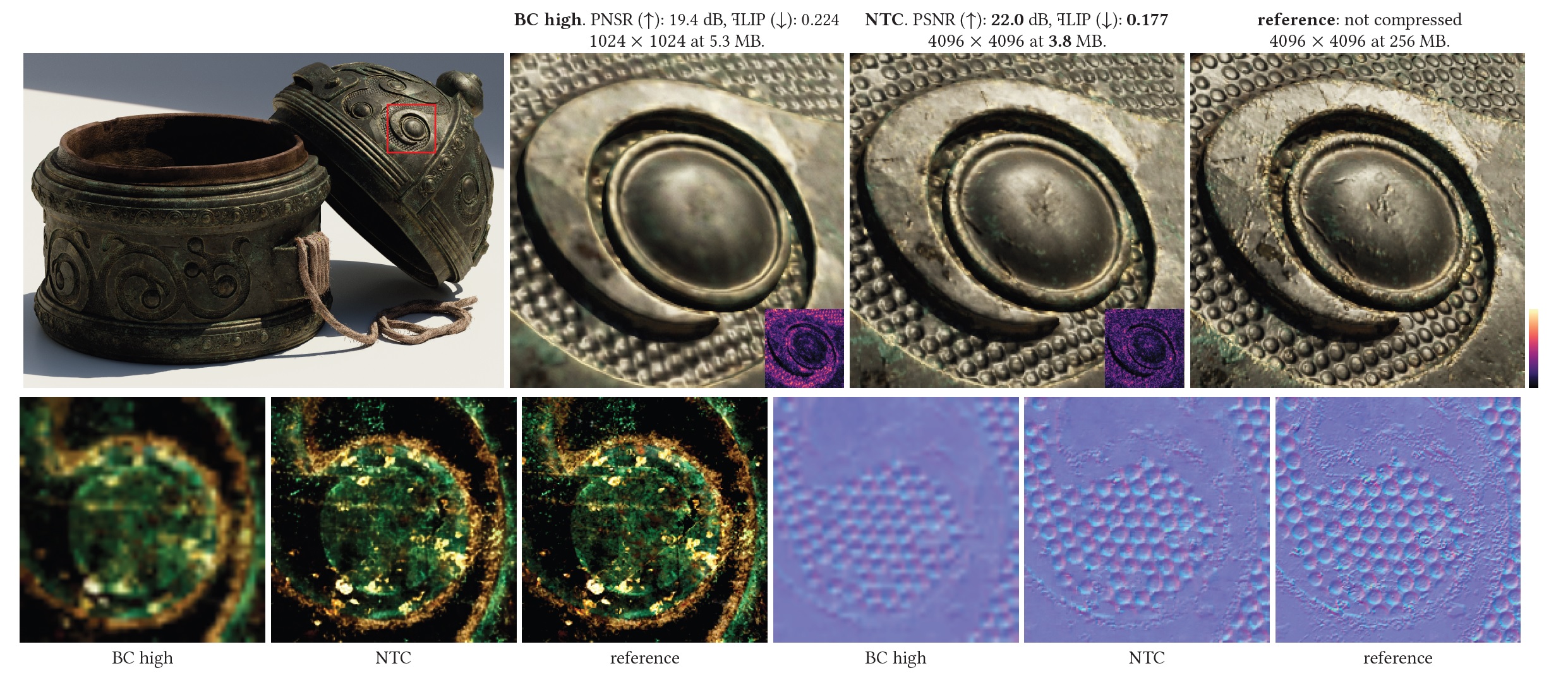

The big advantage of neural compression is that it retains quality very well. An example in the paper shows NTC doing well against a typical block compression method (BC7) in a memory-matched comparison (memory size held roughly constant). NTC retains much of the detail of the 4096 x 4096 uncompressed image, versus 1024 x 1024 for BC7, while using about 70% of the space of the BC method. The compression ratio here is 256 MB : 3.8 MB ≈ 67:1. In other words, NTC stores 16 times as many texels as BC7 while using less space.
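For anyone who wants to check the arithmetic, here is a quick sketch in Python. The 256 MB, 3.8 MB, 70%, and 36:1 figures are the ones quoted in this thread; the BC size is not quoted directly, so the value below is only what the "70% of the space" figure implies, and the function names are mine.

```python
import math

# Figures quoted in the thread (sizes in MB).
UNCOMPRESSED_MB = 256.0   # 4096 x 4096 uncompressed texture set
NTC_MB = 3.8              # neural texture compression result
NTC_SHARE_OF_BC = 0.70    # "70% of the space of the BC method"
CLAIMED_BC_RATIO = 36.0   # the 36:1 figure from the quoted post


def compression_ratio(original_mb: float, compressed_mb: float) -> float:
    """Return how many times smaller the compressed data is."""
    return original_mb / compressed_mb


def texel_ratio(side_a: int, side_b: int) -> float:
    """Return the texel-count ratio of two square textures."""
    return (side_a * side_a) / (side_b * side_b)


ntc_ratio = compression_ratio(UNCOMPRESSED_MB, NTC_MB)
texels = texel_ratio(4096, 1024)

# Each extra mip level doubles both sides, i.e. quadruples the texel count.
extra_mip_levels = math.log2(texels) / 2

# Assumption: BC size back-calculated from the 70% figure, not a quoted number.
implied_bc_mb = NTC_MB / NTC_SHARE_OF_BC

print(f"NTC ratio: {ntc_ratio:.1f}:1 (vs claimed {CLAIMED_BC_RATIO:.0f}:1)")
print(f"Texel ratio: {texels:.0f}x, extra mip levels: {extra_mip_levels:.0f}")
print(f"Implied BC size: {implied_bc_mb:.1f} MB")
```

The 16x texel figure and the "two additional levels of detail" in the paper quote are the same statement: two mip levels up means 4 x 4 = 16 times the texels.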

From the paper

|

Using this approach we enable low-bitrate compression, unlocking two additional levels of detail (or 16× more texels) with similar [...]

Our main contributions are:

• A novel approach to texture compression that exploits redundancies spatially, across mipmap levels, and across different material [...]

Our method can replace GPU texture compression techniques, such [...] It is a common industry practice to use [...] |

Of course the trade-off, and likely the reason it doesn't have a cleanly packaged product implementation yet, is the inference compute cost compared to BC methods. Nvidia has been steadily improving this over the years, though, getting the frame-time penalty lower with each iteration.

I agree though, full neural rendering with mostly generated frames is the game changer. Though advancements like this are stepping stones to that, more or less.

Last edited by sc94597 - 1 day ago