The short answer is "no."

Believe it or not, things in America aren't quite what they seem. Many people living here in the USA don't know much about their own country or about how their own beliefs are classified. Many are simply raised to demonize certain beliefs while praising others, without really knowing what each one entails. The USA contains many kinds of people, and calling any one of them the "American" way is, in fact, not how America became what most people like about it.

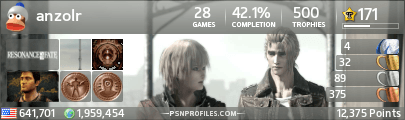
