Wyrdness said:

|

I already backed up my argument a few pages ago, pro; go and look harder, fraud. The Wii U's main memory bandwidth is 12.8 GB/s, so deal with it!

http://www.notenoughshaders.com/2013/01/17/wiiu-memory-story/

F0X said:

|

A Treyarch dev on the official forums said it was harder to work with IW's code than Treyarch's.

well, got too deep into the bandwidth stuff

as for the textures, well, i have no proof, but it is interesting to note that another feature from the specs of the SDK for an alpha Wii U kit has now been claimed to be supported

first it was multi-threaded rendering in Project CARS, then 1080p in a single pass, now it's the texture sizes

http://www.nintengen.com/2012/07/wii-u-confirmed-specs-from-sdk-gpu-info.html

"

Graphics and Video

Modern unified shader architecture.

32MB high-bandwidth eDRAM, supports 720p 4x MSAA or 1080p rendering in a single pass.

HDMI and component video outputs.

Features

Unified shader architecture executes vertex, geometry, and pixel shaders

Multi-sample anti-aliasing (2, 4, or 8 samples per pixel)

Read from multi-sample surfaces in the shader

128-bit floating point HDR texture filtering

High resolution texture support (up to 8192 x 8192)

Indexed cube map arrays

8 render targets

Independent blend modes per render target

Pixel coverage sample masking

Hierarchical Z/stencil buffer

Early Z test and Fast Z Clear

Lossless Z & stencil compression

2x/4x/8x/16x high quality adaptive anisotropic filtering modes

sRGB filtering (gamma/degamma)

Tessellation unit

Stream out support

Compute shader support

"

F0X said:

|

Relax I'm only trying to stir a couple of peas ... I mean people this thread if you know what I mean.

| megafenix said: more poor excuses and bullshit from this supposedly tech guy, selecting 1024 bits over 8192 or even 4096 bits when xbox used the 4096 bits almost a decade ago |

What? Are you talking memory bits? Or something more internal?

Because the original Xbox had a 128-bit memory bus with 6.4GB/s of bandwidth.

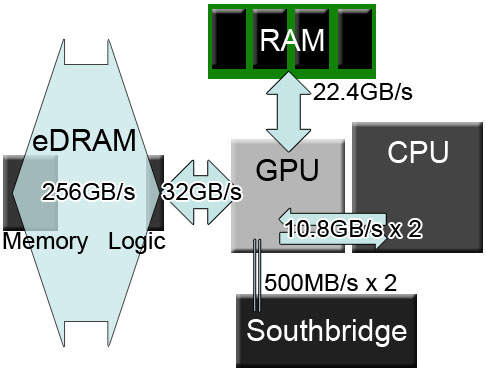

The Xbox 360, however, had a 128-bit memory bus with 22.4GB/s.

Where the Xbox 360 deviates is with the eDRAM.

It has 256GB/s of bandwidth inside the eDRAM, which is actually 1024-bit @ 2GHz = 256GB/s.

However, that's not interconnect bandwidth to the GPU or system memory; the bandwidth between the eDRAM and the GPU is 32GB/s.
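The figures above all follow from the same arithmetic: peak bandwidth is bus width in bytes times transfers per second. Here is a minimal sketch of that calculation. The function name `peak_bandwidth_gb_s` is made up for illustration, and the 400 MT/s and 1400 MT/s main-memory transfer rates are assumptions chosen to match the totals quoted in this post; only the 1024-bit @ 2GHz eDRAM figure is stated directly above.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Peak theoretical bandwidth in GB/s, taking 1 GB as 1e9 bytes."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


# Transfer rates for the two main-memory entries are assumptions
# (not stated in the thread); they are picked to reproduce the quoted totals.
figures = {
    "Original Xbox main memory (128-bit, assumed 400 MT/s)": peak_bandwidth_gb_s(128, 400e6),
    "Xbox 360 main memory (128-bit, assumed 1400 MT/s)": peak_bandwidth_gb_s(128, 1400e6),
    "Xbox 360 eDRAM internal (1024-bit @ 2 GHz)": peak_bandwidth_gb_s(1024, 2e9),
}

for name, gb_s in figures.items():
    print(f"{name}: {gb_s:.1f} GB/s")
```

These are theoretical peaks only. Real sustained bandwidth is lower, and as noted above, a wide internal bus says nothing about the width of the link between chips.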

If you want a fair comparison, Sandy Bridge has an internal ring bus that's 436GB/s.

The Radeon 3850's Ring bus was also 1024 bit.

The Radeon 48xx series had a 2048bit internal hub bus.

So this is actually nothing new.

However, when you start talking interconnect, it's simply not going to happen; 4096-bit buses would drive up PCB/package complexity exponentially.

Basically, no part of the original Xbox or Xbox 360 had a 4096-bit bus, unless you know something I don't know from an architectural perspective?

--::{PC Gaming Master Race}::--

curl-6 said:

A Treyarch dev on the official forums said it was harder to work with IW's code than Treyarch's. |

That's extremely strange, since the Wii U is easier to code for than the PS360!

| fatslob-:O said: I already backed up my argument a few pages ago, pro; go and look harder, fraud. The Wii U's main memory bandwidth is 12.8 GB/s, so deal with it! http://www.notenoughshaders.com/2013/01/17/wiiu-memory-story/ |

Nope, it was shot down, Mr. Fraudulent tech expert. Try again; who knows, maybe you'll learn something again, like how you found out you know nothing about bandwidth. You can even call your aunt to sit through this one with you.

Pemalite said:

|

Gee I wonder who's the poor excuse for a tech guy right now ? /sarcasm *rollseyes*

I wonder if we can't take our leave now from these peas ... I mean people, pemalite ?

Wyrdness said:

|

Denial much ?

| fatslob-:O said: Denial much ? |

See, at least you're acknowledging where you're going wrong now. It's a start.