Pemalite said:

Jizz_Beard_thePirate said:

Those things take so few resources on modern hardware that the difference isn't even worth looking into. I haven't seen my 7800X3D get anywhere close to 100% CPU usage during games, even when paired with a 4090 and even though I run two 4K monitors with plenty of apps, browser tabs, etc. open at all times. I'm sure there is a difference, but it's negligible unless you are running CPU-heavy games such as the ones you mentioned. But if those are the main games you play, then yeah, get all the CPU cores you need, just as you would if you ran CPU-heavy applications.

But generally, for many gaming-related applications, the ones that take a lot of resources, such as streaming, will yield better results if you offload them to the GPU anyway. That is one of the reasons so many streamers use Nvidia specifically: its H.264 encoders are that good. And the thing with a game like Cyberpunk is that you will be heavily GPU-bound well before the benefits of CPU scaling come into play. Both of those scenarios will certainly benefit more from investing in a stronger GPU than a stronger CPU.

The funny thing about what's affordable and what's not is that, ever since the RTX 3000/RX 6000 generation, GPUs have been the one key component that has consistently seen awful prices, whether due to crypto, AI or other nonsense. CPUs have been pretty dang cheap and still are, with constant sales. RAM and SSDs have been super cheap for however many years and have only really gotten expensive now. And funnily enough, RAM prices will also affect GPU prices, since GPUs have VRAM. If anything, the smartest thing to do these days would be to get a GPU with as much VRAM and as many features as possible and hold onto it for as long as possible, since the competitive landscape for CPUs should keep them cheap for the foreseeable future. And when it comes time to sell that GPU, you will get more of a return, because GPU prices seem to get wrecked every generation by some nonsense.

|

With ray tracing, the CPU can make a massive difference, especially if the game is using path tracing or better... and especially if the game is using a bounding volume hierarchy (BVH), which requires a heavy level of threading.

Either way, people choose their CPUs based on a variety of factors, but there are definitely obvious benefits to having a more heavily threaded CPU in modern games as they are today, even if we ignore the future benefits.

And yes, streaming can be done on the GPU, but that isn't always the best option, as some games may use the encoder/decoder for their built-in cinematics, and the CPU (depending on settings) can often provide higher-quality output for Twitch streams.

|

Yes, ray tracing and path tracing can certainly impact CPU performance, but there are a few important things to point out...

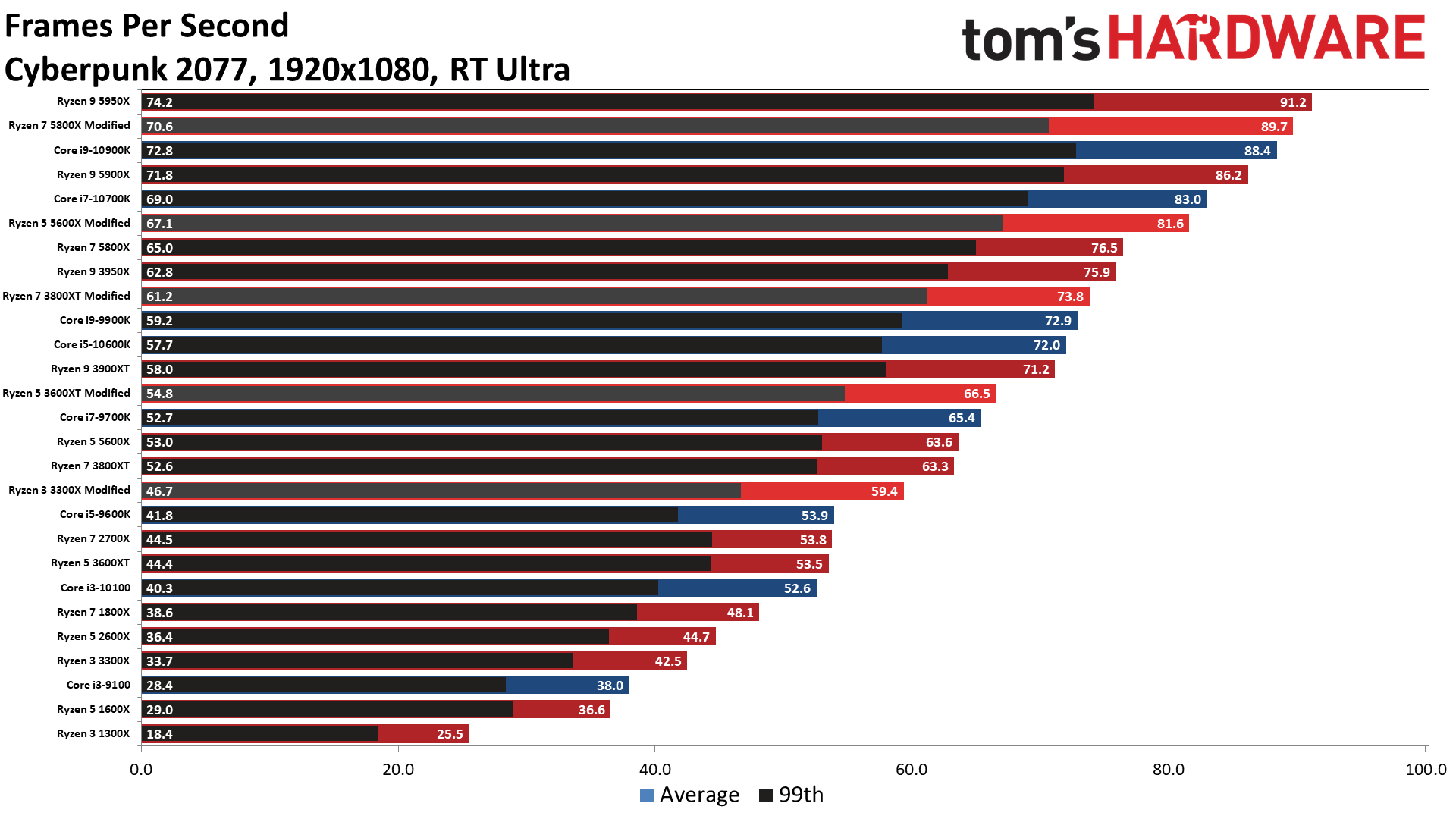

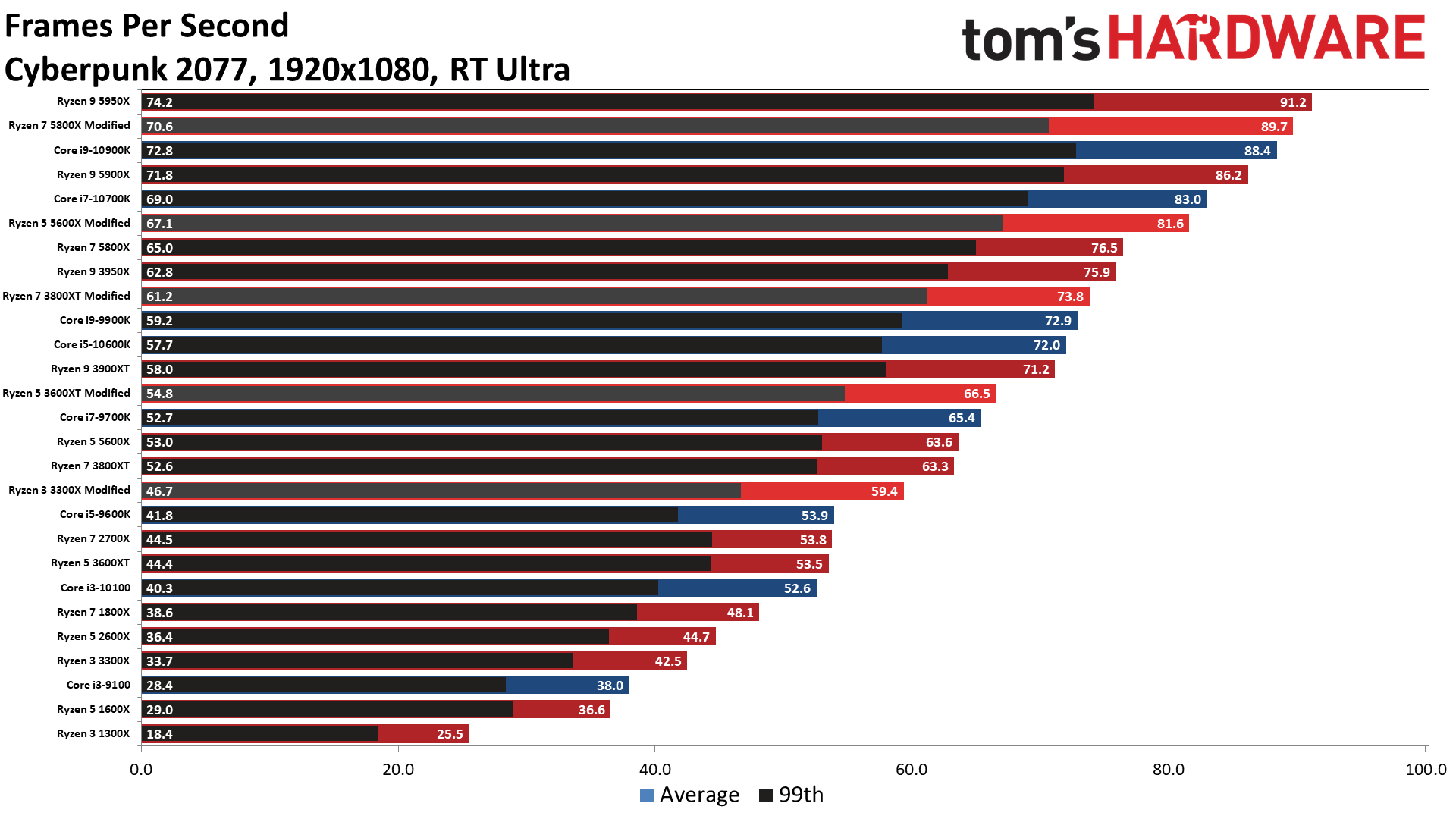

First, in the link you yourself provided, you can see that the difference between the 5800X "modified" and the 5950X is only 1.5 FPS when running Cyberpunk with ray tracing. The only reason the 5800X is labeled "modified" is that, at the time, there was a known bug with Ryzen and Cyberpunk where the game didn't use all of the threads on 6- and 8-core CPUs. So, according to the article, Tom's Hardware applied a fix so that the chart would show the true scaling, which really isn't much.

Second, if you look at more modern tests with modern CPUs, such as the 7700X vs the 7950X, in games like Alan Wake 2, you can see that 8 vs 16 cores really doesn't make much of a difference. In fact, thanks to its V-Cache, the 5800X3D is able to get more frames than a 16-core CPU like the 5950X. I posted the 720p results to rule out any potential GPU bottleneck, but they also have 1080p tests:

https://www.techpowerup.com/review/amd-ryzen-7-9800x3d/17.html

And third, let's say that instead of getting a 5950X, which had an MSRP of $800, someone got a 5800X, which had an MSRP of $450, and put the $350 difference towards a GPU on top of a fixed GPU budget of $300, since that is basically the barrier to entry. So instead of getting stuck with a 3060 or 6600, they could have gotten a 6800 XT ($650 MSRP) or, for about $50 more, a 3080 ($700 MSRP).

So basically, a person could have gotten around a 50% increase in gaming performance by putting that money towards a GPU instead of doubling the cores, and gotten one hell of a return if they sold it at the right time. Granted, crypto shat on everyone 3 months after those GPUs launched, but you know, in theory and all that. You can apply the same logic to modern CPUs too. The 9950X's MSRP is $650 and the 9700X's is $350, a $300 difference. The 9070 XT ($600) is $250 more expensive than the 9060 XT 16GB ($350) and gives around a 50% increase in gaming performance.
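
To make the budget trade-off concrete, here is a small Python sketch that redoes the arithmetic using only the MSRPs quoted in this post. The helper names `freed_budget` and `covers_gpu_step_up` are hypothetical, made up for illustration, and the $300 starting GPU budget is the post's own assumption. The ~50% performance figures are not modeled; this only checks whether the CPU savings pay for the GPU step-up.

```python
# A minimal sketch of the CPU-vs-GPU budget arithmetic from the post.
# All prices are the MSRPs quoted in the post (USD); street prices will differ.
# freed_budget and covers_gpu_step_up are hypothetical helpers for illustration.


def freed_budget(expensive_cpu: int, cheaper_cpu: int) -> int:
    """Money saved by buying the cheaper CPU instead of the higher core-count one."""
    return expensive_cpu - cheaper_cpu


def covers_gpu_step_up(savings: int, base_gpu: int, better_gpu: int) -> tuple[bool, int]:
    """Return (do the savings cover the GPU price gap?, leftover; negative means a shortfall)."""
    gap = better_gpu - base_gpu
    return savings >= gap, savings - gap


# Zen 3 era: 5950X ($800) vs 5800X ($450), on top of an assumed $300 base GPU budget.
zen3_savings = freed_budget(800, 450)  # 350
zen3_gpu_budget = 300 + zen3_savings  # 650
print(f"Zen 3: saved ${zen3_savings}, GPU budget ${zen3_gpu_budget}")
for name, price in {"RX 6800 XT": 650, "RTX 3080": 700}.items():
    fits, left = covers_gpu_step_up(zen3_savings, 300, price)
    print(f"  {name} (${price}): fits={fits}, leftover={left}")

# Zen 5 era: 9950X ($650) vs 9700X ($350); GPU step-up 9060 XT 16GB ($350) -> 9070 XT ($600).
zen5_savings = freed_budget(650, 350)  # 300
fits, left = covers_gpu_step_up(zen5_savings, 350, 600)
print(f"Zen 5: saved ${zen5_savings}, 9070 XT step-up covered={fits}, leftover={left}")
```

Run as written, it shows that the $350 saved by going with a 5800X lines up exactly with a 6800 XT, while a 3080 would need about $50 more. In the Zen 5 case, the $300 saved on a 9700X covers the $250 step from a 9060 XT 16GB to a 9070 XT with $50 to spare.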

Keep in mind, I am not saying it's wrong to prioritize a more expensive CPU over the GPU. Everyone's use case is different, as you said. But for general gaming, I'd always recommend putting more money towards the GPU than the CPU.

Last edited by Jizz_Beard_thePirate on 21 December 2025