> Bofferbrauer2 said:
> Deep dive into the RDNA chip: The shaders are the most interesting part, as they look a bit like a mix of Hyper-Threading and the way Bulldozer worked. The chip visibly has 64 CU pairs, but resources are shared between two of them, so the final count of active CUs is just 64.
>
> Edit: AMD just reorganized the ALUs from one FP and one integer ALU to one FP ALU and one dual-function ALU, which is why they look similar now. Nvidia made that change with Ampere, after adding the integer ALU just before with Turing. This is especially useful when lots of pixels need calculating, so the higher the resolution, the bigger its advantage. Conversely, as the resolution drops, that advantage vanishes, which is also why RDNA 2 and 3 were stronger at lower resolutions.
>
> Also interesting is that AMD axed the L1 cache and instead doubled the size of the L2 cache, and how AMD found space on the chip for the Infinity Cache, which makes the die look asymmetric.
>
> Finally, he talks about what a 96 CU chip with 3GB GDDR7 modules could look like: performance between the 4090 and 5090 while consuming only ~450 W and measuring around 550 mm², compared to 750 mm² for the 5090, meaning three 8-pin connectors could suffice for such a card.

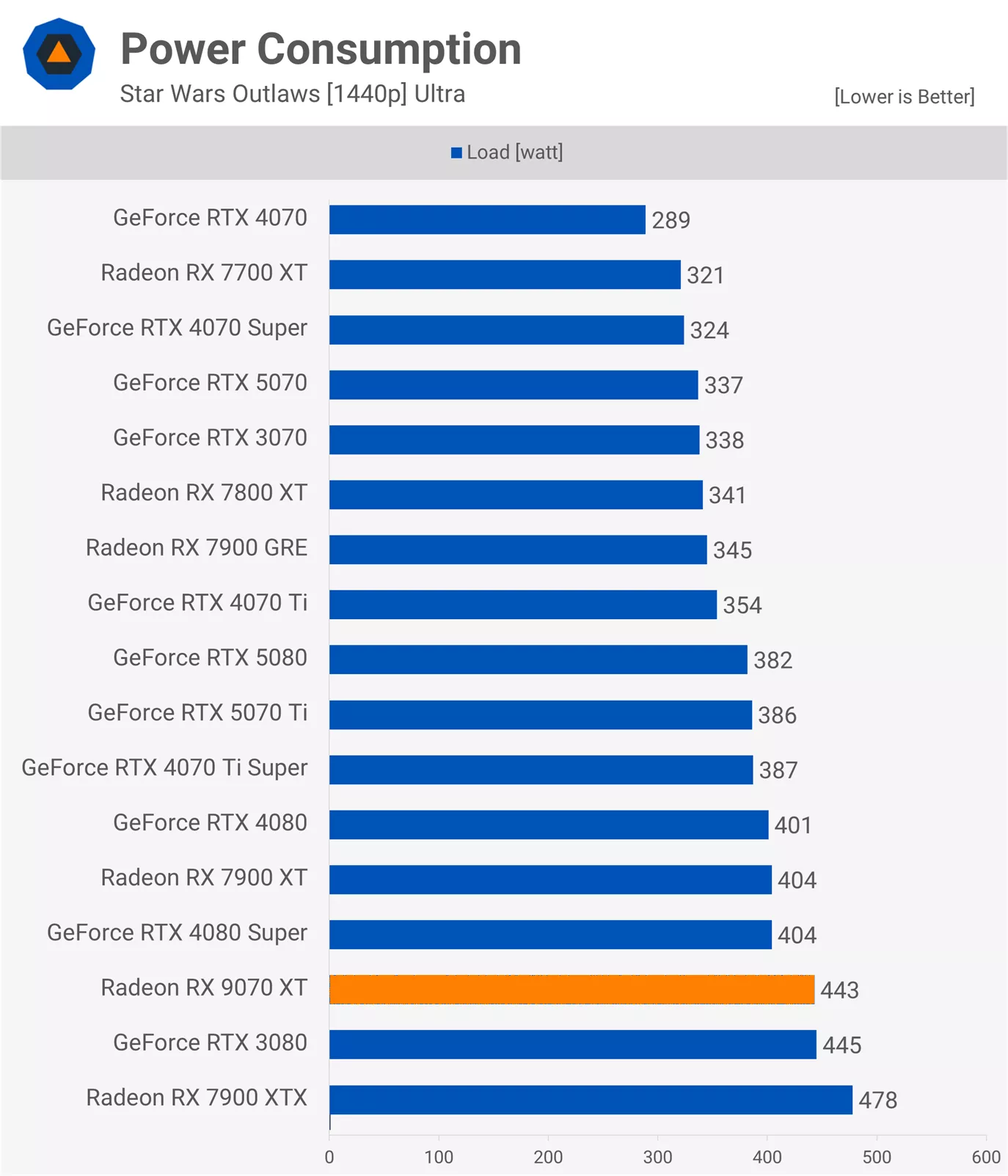

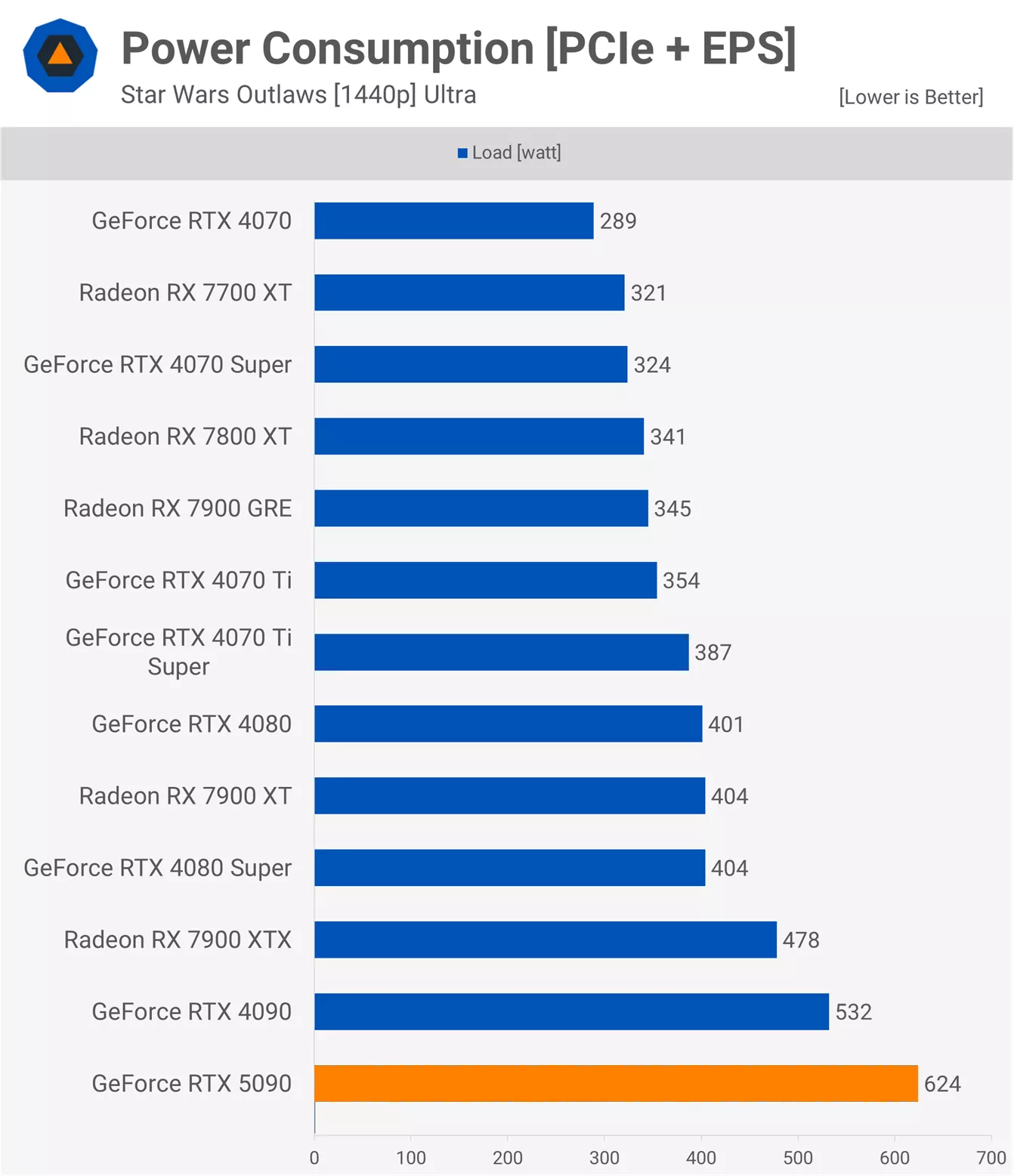
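A quick sanity check on the "three 8-pin connectors" part of that claim. This is a back-of-envelope sketch, assuming the usual PCIe CEM limits of 150 W per 8-pin connector and 75 W from the x16 slot; the ~450 W, 550 mm² and 750 mm² figures are the ones quoted above, not measurements.

```python
# Back-of-envelope power/area check for the hypothetical 96 CU card.
# Assumed in-spec limits (PCIe CEM): 150 W per 8-pin connector, 75 W from the x16 slot.
EIGHT_PIN_W = 150
SLOT_W = 75


def board_power_budget(num_8pin: int, slot_w: int = SLOT_W) -> int:
    """Maximum in-spec board power for a card fed by num_8pin 8-pin connectors plus the slot."""
    return num_8pin * EIGHT_PIN_W + slot_w


def area_ratio(die_mm2: float, reference_mm2: float) -> float:
    """Die area relative to a reference die (e.g. the 5090's ~750 mm²)."""
    return die_mm2 / reference_mm2


if __name__ == "__main__":
    for n in (2, 3):
        budget = board_power_budget(n)
        # Does a ~450 W card fit within the in-spec budget for n connectors?
        print(f"{n}x 8-pin + slot: {budget} W, fits ~450 W: {budget >= 450}")
    print(f"die area vs 5090: {area_ratio(550, 750):.0%}")
```

With two connectors the in-spec budget is 375 W, which is below ~450 W, so three (525 W) is the smallest count that covers the quoted figure with some headroom.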

I haven't watched the video, but I'm not sure I believe those power figures, tbh. The 9070 XT draws much more power than the 5070 Ti and 4080 despite having slightly less die area, and it is slower than both. So a card drawing around 450 W while being faster than a 4090? I have my doubts.

From Hardware Unboxed/Techspot:

https://www.techspot.com/review/2961-amd-radeon-9070-xt/

And remember, the 7900 XTX by itself isn't very far off the 4090's power consumption.

https://www.techspot.com/review/2944-nvidia-geforce-rtx-5090/
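For what it's worth, the ~450 W number is roughly what you get by naively scaling the 9070 XT by CU count. This sketch assumes AMD's 304 W reference total board power for the 9070 XT and that power scales linearly with CU count at the same clocks and voltage, which real chips don't do exactly. It says nothing about performance, which is the part I doubt: the 9070 XT already needs more power than the 5070 Ti and 4080 while being slower.

```python
# Naive CU-count scaling of board power (assumption: linear in CU count,
# same clocks/voltage, memory and uncore power scaling the same way).
RX_9070_XT_CUS = 64
RX_9070_XT_TBP_W = 304  # AMD reference total board power for the RX 9070 XT


def naive_scaled_power(target_cus: int,
                       base_cus: int = RX_9070_XT_CUS,
                       base_power_w: float = RX_9070_XT_TBP_W) -> float:
    """Board power estimate if power grew proportionally with CU count."""
    return base_power_w * target_cus / base_cus


if __name__ == "__main__":
    print(naive_scaled_power(96))
```

So a 96 CU part landing near 450 W is plausible on paper only if it keeps the 9070 XT's clocks; beating a 4090 at that power would additionally require much better performance per watt than the 9070 XT shows in the reviews above.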
