Bofferbrauer2 said:

Since the cutbacks are the same as on the XT version, they should have a bit less of an effect on this card. The card should need less bandwidth, and the PCIe x8 link should also be less of a bottleneck due to the lower overall performance. Still bad, just not as bad as for the 6600 XT. On a side note, something that surprised me when comparing this GPU to the 3060 was the TDP of the Nvidia card. 170W is an awful lot for a GPU in its performance range, even compared to previous generations of Nvidia GPUs. The 2060 Super, which in terms of market placement I'd only compare with the 3060 Ti (which has a TDP of 200W, by the way), consumed only 5W more, while the base 2060 consumed 10W less and the 1660 a whole 50W less. Nvidia seriously needs to rein in the power consumption of its cards. Right now they're on the fast road to Thermi II and could run into serious problems, especially in the mobile market, if AMD keeps improving performance per watt by 50% as announced.

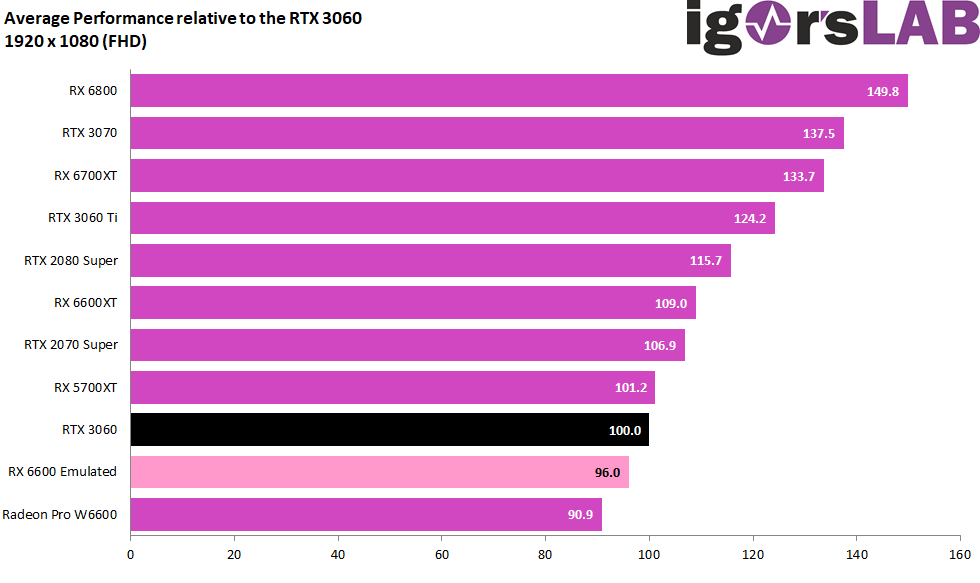

Sort of. The lower you go in these GPU ranges, the older the target buyers' systems tend to be. A 6600 XT isn't exactly a powerful card, yet switching to PCIe Gen 4 makes a 25% difference in performance in games like Doom Eternal. And then there's DirectStorage and similar tech coming, which will really tax the PCIe lanes. It may not be as big a deal as it is for the 6600 XT, but it's still not a good thing. And thanks to the small Infinity Cache and limited memory bandwidth, the higher the resolution, the worse the performance.

TDPs for both Nvidia and AMD are rumoured to go up next gen, but much more so for Nvidia, probably because Lovelace is monolithic while RDNA 3 is MCM. Personally, for desktop, it doesn't really matter imo. People used to harp on Vega and GCN for drawing a lot of power, but that was because those cards didn't deliver the performance to justify it. If Lovelace keeps delivering on performance while holding significant advantages in ray tracing, I don't think most people will care. But if RDNA 3 can beat Lovelace in raster and especially in ray tracing, then people will start harping on Nvidia for its power consumption on top of everything else.

Where power consumption will matter most, however, is in laptops and handhelds. Last generation, a 2080 Max-P laptop was 10-15% slower than the desktop GPU. This generation, a 3080 laptop is 40+% slower than the desktop version because of its TDP. I was hoping this is where RDNA 2 would really showcase its advantage, but we've seen that wasn't the case. Hopefully next gen, RDNA 3 will really bring it to Lovelace. Intel will also be interesting in the laptop space, but we'll see.

Edited for clarity.

Last edited by Jizz_Beard_thePirate - on 23 August 2021
