shikamaru317 said:

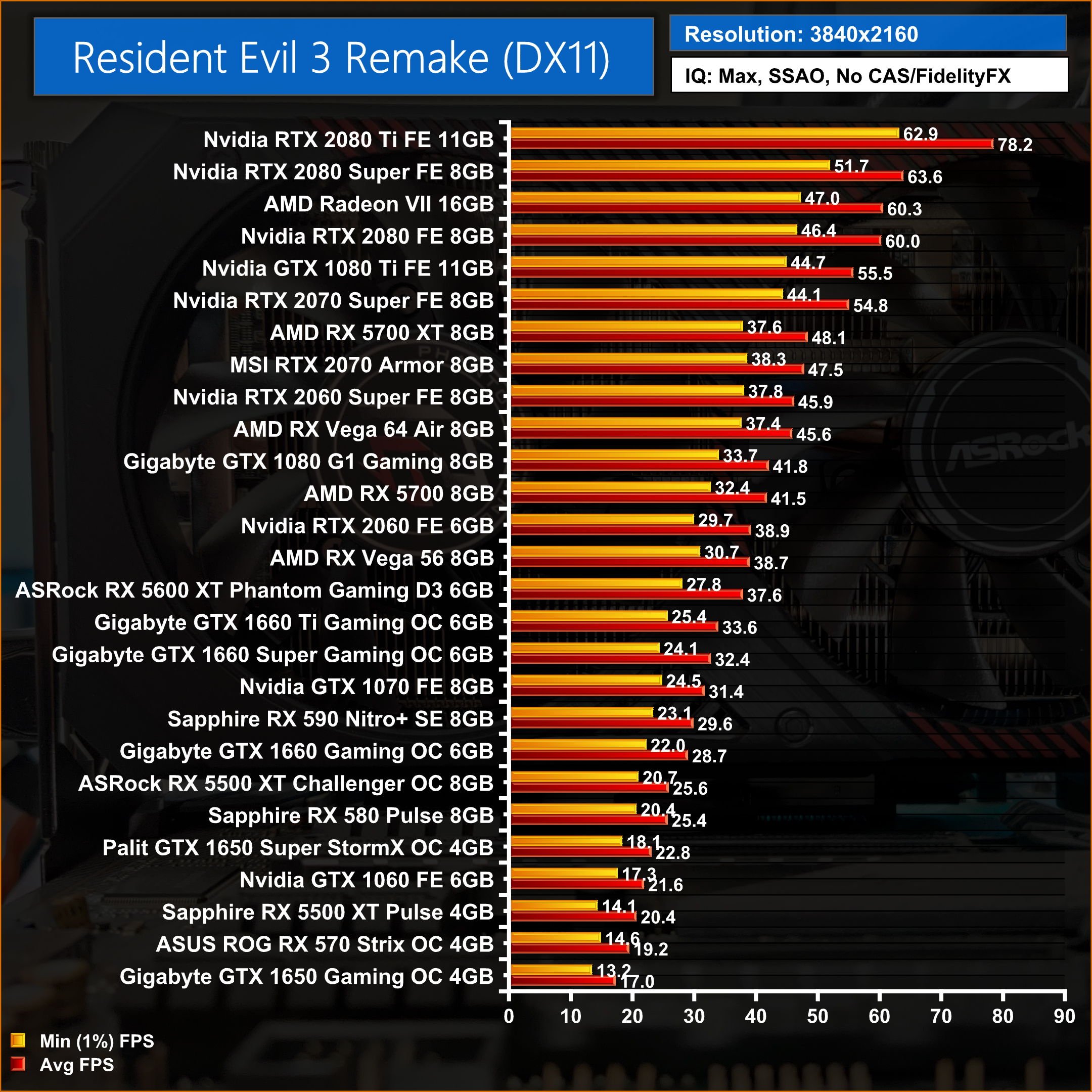

Here's where that guy's statement about texture streaming falls apart for me. He claims that games struggle to utilize lots of shaders concurrently, but I'm not seeing any evidence of that when looking at PC benchmarks. XSX has 3328 enabled shader cores running at 1825 MHz. Compare that to Nvidia's GeForce 2080 Ti, which has a whopping 4352 shaders, more than 1000 more than XSX has, running at a 1350 MHz base clock and a 1545 MHz boost clock. That comes out to 12.1 TFLOPS for XSX and 11.75 TFLOPS base/13.4 TFLOPS boost for the 2080 Ti. Even though the 2080 Ti has 1000 more shaders than XSX, games don't seem to have any trouble utilizing those shaders. Here we have a benchmark showing that the 2080 Ti has an 11 fps improvement over the 2080 Super, which, much like PS5, has fewer shaders running at a higher clock speed: 3072 shaders running at 1650 MHz base, 1815 MHz boost, which comes out to 10.1 TFLOPS base/11.1 TFLOPS boost.
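For anyone who wants to check the math, here is a rough sketch of where those TFLOPS numbers come from. It assumes the usual peak-throughput estimate of 2 floating-point operations per shader per clock (one fused multiply-add); the function name `peak_tflops` and the table layout are made up for illustration. This is theoretical peak only and says nothing about how well a game actually keeps the shaders busy.

```python
def peak_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumption: each shader retires one fused multiply-add per clock,
    counted as 2 floating-point operations.
    """
    flops_per_clock = shaders * 2
    return flops_per_clock * clock_mhz * 1e6 / 1e12


# Shader counts and clocks (MHz) as quoted in the post above.
gpus = {
    "XSX": (3328, 1825),
    "2080 Ti (base)": (4352, 1350),
    "2080 Ti (boost)": (4352, 1545),
    "2080 Super (base)": (3072, 1650),
    "2080 Super (boost)": (3072, 1815),
}

for name, (shaders, clock_mhz) in gpus.items():
    print(f"{name}: {peak_tflops(shaders, clock_mhz):.2f} TFLOPS")
```

The results land within rounding of the figures quoted above, so the comparison rests on the same shader-count-times-clock estimate for every chip.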

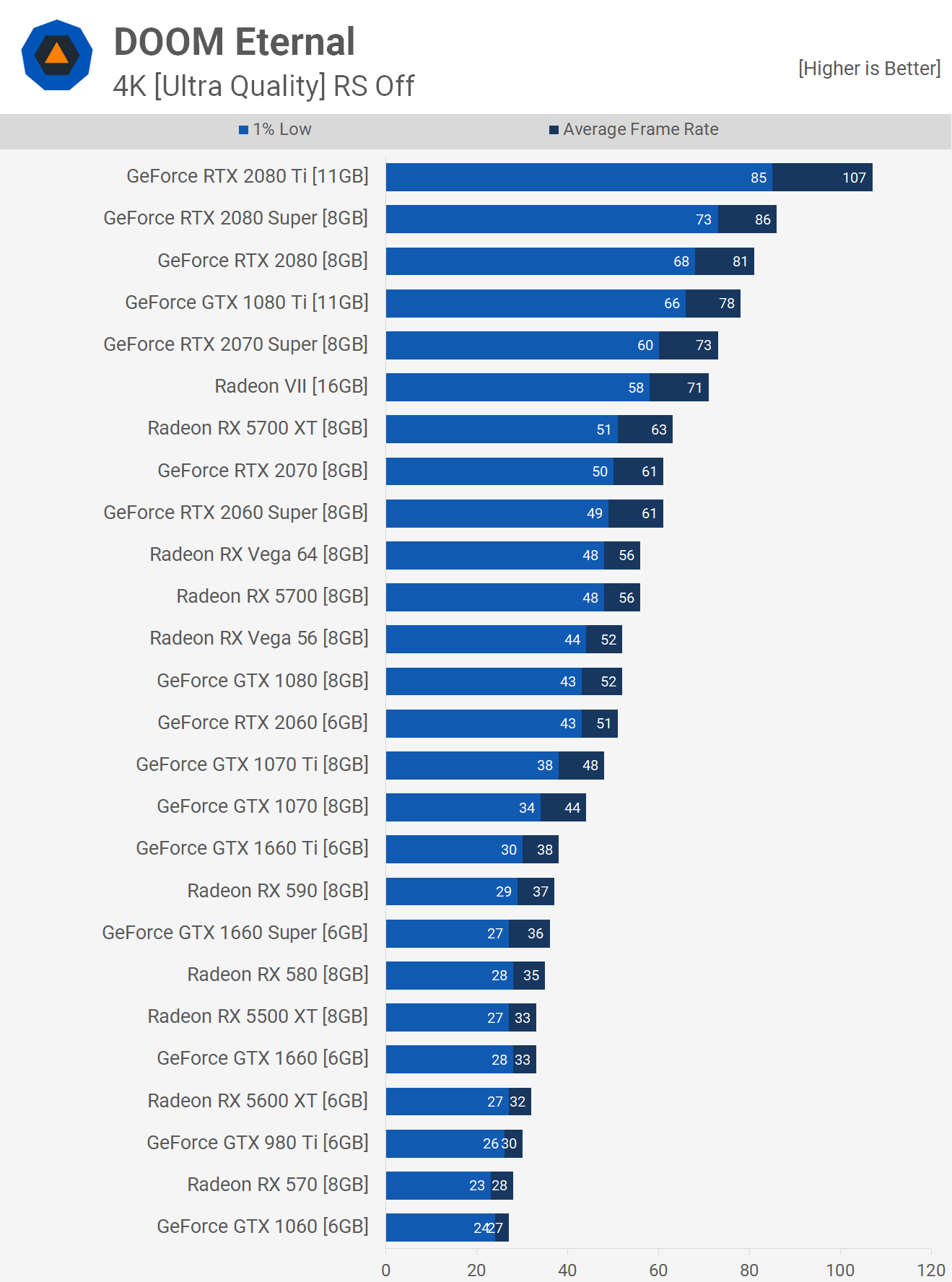

Here we have another benchmark for a different game, showing a 12 fps gap in minimum framerates between the 2080 Super and the 2080 Ti:

It seems to me that games don't struggle with shader utilization like he claims, considering that the 2080 Ti can make good use of 1000 more shaders than XSX has.

It's as if, when you change only one part of a system and keep everything else the same, the results reflect only the part that was switched. Who would have thought. Now I'm wondering what would happen with a different combination of parts in a system, like proprietary parts that are meant to work in unison in a closed system.

It takes genuine talent to see greatness in yourself despite your absence of genuine talent.