| drkohler said: a) How do you know? |

Because of the hardware reveals.

And unlike console gamers... I have been playing around with SSDs for over a decade.

| drkohler said: b) "it's still stupidly fast however." What is stupidly faster? The ssd itself is half the speed of the PS4's. No decompressor can make that go away. So in the end, picking some data of whatever kind from the ssd is faster on the PS5, all the time. Or are you once again throwing the Teraflops of the cus around in an argument about the sdd technology inveolved? |

I think you meant PS5, not PS4.

Either way, an Xbox Series X SSD that is half the speed of the PS5's is still, to all intents and purposes, stupidly fast.

The PS5's is just twice as fast.
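For a rough sense of scale, here is the arithmetic with the publicly quoted raw SSD figures (2.4 GB/s for the Xbox Series X, 5.5 GB/s for the PS5), which puts the raw gap at roughly 2.3x. The compression ratios in the loop are hypothetical placeholders, and the sketch assumes the hardware decompressor is never the bottleneck.

```python
# Publicly quoted raw (uncompressed) SSD throughput in GB/s.
XSX_RAW_GBPS = 2.4
PS5_RAW_GBPS = 5.5


def effective_gbps(raw_gbps: float, compression_ratio: float) -> float:
    """Rate at which decompressed data arrives, assuming the decompressor keeps up.

    compression_ratio is uncompressed size / compressed size (1.0 = incompressible).
    """
    if compression_ratio < 1.0:
        raise ValueError("data that grows under compression should be stored uncompressed")
    return raw_gbps * compression_ratio


print(f"raw gap: {PS5_RAW_GBPS / XSX_RAW_GBPS:.2f}x")  # raw gap: 2.29x

# Hypothetical ratios: if both consoles compress equally well, the gap stays the same.
for ratio in (1.0, 1.5, 2.0):
    xsx = effective_gbps(XSX_RAW_GBPS, ratio)
    ps5 = effective_gbps(PS5_RAW_GBPS, ratio)
    print(f"ratio {ratio}: XSX {xsx:.2f} GB/s, PS5 {ps5:.2f} GB/s, gap {ps5 / xsx:.2f}x")
```

The gap only narrows if the Xbox's data compresses better than the PS5's, which depends entirely on the formats involved.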

Let's not downplay anything here; let's be realistic about what both consoles offer.

There are some possible edge-case scenarios where the Xbox Series X could narrow the bandwidth gap by a few percentage points thanks to compression, but we will need to see the real-world implications of that... Because, as I have alluded to before (possibly even in another thread), many data formats are already compressed and thus don't gain much from being compressed again. (Sometimes it can have the opposite effect and increase sizes.)
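A quick way to see the "already compressed" problem on any PC, using Python's built-in zlib as a stand-in for whatever codecs the consoles actually use (this does not model those codecs). Seeded random bytes stand in for already-compressed assets, since well-compressed data looks close to random to a second compressor.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Original size divided by zlib output size; below 1.0 means the data grew."""
    return len(data) / len(zlib.compress(data, level=9))


size = 1 << 20  # 1 MiB
rng = random.Random(42)  # seeded so runs are repeatable
# High-entropy bytes: a stand-in for textures/audio/video that are already compressed.
already_compressed = rng.getrandbits(8 * size).to_bytes(size, "little")
# Low-entropy bytes: a stand-in for raw, repetitive data.
repetitive = b"vertex 0.0 1.0 0.0\n" * (size // 19)

print(f"repetitive data:         {compression_ratio(repetitive):.1f}x")
print(f"already-compressed data: {compression_ratio(already_compressed):.4f}x")  # just under 1.0
```

The high-entropy input comes out slightly larger than it went in, which is the "opposite effect" case.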

| drkohler said: c) Yes the XSX has a hardware compander too, apparently God's gift to mankind when it comes to texture decompression. What it does to other things is anyone's guess. The big question is: What does the XSX do once the data is decompressed? In his GDC talk, Cerny spends an awful lot of time trying to explain what the problems are once you have your data decompressed. Pieces of the solution are Sony property, so not in XSX hardware. At this time, we do not know if there is additional hardware in the XSX (not unlikely as MS engineers faced the same problems as Sony so the must have had ideas, too). Anything the PS5 backend does in hardware can always be done in software should MS have chosen that path. This software requires (some of the) Zen2 cores, obviously (and you better hope there is something that avoids flooding the cpu caches with data they absolutely don't need). |

I am not religious. You seem to be getting upset over the specifications of certain machines? Might be a good idea to take a step back?

Microsoft has proprietary compression technology similar to the PlayStation 5's, and the Xbox Series X also includes a hardware decompression block. That much we do know; it was in the reveal.

https://news.xbox.com/en-us/2020/03/16/xbox-series-x-tech/

Microsoft's decompression block completely removes the decompression burden from the Zen 2 cores.

| drkohler said: d) Because we have two arguments that are consistently brought up by people who consistently show they have essentially no clue about what the real problems are. The first argument is "The 2.23GHz is a boost clock". No it isn't. Not going any further there. The second argument is "The ssd is only to make load times disappear". No it isn't. That is an added bonus but is way short of what the whole hardware/software chain has to do. |

No. It is because people are enamored with their brand of choice and either cannot see where it potentially falls short or cannot offer constructive criticism... Or they are simply committing the logical fallacy of the hypothesis contrary to fact.

The 2.23GHz -is- a boost clock. Sony/Cerny specifically mentioned SmartShift. Unless you are calling Cerny a liar?

https://www.anandtech.com/show/15624/amd-details-renoir-the-ryzen-mobile-4000-series-7nm-apu-uncovered/4

And to quote Digital Foundry, which quoted Cerny:

"Rather than look at the actual temperature of the silicon die, we look at the activities that the GPU and CPU are performing and set the frequencies on that basis - which makes everything deterministic and repeatable," Cerny explains in his presentation. "While we're at it, we also use AMD's SmartShift technology and send any unused power from the CPU to the GPU so it can squeeze out a few more pixels."

https://www.eurogamer.net/articles/digitalfoundry-2020-playstation-5-specs-and-tech-that-deliver-sonys-next-gen-vision

It is shifting the power budget from one part of the chip to the other to boost clock rates. It's a boost clock.

If the PlayStation 5 cannot maintain a 2.23GHz GPU clock in conjunction with a 3.5GHz CPU clock whilst pegging the I/O, then by extension that 2.23GHz GPU clock is not the base clock. It is a boost clock; it is a best-case scenario.
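To illustrate the reasoning, here's a toy model of a fixed power budget shared between CPU and GPU. Every wattage is a made-up placeholder, and the cube-law link between power and clock (dynamic power scales with frequency times voltage squared, with voltage rising roughly alongside frequency) is a rule-of-thumb assumption. It is not Sony's actual algorithm, which per Cerny keys off workload activity rather than temperature.

```python
def gpu_clock_ghz(total_budget_w: float, cpu_draw_w: float,
                  gpu_power_at_max_w: float, gpu_max_ghz: float = 2.23) -> float:
    """Toy model: the GPU gets whatever power the CPU leaves unused.

    Assumes GPU power scales roughly with clock cubed (P ~ f * V^2, V ~ f),
    so clock scales with the cube root of the available power fraction.
    All wattages are hypothetical placeholders, not PS5 figures.
    """
    available_w = max(total_budget_w - cpu_draw_w, 0.0)
    fraction = min(available_w / gpu_power_at_max_w, 1.0)  # never exceed the cap
    return gpu_max_ghz * fraction ** (1.0 / 3.0)


# Hypothetical 200 W budget, with the GPU needing 150 W at its 2.23 GHz cap.
for cpu_w in (30, 50, 70):
    print(f"CPU drawing {cpu_w} W -> GPU at {gpu_clock_ghz(200, cpu_w, 150):.3f} GHz")
```

In this model the clock only drops below the cap once CPU demand eats into the GPU's share, which is why 2.23GHz reads as a ceiling rather than a floor.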

| drkohler said: The second argument is "The ssd is only to make load times disappear". No it isn't. That is an added bonus but is way short of what the whole hardware/software chain has to do. |

Pretty sure that is not my exact statement and you are taking it right out of context.

| drkohler said: e) Who? I'd assume each and every of Sony's first party studios will do. Those games will look at least as good as MS showpieces. Who will not? Small developers without the manpower. Bigger studios who don't care about the additional work required to bring their games up to Sony's in-house standards. No doubt those (mostly multiplat) games will look (a little? noticably?) better on the XSX thanks to the brute force advantage. Again there are a lot of what-ifs involved. If those developers are too careless, the PS5 will edge out because of the higher clock rates in every stage of the gpu. There simply is no telling without seeing the games. |

The Xbox Series X has the CPU, GPU and memory bandwidth advantages, so it is likely to show an advantage more often than not... Just like the Xbox One X compared to the PlayStation 4 Pro.
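The memory bandwidth part is simple arithmetic: peak GDDR6 bandwidth is bus width times per-pin data rate, divided by eight to get bytes. The bus widths and 14 Gbps data rate below are the publicly announced figures; note that Microsoft quotes 560 GB/s only for 10 GB of the Xbox Series X's memory, with the remaining 6 GB at 336 GB/s.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bits per second across the whole bus, converted to bytes."""
    return bus_width_bits * gbps_per_pin / 8


pools = {
    "Xbox Series X, 10 GB pool (320-bit)": peak_bandwidth_gbs(320, 14),  # 560 GB/s
    "Xbox Series X, 6 GB pool (192-bit)": peak_bandwidth_gbs(192, 14),   # 336 GB/s
    "PlayStation 5, 16 GB (256-bit)": peak_bandwidth_gbs(256, 14),       # 448 GB/s
}
for name, gbs in pools.items():
    print(f"{name}: {gbs:.0f} GB/s")
```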

Simpler titles that won't use 100% of either console's capabilities will look identical. That happens every console generation: there are base Xbox One and PlayStation 4 games with visual parity, right down to resolution and framerates.

Big AAA exclusives are another kettle of fish and could make things interesting... But by and large, if graphics is the most important aspect, the Xbox Series X holds the technical edge due to the sheer number of additional functional units baked into the chip design.

| drkohler said: When the ssd techology is used properly, we'll see that old game design ideas can finally be realised now (on either console). The times of brute forcing your way through a game might be over (or not, we'll see in a year or two or three). |

Brute forcing, and design tricks that get around hardware limitations, will continue to exist, because the SSD remains a limitation until it actually matches RAM bandwidth.
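To put "until it matches RAM bandwidth" into numbers, here is how much data each link can move per frame at an assumed 60 fps, using the raw SSD figures and peak RAM figures quoted publicly. Real-world rates will be lower on both sides.

```python
FPS = 60  # assumed target frame rate

links_gbs = {
    "Xbox Series X SSD (raw)": 2.4,
    "PS5 SSD (raw)": 5.5,
    "PS5 GDDR6": 448.0,
    "Xbox Series X GDDR6 (10 GB pool)": 560.0,
}


def mb_per_frame(gbs: float, fps: int = FPS) -> float:
    """Megabytes deliverable in one frame (1 GB = 1000 MB)."""
    return gbs * 1000 / fps


for name, gbs in links_gbs.items():
    print(f"{name:34} {mb_per_frame(gbs):8.1f} MB per frame")
```

Even the PS5's drive delivers only around 1/80th of its RAM bandwidth, so streaming still has to be planned around the SSD.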

Remember... We went from optical discs whose throughput could be measured in kilobytes per second to mechanical hard drives measured in megabytes per second, with an accompanying reduction in seek times... Did game design change massively? For the most part, not really... And we are now seeing a similar jump in storage capability, going from mechanical drives measured in megabytes per second to SSDs measured in gigabytes per second, with an equally dramatic decrease in seek times.
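The seek-time point matters as much as the headline throughput, because games do lots of small, scattered reads. A simple way to model it: each read costs a fixed access latency plus transfer time. The latency and throughput figures below are ballpark assumptions for a typical hard drive and an NVMe SSD, not measurements of either console.

```python
def effective_mbs(block_kb: float, access_ms: float, seq_mbs: float) -> float:
    """Throughput for back-to-back random reads of one block each.

    Each read pays a fixed access latency, then streams at the sequential rate.
    """
    block_mb = block_kb / 1000
    seconds_per_read = access_ms / 1000 + block_mb / seq_mbs
    return block_mb / seconds_per_read


# Assumed ballpark figures, not console measurements.
hdd = effective_mbs(block_kb=64, access_ms=10.0, seq_mbs=100)
ssd = effective_mbs(block_kb=64, access_ms=0.1, seq_mbs=2400)
print(f"HDD: {hdd:.1f} MB/s, SSD: {ssd:.1f} MB/s, ratio {ssd / hdd:.0f}x")
```

Under these assumptions the random-read gap (over 80x) is far larger than the sequential gap (24x), which is why the drop in seek times is the bigger change.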

People tend to gravitate towards games that they like... Developers then design games around that, which is why something like Battle Royale happened, and then every developer and its pet dog jumped onto the bandwagon to make their own variant.

Everyone copied Gears of War's "Horde mode" at one point as well.

That's not to say that SSDs won't provide benefits; far from it.

| Intrinsic said: GPU & Resolution |

I would not be making such a claim just yet.

The Xbox Series X is a chip with dramatically more functional units... Only in scenarios where the PS5 has the same number of ROPs, TMUs, geometry units and so forth will it be faster than the Xbox Series X, thanks to its higher clock rates... And those units are usually tied, to some degree, to the number of shader groupings.
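The "wide and slow vs narrow and fast" trade-off is easy to put into numbers. FP32 throughput is CUs × 64 lanes × 2 operations per fused multiply-add × clock, using the announced configurations (52 CUs at 1.825 GHz, 36 CUs at up to 2.23 GHz). The last line shows the per-unit clock advantage that any fixed-function block present in equal number on both chips would enjoy; whether such blocks are equal in number is an assumption, since those counts haven't been revealed.

```python
def fp32_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS: 64 lanes per CU, an FMA counted as 2 operations."""
    return compute_units * 64 * 2 * clock_ghz / 1000


xsx = fp32_tflops(52, 1.825)
ps5 = fp32_tflops(36, 2.23)  # 2.23 GHz is the cap, not a guaranteed sustained clock
print(f"XSX {xsx:.2f} TF, PS5 {ps5:.2f} TF")  # XSX 12.15 TF, PS5 10.28 TF

# Any unit the two GPUs have in equal number runs this much faster on PS5.
print(f"per-unit clock advantage: {2.23 / 1.825:.2f}x")  # per-unit clock advantage: 1.22x
```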

The Xbox Series X could have the advantage on the GPU side across the board... The point I am making is that until we get the complete spec sheet, we just don't know yet.

We do know the PS5's SSD is twice as fast as the Xbox Series X's... Which is what people are clinging to at the moment, as it's the only guaranteed superior metric.

| HollyGamer said: So, which computer would give the highest FPS in a game: one with a 2.0 teraflop processor in its GPU, or one with a 2.2 teraflop processor in its GPU? Now we need to look at different clock speeds, bus bandwidth, cache sizes, register counts, main and video RAM sizes, access latencies, core counts, northbridge implementation, firmwares, drivers, operating systems, graphics APIs, shader languages, compiler optimisations, overall engine architecture, data formats, and hundreds, maybe thousands of other factors. Each game team will utilise and tune the engine differently, constantly profiling and optimising based on conditions." |

Precisely; we do need to account for everything. You can have the same teraflops but half the performance if the rest of the system isn't up to snuff.
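One standard way to show "same teraflops, half the performance" is the roofline model (my choice of illustration, not something either console vendor has published for these machines): attainable throughput is the lower of peak compute and memory bandwidth × arithmetic intensity (FLOPs per byte of memory traffic). Both systems below are hypothetical.

```python
def attainable_tflops(peak_tflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Roofline model: capped by compute or by how fast memory can feed it."""
    memory_bound_tflops = bandwidth_gbs * flops_per_byte / 1000  # GFLOP/s -> TFLOP/s
    return min(peak_tflops, memory_bound_tflops)


# Two hypothetical GPUs with identical 10 TF peaks but different memory bandwidth.
for intensity in (10, 100):  # FLOPs per byte of memory traffic
    fast_mem = attainable_tflops(10.0, 448.0, intensity)
    slow_mem = attainable_tflops(10.0, 224.0, intensity)
    print(f"{intensity} FLOPs/byte: {fast_mem:.2f} TF vs {slow_mem:.2f} TF")
```

A bandwidth-hungry workload at 10 FLOPs per byte runs at half speed on the slower-memory system, while a compute-heavy one at 100 FLOPs per byte hits the same 10 TF ceiling on both.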

Only focusing on teraflops, or only focusing on the SSD, is extremely two-dimensional... And it does a disservice to the amount of engineering, research and development that Microsoft, Sony and AMD have put into these consumer electronic devices.

| LudicrousSpeed said: Barring shoddy optimization, XSX games should look better and run better. PS5 games should load a couple seconds faster. Not a large difference, though I want to see how devs use the variance in power on PS5. For all we know, the small difference on paper between 12 and 10.3 can become larger once PS5 is running demanding games or depending on how devs utilize the setup. |

It's more than just initial loading.

| Evilms said:

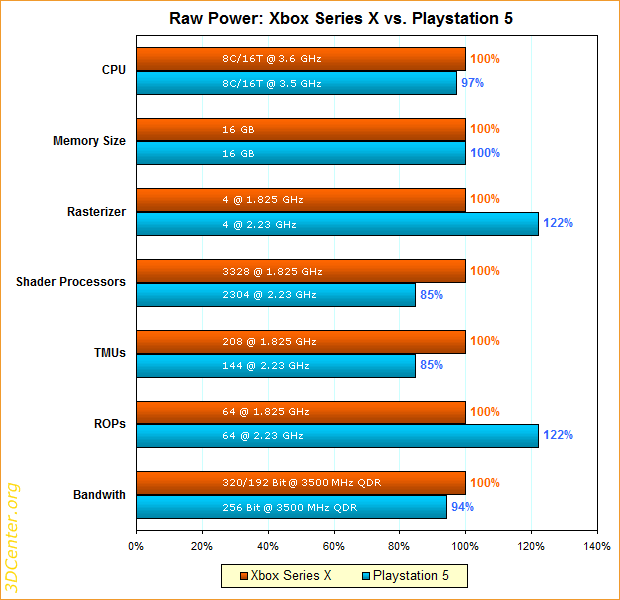

https://www.3dcenter.org/news/rohleistungsvergleich-xbox-series-x-vs-playstation-5 |

I'm pretty sure the TMU and ROP counts haven't been revealed yet; don't count your chickens before they hatch.

And rumor has it that the Xbox Series X could have 80 ROPs versus the PS5's 64...
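If those rumored counts held, peak pixel fill rate (ROPs × clock) would come out close, because the PS5's higher clock offsets some of the unit deficit. Both ROP counts are unconfirmed, the one-pixel-per-ROP-per-clock rate is a simplifying assumption, and 2.23 GHz is treated as the PS5's best case.

```python
def fill_rate_gpix(rops: int, clock_ghz: float) -> float:
    """Peak pixel fill rate in gigapixels/s, assuming one pixel per ROP per clock."""
    return rops * clock_ghz


xsx = fill_rate_gpix(80, 1.825)  # rumored ROP count, not confirmed
ps5 = fill_rate_gpix(64, 2.23)   # assumed ROP count; 2.23 GHz is the cap
print(f"XSX {xsx:.1f} Gpix/s vs PS5 {ps5:.1f} Gpix/s")  # XSX 146.0 Gpix/s vs PS5 142.7 Gpix/s
```

With 64 ROPs on both, the PS5's clock would put it ahead (116.8 vs 142.7 Gpix/s), so the 80 ROP rumor matters a lot for this metric.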

https://www.techpowerup.com/gpu-specs/xbox-series-x-gpu.c3482

In RDNA, AMD groups one rasterizer with every four render back ends. Obviously that can change with RDNA 2, but it's some food for thought.

https://www.amd.com/system/files/documents/rdna-whitepaper.pdf

Which means that we could be looking at 20 render back ends versus 16 (each render back end handles four ROPs), and therefore 5 rasterizers versus 4.

| sales2099 said: Maybe I’m not seeing that chart properly but where’s the GPU? You know the biggest advantage the Series X has on PS5? |

I think you are looking for the shader processors. The chart tries to take every aspect of the GPU into account rather than focusing purely on flops.

| drkohler said: My guess is at the early stages (5-7 years ago), AMD was still struggling with cu scaling (the more cus, the less efficient the eincrease in performance was), so he went fast and small instead of wide and slow. |

AMD has "claimed" (salt, grains, kittens and all that) that RDNA 2.0 offers 50% better performance per watt than RDNA 1... Which is the same jump we saw between Vega and RDNA 1.

Graphics tasks are highly parallel... AMD was struggling with CU scaling because GCN had intrinsic hardware limits; it was an architectural limitation in itself. We need to remember that when AMD debuted GCN we were working with 32 CUs. AMD then stalled as the company's profits plummeted and it had to make cutbacks everywhere in order not to go bankrupt, so it kept milking GCN for longer than anticipated to keep R&D and engineering costs as low as possible.

| DonFerrari said: Yes Sony were able to make the entire Smartshift, cooling solution, decide to control by frequency with fixed power consumption and everything else in a couple days since MS had revealed the specs? Or do we give then a couple months for when the rumours were more thrustworthy? The best you can have for "reactionary" is that Sony was expecting MS to go very high on CU count and have a high TF number and chose the cheaper route to put higher frequency, but that was decided like 2 years ago. |

Higher frequency isn't always cheaper.

The higher the frequency you go for, the more voltage you need to dump into the design... And one aspect of chip yields is that not every chip can hit a given clock frequency at a given voltage, due to leakage and so forth, which means the number of usable chips decreases and the cost per chip increases.
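Both halves of that argument can be sketched with textbook relations: dynamic power scales roughly with capacitance × voltage² × frequency, and cost per usable chip is wafer cost divided by the chips that actually bin at the target clock. The 10% clock/voltage bump, wafer cost, dies per wafer and bin yields below are hypothetical placeholders.

```python
def relative_dynamic_power(freq_scale: float, voltage_scale: float) -> float:
    """Dynamic power relative to baseline, using P ~ C * V^2 * f with C held fixed."""
    return freq_scale * voltage_scale ** 2


def cost_per_usable_chip(wafer_cost: float, dies_per_wafer: int, bin_yield: float) -> float:
    """bin_yield: fraction of dies that hit the target clock at the target voltage."""
    return wafer_cost / (dies_per_wafer * bin_yield)


# Hypothetical: +10% clock that needs +10% voltage.
print(f"power: {relative_dynamic_power(1.10, 1.10):.3f}x")  # power: 1.331x

# Hypothetical wafer: a higher clock target means fewer dies qualify.
for bin_yield in (0.9, 0.7, 0.5):
    cost = cost_per_usable_chip(10_000, 200, bin_yield)
    print(f"bin yield {bin_yield:.0%}: ${cost:,.2f} per usable chip")
```

A 10% clock bump costing a third more power, plus a shrinking pool of chips that can sustain it, is why higher frequency isn't automatically the cheap option.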

It's actually a careful balancing act of chip size versus chip frequency. If you can line up all the right cards... you can pull off an nVidia Pascal and drive up clock rates significantly. nVidia still had to spend a ton of transistors to reduce leakage and remove clock-rate-limiting bottlenecks from its design, but it paid off.
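The die-size side of that balancing act can be sketched with the simple Poisson yield model, where the fraction of defect-free dies is exp(-defect density × die area). Combining it with a bin yield for the clock target shows how a bigger, slower chip and a smaller, faster chip can end up close. The die areas, defect density and bin yields are hypothetical, and dies per wafer ignores edge losses.

```python
import math

WAFER_AREA_MM2 = math.pi * 150 ** 2  # 300 mm wafer, edge losses ignored


def good_dies_per_wafer(die_area_mm2: float, defects_per_mm2: float,
                        bin_yield: float) -> float:
    """Dies that are defect-free (Poisson model) and also hit the clock target."""
    dies = math.floor(WAFER_AREA_MM2 / die_area_mm2)
    defect_yield = math.exp(-defects_per_mm2 * die_area_mm2)
    return dies * defect_yield * bin_yield


# Hypothetical designs: wide/slow bins easily, narrow/fast bins less often.
wide_slow = good_dies_per_wafer(360, 0.001, bin_yield=0.95)
narrow_fast = good_dies_per_wafer(300, 0.001, bin_yield=0.75)
print(f"wide and slow: {wide_slow:.0f} good dies, narrow and fast: {narrow_fast:.0f} good dies")
```

With these made-up inputs the two designs land within about one good die of each other; nudge any single input and the winner flips.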

www.youtube.com/@Pemalite