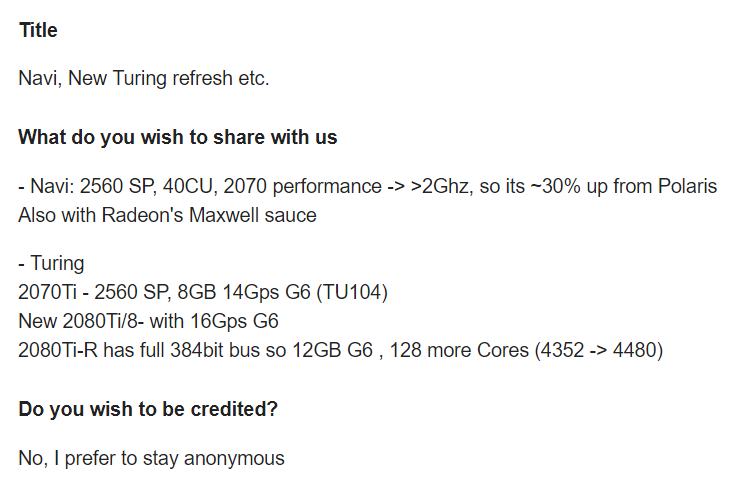

| Trumpstyle said: Guys a new REAL leak, videocardz.com is very reliable, as I have been predicting, Navi can reach 2ghz+

All those youtube rumors are fake and this one is real, it's Navi 10 and PS5 will have a cut-down navi 10 = 36 CU's clocked at 1,8ghz giving 8,3TF :) it's almost over now hehe. Edit: Looked at pc gaming benchmarks to see where performance should land. The PS5 should have around geforce 1080/Vega64 performance if this information is correct. |

It's an anonymous source... We have no idea if it holds any credibility. Sub-40 CUs seems about what I would expect with high clock speeds though, mainstream part and all that.

"Radeon Maxwell sauce" is bullshit though. Polaris and Vega already brought many of the things that drive Maxwell's efficiency to the table anyway... Plus Maxwell is getting old, which shows how many years AMD is behind in many of the aspects that make nVidia's GPUs so efficient for gaming.

Also there is a larger performance gap between Polaris and the 2070 than just 30%... And the jump between the RX 580 and Vega 64 is a good 45-65% depending on the application... Navi with 40 CUs will likely come up short against even Vega 56 and definitely Vega 64 and the RTX 2070.
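For what it's worth, the 8.3TF figure in the leak is just paper math. Here is a rough sketch of where it comes from, assuming Navi keeps the GCN layout of 64 shaders per CU and 2 FLOPs per shader per clock (my assumption, nothing in the leak confirms it). The function name is made up for illustration.

```python
def peak_tflops(compute_units, clock_ghz, shaders_per_cu=64, flops_per_clock=2):
    """Theoretical peak single-precision TFLOPS.

    shaders_per_cu=64 and flops_per_clock=2 (one fused multiply-add)
    are assumptions carried over from GCN, not confirmed for Navi.
    """
    gflops = compute_units * shaders_per_cu * flops_per_clock * clock_ghz
    return gflops / 1000.0


# The rumored cut-down part: 36 CUs at 1.8GHz.
print(f"36 CUs @ 1.8GHz = {peak_tflops(36, 1.8):.2f}TF")

# A hypothetical full 40 CU part at the same clock, for comparison.
print(f"40 CUs @ 1.8GHz = {peak_tflops(40, 1.8):.2f}TF")
```

Keep in mind this is a theoretical ceiling only, it says nothing about how efficiently the architecture turns those FLOPs into frames.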

Either way, like all rumors, grain of salt and all of that, don't take it as gospel until AMD does a reveal or we can see benchmarks from legitimate outlets like AnandTech.

| fatslob-:O said: Maybe until the next generation arrives ? It'll be way more pronounced from then on ... |

Three console generations is a bit of a stretch... Either way my stance is we can only base things on the information we have today, not future hypotheticals.

Thus the statement that consoles will drive AMD's GPU performance/efficiency on PC is a little optimistic when there are four consoles on the market today with AMD hardware and the needle hasn't really shifted in AMD's favor.

Not to say that AMD hasn't gained a slight boost out of it with development pipelines, but it just means sweet bugger all in the grand scheme of things... Which is certainly not a good thing.

I would like AMD to be toe-to-toe with nVidia, that is when innovation is at its best and prices are at their lowest.

| fatslob-:O said: No they really couldn't. The Wii's TEV was roughly the equivalent of shader model 1.1 for pixel shaders so they were still missing programmable vertex shaders. The Flipper still used hardware accelerated T&L for vertex and lighting transformations so you just couldn't straight add the feature as easily since it required redesigning huge parts of the graphics pipeline. Both the 360 and the PS3 came with a bunch of their own goodies as well. For the former, you had a GPU that was completely 'bindless' and 'memexport' instruction was nice a precursor to compute shaders. With the latter, you have SPUs which are amazingly flexible like the Larrabee concept and you effectively had the equivalent of compute shaders but it even supported hardware transnational memory(!) like as featured on Intel's Skylake CPU architecture ... (X360 and PS3 had forward looking hardware features that were far into the future) |

You can't really compare TEV to your standard shader model... TEV has more in common with nVidia's register combiners.

The Gamecube/Wii had Vertex Shader... But the transform and lighting engine was semi-programmable too, I would even argue more programmable than AMD's Morpheus parts back in the day.

In saying that... It's not actually an ATI designed part, it is an ArtX designed part so certain approaches will deviate.

For such a tiny chip, she could pull her weight, especially when you start doing multiple passes and leverage its texturing capabilities.

Sadly, due to its lack of overall performance relative to the Xbox 360, the effects did have to be pared back rather substantially.

And I am not denying that the Xbox 360 and Playstation 3 came with their own bunch of goodies either... The fact that the Xbox 360 has Tessellation, for example, is one of them.

| fatslob-:O said: The Xbox 360 is definitely closer to an Adreno 2XX design than the R5XX design which is not a bad thing. Xbox 360 emulator developers especially seek Adreno 2XX devices for reverse engineering purposes since it much helps further their goals ... |

Don't think I will ever be able to agree with you on this point, not with the work I did on R500/R600 hardware.

And like I said before... Adreno was based upon AMD's desktop GPU efforts, so it will obviously draw similarities with parallel GPU releases for other markets... But Xenos is certainly based upon R500 with features taken from R600... Which just means that Adreno was based on the R500 as well.

| fatslob-:O said: That's not what I heard among the community. AMD's OpenGL drivers are already slow in content creation, professional visualization, games, and I consistently hear about how broken they are in emulation ... (for blender, AMD's GL drivers weren't even on the same level as the GeForce 200 series until GCN came along) The last high-end game I remembered using OpenGL were No Man's Sky and Doom but until a Vulkan backend came out, AMD got smoked by Nvidia counterparts ... OpenGL on AMD's pre-GCN arch is a no go ... |

When I say "wasn't too bad" I didn't mean industry leading or perfect. They just weren't absolutely shite.

Obviously AMD has always pushed its DirectX capabilities harder than OpenGL... Even going back to the Radeon 9000 vs Geforce FX war with Half Life 2 (DirectX) vs Doom 3 (OpenGL).

OpenGL wasn't too bad on the later Terascale parts.

I mean... Take Wolfenstein via OpenGL on id tech... AMD pretty much dominated nVidia on this title with its Terascale parts.

Granted, it's an engine/game that favored AMD hardware, but the fact that it was OpenGL is an interesting aspect.

https://www.anandtech.com/show/4061/amds-radeon-hd-6970-radeon-hd-6950/22

| fatslob-:O said: CUDA documentation seems to disagree that you could just group Kepler with Fermi together. Kepler has FCHK, IMADSP, SHF, SHFL, LDG, STSCUL, and a totally new set of surface memory instructions. The other things Kepler has deprecated from Fermi were LD_LDU, LDS_LDU, STUL, STSUL, LONGJMP, PLONGJMP, LEPC but all of this is just on the surface(!) since NVPTX assembly is not Nvidia's GPUs native ISA. PTX is just a wrapper that makes Nvidia GPUs true ISA hidden so there could be many other changes going on underneath there ... (people have to use envytools to reverse engineer Nvidia's blob so that they could make open source drivers) Even with Turing, Nvidia does not group it together with Volta since Turing has specialized uniform operations ... |

I think you are nitpicking a little too much.

Because even with successive updates to Graphics Core Next there are some deviations in various instructions, features and other aspects related to the ISA.

They aren't 1:1 with each other.

Same holds true for nVidia.

But from an overall design principle, Fermi and Kepler are related, just like Maxwell and Pascal.

| fatslob-:O said: @Bold No developers are worried about new software breaking compatibility with old hardware but just about everyone is worried about new hardware breaking compatibility with old software and that is becoming unacceptable. AMD does NOT actively do this in comparison to Nvidia ... These are the combinatorial configurations that Nvidia has to currently maintain ... APIs: CUDA, D3D11/12, Metal (Apple doesn't do this for them), OpenCL, OpenGL, OpenGL ES, and Vulkan Platforms: Android (still releasing quality drivers despite zero market share), Linux, MacOS, and Windows (all of them have different graphics kernel architecture) Nvidia has to support ALL of that on at least 3 major instruction encodings which are Kepler, Maxwell/Pascal, Volta, Turing and they only MAKE ENDS MEET in terms of compatibility with more employees than AMD by comparison which only have to maintain the following ... APIs: D3D11/12, OpenGL (half-assed effort over here), and Vulkan (Apple makes the Metal drivers for AMD's case plus AMD stopped developing OpenCL and OpenGL ES altogether) Platforms: Linux and Windows AMD have 2 major instruction encodings at most with GCN all of which are GCN1/GCN2/Navi (Navi is practically a return to consoles judging from LLVM activity) and GCN3/Vega (GCN4 shares the same exact ISA as GCN3) but despite focusing on less APIs/platforms they STILL CAN'T match Nvidia's OpenGL driver quality ... |

End of the day... The little Geforce 1030 I have is still happily playing games from the DOS era... Compatibility is fine on nVidia hardware, developers don't tend to target for specific hardware most of the time anyway on PC.

nVidia can afford more employees than AMD anyway, so the complaint on that aspect is moot.

As for OpenGL, that is being deprecated in favor of Vulkan anyway for next gen. (Should have happened this gen, but I digress.)

| fatslob-:O said: I don't see why not ? A single channel DDR4 module at 3.2Ghz can deliver 25.6 GB/s An octa-channel DDR5 modules clocked at 6.4Ghz can bring a little under 410 GB/s which is well above a 1080 and with 7nm, you play around with a more transistors in your design ... Anandtech may not paint their outlook as bleak but Nvidia's latest numbers and attempt at acquisition doesn't bode well for them ... |

DDR3 1600MHz on a 64-bit bus = 12.8GB/s.

DDR4 3200MHz on a 64-bit bus = 25.6GB/s.

DDR5 6400MHz on a 64-bit bus = 51.2GB/s.

DDR3 1600MHz on a 128-bit bus = 25.6GB/s.

DDR4 3200MHz on a 128-bit bus = 51.2GB/s.

DDR5 6400MHz on a 128-bit bus = 102.4GB/s.

DDR3 1600MHz on a 256-bit bus = 51.2GB/s.

DDR4 3200MHz on a 256-bit bus = 102.4GB/s.

DDR5 6400MHz on a 256-bit bus = 204.8GB/s.

I wouldn't be so bold as to assume that motherboards will start coming out with 512-bit buses to drive 400GB/s+ of bandwidth. That would be prohibitively expensive.

The Xbox One X is running with a 384-bit bus... but that is a "premium" console... Even then that would mean...

DDR3 1600MHz on a 384-bit bus = 76.8GB/s.

DDR4 3200MHz on a 384-bit bus = 153.6GB/s.

DDR5 6400MHz on a 384-bit bus = 307.2GB/s.

That's an expensive implementation for only 307GB/s of bandwidth, considering that ends up being less than the Xbox One X... And that is using GDDR5... And no way is that approaching Geforce 1080 levels of performance.

AMD would need to make some monumental leaps in efficiency to get there... And while we are still shackled to Graphics Core Next... It likely isn't happening anytime soon.

You would simply be better off using GDDR6 on a 256bit bus.
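If anyone wants to check the bandwidth math, it is just transfer rate multiplied by bus width in bytes. A minimal sketch that reproduces the numbers above; the function name is made up for illustration, and the 14Gbps GDDR6 speed at the end is an assumed comparison point rather than anything confirmed for a specific product.

```python
def peak_bandwidth_gbs(transfer_rate_mts, bus_width_bits):
    """Theoretical peak bandwidth in GB/s.

    transfer_rate_mts: effective data rate in mega-transfers per second
                       (the "1600" in DDR3-1600).
    bus_width_bits:    total width of the memory bus in bits.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * bytes_per_transfer / 1000


MEMORY_RATES_MTS = {"DDR3": 1600, "DDR4": 3200, "DDR5": 6400}
BUS_WIDTHS_BITS = (64, 128, 256, 384, 512)

for width in BUS_WIDTHS_BITS:
    for name, rate in MEMORY_RATES_MTS.items():
        bandwidth = peak_bandwidth_gbs(rate, width)
        print(f"{name} {rate} on a {width}-bit bus = {bandwidth:.1f}GB/s")

# Assumption: GDDR6 running at 14Gbps per pin on a 256-bit bus.
gddr6 = peak_bandwidth_gbs(14000, 256)
print(f"GDDR6 14Gbps on a 256-bit bus = {gddr6:.1f}GB/s")
```

The 512-bit row is the octa-channel case from the quote (8 x 64-bit), which is where the "a little under 410GB/s" comes from. These are theoretical peaks, real-world throughput will land lower.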

| fatslob-:O said: I doubt it, Intel are not even committing to a HARD date for the launch of their 10nm products so no way am I going to trust them anytime soon with 7nm unless they've got a contingency plan in place with either Samsung or TSMC ... |

They stuffed up with 10nm... And a lot of work has had to go into making that line usable.

However, the team that is working on 7nm at Intel hasn't had the setbacks that the 10nm team has... And for good reason.

I remain cautiously optimistic though.

| fatslob-:O said: We should not use old games because it's exactly as you said, "it wasn't designed for today's hardware" which is why we shouldn't skew a benchmark suite's to be heavily weighted in favour of past workloads ... |

We should if said games are the most popular games in history that are actively played by millions of gamers.

Those individuals who are upgrading their hardware probably want to know how it will handle their favorite game... And considering we aren't at a point yet where low-end parts or IGPs are capable of driving GTA5 at 1080P ultra... Well. There is still relevance to including it in a benchmark suite.

Plus it gives a good representation of how the hardware handles older APIs/workloads.

| fatslob-:O said: And just like that, Avalanche Studio's Apex engine made the jump!

Not too long ago when they released Just Cause 4, AMD was significantly trailing behind Nvidia but with Avalanche's first release on Vulkan the tables are turning ... Only a couple of key engines left are holding out from this trend ... |

Good to hear. Fully expected though. But doesn't really change the landscape much.

Last edited by Pemalite - on 14 May 2019

www.youtube.com/@Pemalite