John2290 said:

Maybe. I've not heard this, but the eye doesn't work on resolutions or a pixel count, that's for sure. From my time with photography I know there is a cone of focus in the center of vision that can't differentiate 2mp held at thumb distance in less than a 5 degree arc. At 20/20 vision the eye finds it difficult to differentiate pixels up that close at near 8k, for most people, spread across 110 degrees. Of course there'll be the exception of people who are super focused with eyes like a hawk who need just a tad more, and as the FOV increases the pixel count may need to increase to something closer to 10k, but 8k per eye will easily eliminate the screen door effect and put a soft cap on resolution for 95 percent of people. After 8k there's not much point in adding more pixels to screens in general (many say 4k is too far as is).

With VR headsets and pupil tracking it will be possible to take full advantage of how the human eye works.

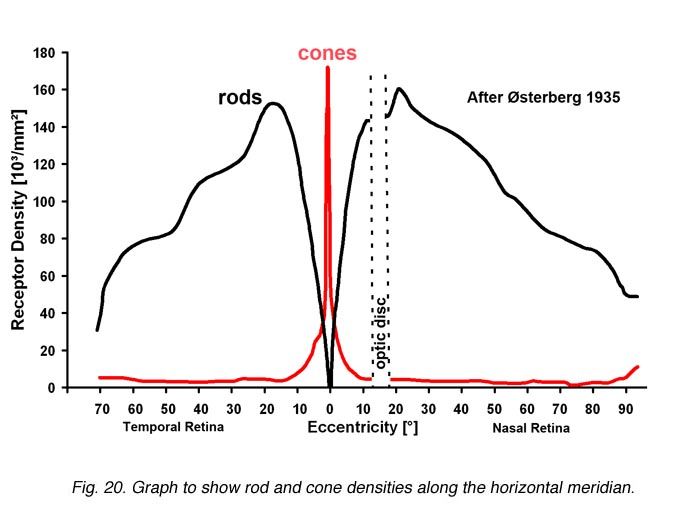

Cones are responsible for color vision in bright light and resolve detail, while rods are mostly responsible for vision in dim light, with no color perception and low detail. Rods are mostly adapted to detect motion and draw your attention to that part of your field of view. At night you can see flashes of light from the corner of your eyes, yet when you turn to them they disappear, as the cones can't detect the faint light.

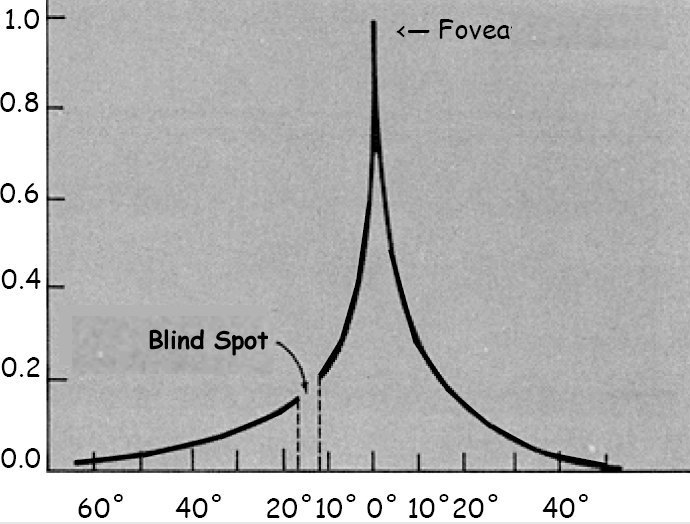

Anyway, sharp 20/20 vision is only possible right in the center.

Outside a 10 degree cone (5 degrees either side) you're already down to 40% of 20/20 vision.

Outside 20 degrees you're at less than 20% of 20/20 vision.

Rendering 110 degrees fov at full resolution is very wasteful.

20/20 vision is associated with 60 pixels per degree, yet that's only the point at which you can differentiate cycles of maximum contrast: 30 cycles per degree (cpd) of alternating white and black lines.

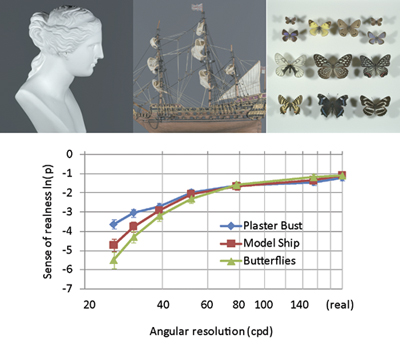

NHK did a test with people with 20/20 vision to see up to what point they could tell which screen has the higher resolution, or feels more real.

As you can see, towards 150 pixels per degree it starts to devolve into guesswork.

The higher the cpd of the display is, the more real it feels, with diminishing returns.

1080p at a 30 degree fov sits at 32 cpd. 4K at a 30 degree fov doubles that. Bear in mind that's sitting at most 8.8 ft from a 65" TV (1.62 x diagonal screen size). So if you sit close enough, 4K is definitely going to feel more real. Yet it's a massive waste to render the whole scene at 4K.
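To check the numbers above, here's a rough sketch of the arithmetic. It assumes a flat 16:9 screen and averages the pixels evenly over the viewing angle (in reality a flat screen has slightly more pixels per degree towards the edges). The function names are just made up for illustration.

```python
import math

def cycles_per_degree(horizontal_pixels, fov_degrees):
    """Average cpd across the screen: one cycle is one light plus one dark pixel."""
    return horizontal_pixels / fov_degrees / 2

def viewing_distance_inches(diagonal_inches, fov_degrees, aspect=(16, 9)):
    """Distance at which a flat screen spans fov_degrees horizontally."""
    w, h = aspect
    width = diagonal_inches * w / math.hypot(w, h)
    return (width / 2) / math.tan(math.radians(fov_degrees / 2))

cpd_1080p = cycles_per_degree(1920, 30)   # 32.0
cpd_4k = cycles_per_degree(3840, 30)      # 64.0

distance = viewing_distance_inches(65, 30)
print(cpd_1080p, cpd_4k)
print(f"{distance / 12:.1f} ft, {distance / 65:.2f} x diagonal")
```

The distance comes out at roughly 8.8 ft, or a bit over 1.6 times the diagonal, which lines up with the figures in the paragraph above.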

For VR you can, for example, render the central 5 degrees at 64 cpd, 10 degrees at 32 cpd, 20 degrees at 16 cpd and the rest at 8 cpd. That creates the illusion of looking at a 65" 4K display from at most 8.8 ft (except now it's filling up a 110 degree fov) while only rendering about 50% of the pixels required for the 4K display, and that can be reduced further with smarter rendering techniques. Of course, to achieve this you still need a 14K display per eye for a 110 degree fov... Yet putting a pristine image on that 14K VR display will cost half of rendering that image for a 4K TV, or the same when doubled for both eyes.
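The pixel budget for that layered example can be sketched like this. It's a back-of-the-envelope count, not how any real engine does it: I'm assuming a square field of view per eye and that every layer is rendered as a full square, including the part that a sharper inner layer later covers.

```python
def layer_pixels(fov_degrees, cpd):
    """Pixels in a square layer spanning fov_degrees at the given cycles per degree."""
    side = fov_degrees * cpd * 2  # 2 pixels per cycle
    return side * side

# (fov in degrees, cpd) for each layer, from the center outwards
LAYERS = [(5, 64), (10, 32), (20, 16), (110, 8)]

foveated = sum(layer_pixels(fov, cpd) for fov, cpd in LAYERS)
uhd = 3840 * 2160                     # pixels in one 4K TV frame
panel_side = 110 * 64 * 2             # pixels across a 110 degree panel at 64 cpd
full_panel = panel_side * panel_side  # rendering that panel at full resolution

print(foveated)                       # 4326400
print(f"{foveated / uhd:.0%} of a 4K frame")
print(panel_side)                     # 14080
print(f"{foveated / full_panel:.1%} of the full-resolution panel")
```

Under those assumptions the four layers add up to a bit over half of a 4K frame, while the panel itself needs about 14K pixels across to display the sharpest layer wherever the eye is looking.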

More realistically, for an 8K per eye headset approaching a 1080p TV experience, you'll need to render even less than half of what a 4K display requires. It's just that atm we're at the very bottom of resolution, where foveated rendering has the smallest gains, yet those gains will only go up as displays get higher pixel density. PSVR sits at a paltry 5 cpd, although with the distortion favoring pixel density in the center it might just reach 8 cpd there: 25% of 1080p at a 30 degree viewing angle, or at most 270p in a 30 degree window in the center.

Anyway, the pixels of 8K spread across 150 degrees are still easily detectable by the human eye; that's still below 1080p at a 30 degree viewing angle. Yet even going from the current 1K per eye headsets to a 2K per eye headset will be a huge gain. There's a long way to go before diminishing returns set in for VR. And with foveated rendering, display tech can comfortably outpace GPU power. Finally a diminishing need for more GPU grunt to get better results :)
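For anyone who wants to plug in their own headset, here's a small sketch of the comparison against a 1080p TV at a 30 degree viewing angle. The PSVR figures (960 pixels across per eye, roughly 100 degrees fov) are my assumption and not exact, and the pixels are averaged evenly over the fov, ignoring lens distortion.

```python
TV_1080P_CPD = 32  # 1080p at a 30 degree viewing angle

def cycles_per_degree(horizontal_pixels, fov_degrees):
    """Average cpd: pixels per degree divided by 2 pixels per cycle."""
    return horizontal_pixels / fov_degrees / 2

def equivalent_lines(cpd):
    """Vertical resolution a TV at a 30 degree viewing angle would need for the same cpd."""
    return cpd / TV_1080P_CPD * 1080

psvr_average = cycles_per_degree(960, 100)  # assumed panel and fov, about 4.8 cpd
psvr_center = 8                             # best-case center estimate from the post
eight_k_150 = cycles_per_degree(7680, 150)  # 8K per eye spread over 150 degrees

for name, cpd in [("PSVR average", psvr_average),
                  ("PSVR center", psvr_center),
                  ("8K at 150 degrees", eight_k_150)]:
    print(f"{name}: {cpd:.1f} cpd, about {equivalent_lines(cpd):.0f}p")
```

With these inputs the 8K at 150 degrees case lands at about 25.6 cpd, so still under the 32 cpd of the 1080p TV.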