Pemalite said:

False. |

Ah yes, 8 cores cost $300, while 12 and 16 cores cost $800.

| The Cell was never well received. |

By whom? Third parties who want to save money wherever they can? And yet they are still greedy enough to put microtransactions into their latest games this generation. Moral of the story: give them a Cell processor so they have to think about that instead of loot boxes.

|

That is completely up to the developer and how the game is bottlenecked. If you are GPU bound, then doubling the GPU performance can mean the difference between 30fps and 60fps, regardless of the CPU being used. |

Most developers aren't programming that way, though; otherwise we would already have had 60fps games on consoles. There are a few games designed that way, like Knack 1 and Knack 2. Maybe The Evil Within 1 and 2 too, I don't know.
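
Whether a faster GPU doubles the frame rate depends on which side sets the frame time. A minimal sketch, assuming a simple pipelined model where the CPU and GPU work on consecutive frames in parallel and the slower stage decides the frame time; the millisecond costs below are hypothetical, not measurements from any game.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when CPU and GPU work on consecutive frames in parallel.

    ASSUMPTION: a simple pipelined model where the slower of the two stages sets
    the frame time; real engines add sync points, driver overhead and so on.
    """
    return 1000.0 / max(cpu_ms, gpu_ms)


scenarios = {
    "GPU-bound": (15.0, 33.3),  # hypothetical per-frame costs in milliseconds
    "CPU-bound": (33.3, 20.0),
}

for name, (cpu_ms, gpu_ms) in scenarios.items():
    before = fps(cpu_ms, gpu_ms)
    after = fps(cpu_ms, gpu_ms / 2)  # GPU twice as fast
    print(f"{name}: {before:.0f} fps -> {after:.0f} fps with a 2x faster GPU")
```

In the GPU-bound case, halving the GPU cost takes the game from about 30 to about 60 fps; in the CPU-bound case it stays at about 30 fps no matter how fast the GPU gets.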

|

If you think you have ample knowledge about Cell. Then please. Describe those "extra graphics" to me.

|

Well, that would be up to the developers, since the Cell can render almost anything. Rendering shadows and real-time lighting would be good uses, as would calculating destructible environments.

| You are not even making any sense. You can have a GPU with more Gflops perform slower than a GPU with less Gflops. - Do you wish for me to provide some evidence for this like I have prior in other threads? Because the GPU industry is littered with examples where this is the case. |

Yeah, because the optimisation is bad. AMD has the most powerful hardware right now, with the Vega 64 rated at around 12.7 teraflops, yet it still struggles to outperform a GTX 1080 Ti rated at around 11.3 teraflops.
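
The teraflop ratings are theoretical peak numbers from a simple formula: shader count × 2 floating-point operations per clock (one fused multiply-add) × boost clock. A minimal sketch, assuming reference-board boost clocks (1546 MHz for the RX Vega 64, 1582 MHz for the GTX 1080 Ti); the figure says nothing about how much of that peak a game actually achieves.

```python
def peak_fp32_tflops(shader_count: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Each shader core can retire one fused multiply-add (FMA) per clock,
    and an FMA counts as 2 floating-point operations.
    """
    gflops = shader_count * 2 * boost_clock_ghz  # cores x FLOPs/clock x GHz = GFLOPS
    return gflops / 1000.0  # GFLOPS -> TFLOPS


# Reference-board specs (assumed: reference boost clocks, not factory-overclocked cards).
cards = {
    "Radeon RX Vega 64": (4096, 1.546),
    "GeForce GTX 1080 Ti": (3584, 1.582),
}

for name, (shaders, clock) in cards.items():
    print(f"{name}: {peak_fp32_tflops(shaders, clock):.1f} TFLOPS")
```

This prints roughly 12.7 TFLOPS for the Vega 64 and 11.3 TFLOPS for the 1080 Ti.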

| The WiiU and Switch don't have good graphics. That doesn't mean the games cannot have great artistic flair, did you not watch the Digital Foundry on the breakdown of Zelda's imagery? There were a ton of graphical sacrifices that were made. But because you desire evidence... Here you go. Sub 720P resolution: http://www.eurogamer.net/articles/digitalfoundry-2017-zelda-breath-of-the-wild-uses-dynamic-resolution-scaling Drops to 20fps were a thing: http://www.eurogamer.net/articles/2017-03-31-zelda-patch-improves-framerate-on-switch Poor Texture Filtering: http://www.eurogamer.net/articles/digitalfoundry-2017-the-legend-of-zelda-breath-of-the-wild-face-off Poor Anti-Aliasing, draw distance on things like grass also leaves much to be desired, low-quality shadowing. |

AA is a GPU thing; the CPU isn't the bottleneck for this game on the Wii U.

Yeah, but do you know what CPU is inside the Wii U? A 3-core, 1.2 GHz PowerPC, which is weaker than even the Xenon inside the Xbox 360. Yet look at the games that still came out on the system this year.

Obviously the Wii U has other advantages, like more RAM and a faster GPU, but it's still impressive for a 1.2 GHz CPU. And most Nintendo games run at 60fps.

|

And? All CPUs can render. But if you think that render video is somehow superior to what RSX or a modern CPU can give us... Then you are kidding yourself.

|

It's a benchmark from 2005-2007, from the PS2 era; what did you expect? But there is more:

https://www.youtube.com/watch?v=4aOk3IpomA4#t=2m01s

https://www.youtube.com/watch?v=404wWR5mrtU

https://www.youtube.com/watch?v=MRB-zQogLeY

| Except, no. Also... Ram typically has no processing capabilities, so it doesn't actually "speed" anything up. I demand you provide evidence that Cell would provide the same or better performance than Jaguar when equipped with a powerful GPU and plentiful amount of Ram. Because the games say otherwise: Battlefield 4 has more than twice the multiplayer players (24 vs 64) in a match, that is something that is very much CPU driven, not memory or GPU. See here: http://www.bfcentral.net/bf4/battlefield-4-ps3/ And here: https://battlelog.battlefield.com/bf4/news/view/bf4-launches-on-ps4-with-64-players/ |

Yes, it does: it lets rendering run at full speed when it needs the bandwidth. You know I can't give evidence for that, so why bring it up? It's not like I can buy a Cell, put a PC graphics card in it and try it out. But some current-gen remasters show that they are struggling with the Jaguar, since they still cap the framerate at 30fps, like Skyrim, The Ezio Collection or Resident Evil Remastered on PS4.

And I am pretty sure there are more ports from last gen, and even completely new remasters like the Crash Bandicoot trilogy, that can't get past 30fps. It's pretty evident at this point that the Jaguars don't make a difference.

Battlefield 4 is a multiplatform game on PS3, so it's not comparable at all. However, the PS3 did have an exclusive FPS called MAG that allowed 256 players to play online in the same match. Sony even received a Guinness World Record for that.

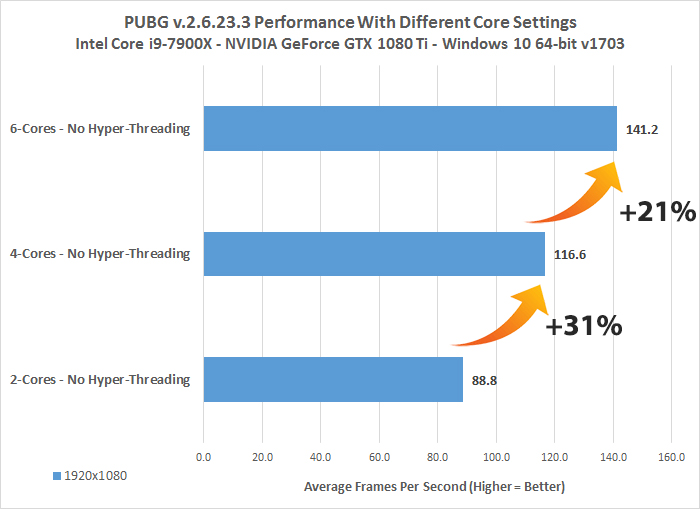

I have PUBG on PC. I have 12 threads. I can assure you, PUBG utilizes them all. |

First off, that is a $2,000 Intel CPU. Second, going from 88 to 141 fps is only about a 60% increase despite tripling the core count, which tells me that more cores aren't making much of a difference in this game. Third, the $2,000 CPU you are showing is clocked at 3.3 GHz, close to the Cell's 3.2 GHz.
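
The size of a percentage gain depends on which number you divide by. A minimal sketch using the two frame rates from the benchmark (88 and 141 fps) and the "3 times the cores" figure as stated in the post; the helper names are hypothetical.

```python
def percent_gain(baseline: float, new: float) -> float:
    """How much higher `new` is than `baseline`, as a percentage of `baseline`."""
    return (new - baseline) / baseline * 100.0


def percent_shortfall(baseline: float, new: float) -> float:
    """How much lower `baseline` is than `new`, as a percentage of `new`."""
    return (new - baseline) / new * 100.0


fps_before, fps_after = 88.0, 141.0  # frame rates from the benchmark image
core_ratio = 3.0                     # "3 times the cores", as stated in the post

speedup = fps_after / fps_before
print(f"gain over 88 fps:               {percent_gain(fps_before, fps_after):.1f}%")
print(f"88 fps is below 141 fps by:     {percent_shortfall(fps_before, fps_after):.1f}%")
print(f"scaling efficiency vs. linear:  {speedup / core_ratio:.2f}")
```

Going from 88 to 141 fps is a gain of about 60.2% over the lower number, while 88 fps is about 37.6% below 141 fps. A 1.6x speedup from 3x the cores works out to roughly 0.53 of perfectly linear scaling.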

Besides, that was not my whole argument. My argument was that this game would run better on a single- or dual-core processor clocked above 3 GHz than on an 8-core processor clocked at only 1.6 to 2.3 GHz (PS4 to Xbox One X).
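
Whether a few fast cores beat many slow ones depends on how much of the game's work can run in parallel. A minimal sketch using Amdahl's law, assuming (unrealistically) that every core does the same amount of work per clock; real Cell, Jaguar and desktop cores differ a lot in per-clock performance, and the parallel fractions below are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of the work parallelises."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def relative_throughput(clock_ghz: float, cores: int, parallel_fraction: float) -> float:
    """Toy throughput score: clock x Amdahl speedup.

    ASSUMPTION: every core does the same work per clock (equal IPC), which is
    not true of real Cell, Jaguar or desktop cores. Illustration only.
    """
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


# Hypothetical configurations loosely based on the clocks mentioned in the post.
configs = {
    "2 cores @ 3.2 GHz": (3.2, 2),
    "8 cores @ 1.6 GHz": (1.6, 8),
}

for p in (0.5, 0.7, 0.9):
    scores = {name: relative_throughput(clk, n, p) for name, (clk, n) in configs.items()}
    winner = max(scores, key=scores.get)
    details = ", ".join(f"{name} = {score:.2f}" for name, score in scores.items())
    print(f"parallel fraction {p:.2f}: {details} -> {winner}")
```

Under these assumptions the fast dual core wins when half or 70% of the work is parallel, and the slow 8-core wins once 90% of the work is parallel, so the answer hinges on how well a given game is threaded.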

|

But you made the statement that XDR2 is the best. You were wrong.

|

None of these RAM types will be available in 2019, and they probably cost more than XDR2. If you say DDR2 can be faster than GDDR5 or XDR2, provide evidence.

| I demand evidence for your baseless conspiracy theory. |

https://www.youtube.com/watch?v=ZcF36_qMd8M

|

Get back to me when they are 4k, 60fps. All those games listed would look better on PC, running high-end nVidia graphics. |

Yeah, they would, but guess what? PS4 exclusives already run on the AMD hardware inside the PS4 and PS4 Pro, and they look artistically and technically better than anything on PC. Sure, 60fps and 4K are better than 30fps at 1080p, 1440p or checkerboarding, but that doesn't make the game engine or the developers any better. To be honest, I'd take a game where I can play around with snow over 4K and 60fps.

https://www.youtube.com/watch?v=RhxZ3ph2IwU

|

You make it sound like PC doesn't get any kind of optimization? That would indeed be an ignorant assumption on your behalf. |

Yes, of course it does, but so do console games, and not just on day one. Take Resident Evil Revelations 2 on PS4, which ran worse than the original Xbox One version and is now locked at 60fps; or Homefront: The Revolution on all platforms; or MGSV (yes, the game now runs at 60fps everywhere).

The same goes for PS4 Pro patches, like FFXV, which received various PS4 Pro updates over time.

On PC that's also the case, but patches are often needed just to make a game playable in the first place. The difference between PC and consoles is that PC has a wide variety of graphics cards and processors; you may get a patch that benefits the latest graphics cards and CPUs, but most of the time not the older ones.

But regardless of all that, it doesn't answer my main point: AMD hardware, both CPUs and GPUs, runs worse than Intel or Nvidia hardware. AMD has the strongest GPU on the market and it still can't perform at the same level as Nvidia. That isn't an issue on consoles; if Nvidia and Intel were really so much better at their job, you would have seen Nvidia or Intel hardware inside consoles.

Last edited by Ruler - on 28 December 2017