I was banned yesterday and couldn't show you guys all the new stuff about the PS4 architecture I found on the web (thanks to Beyond3D for the translations and some explanations).

THE SOURCE

http://pc.watch.impress.co.jp/docs/column/kaigai/20130329_593760.html

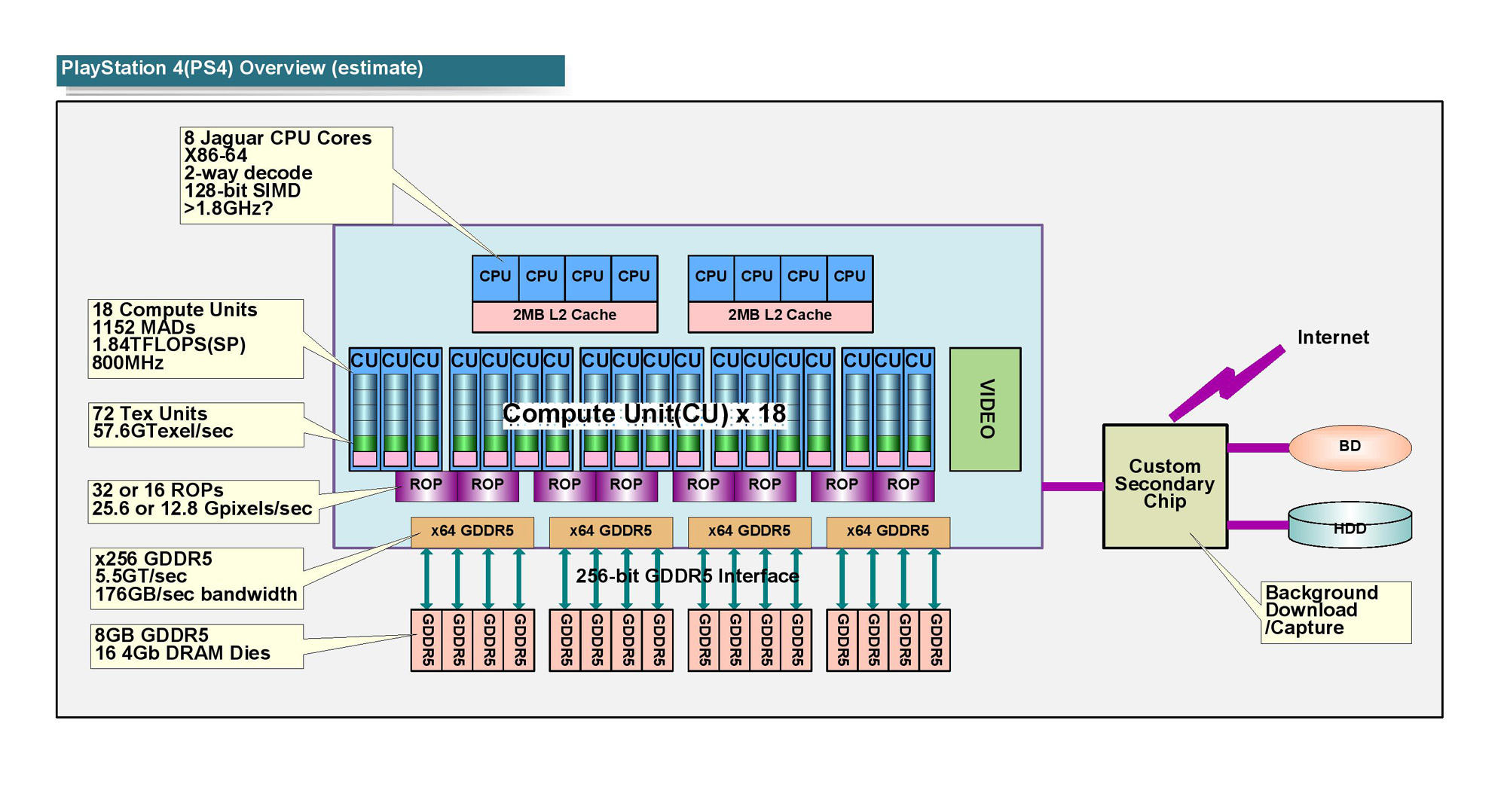

ARCHITECTURE OVERVIEW

Nothing new, but there are some open questions... the CPU clock (>1.8GHz?), the number of ROP units (16 or 32?)... the rest of the picture is already known to everybody.

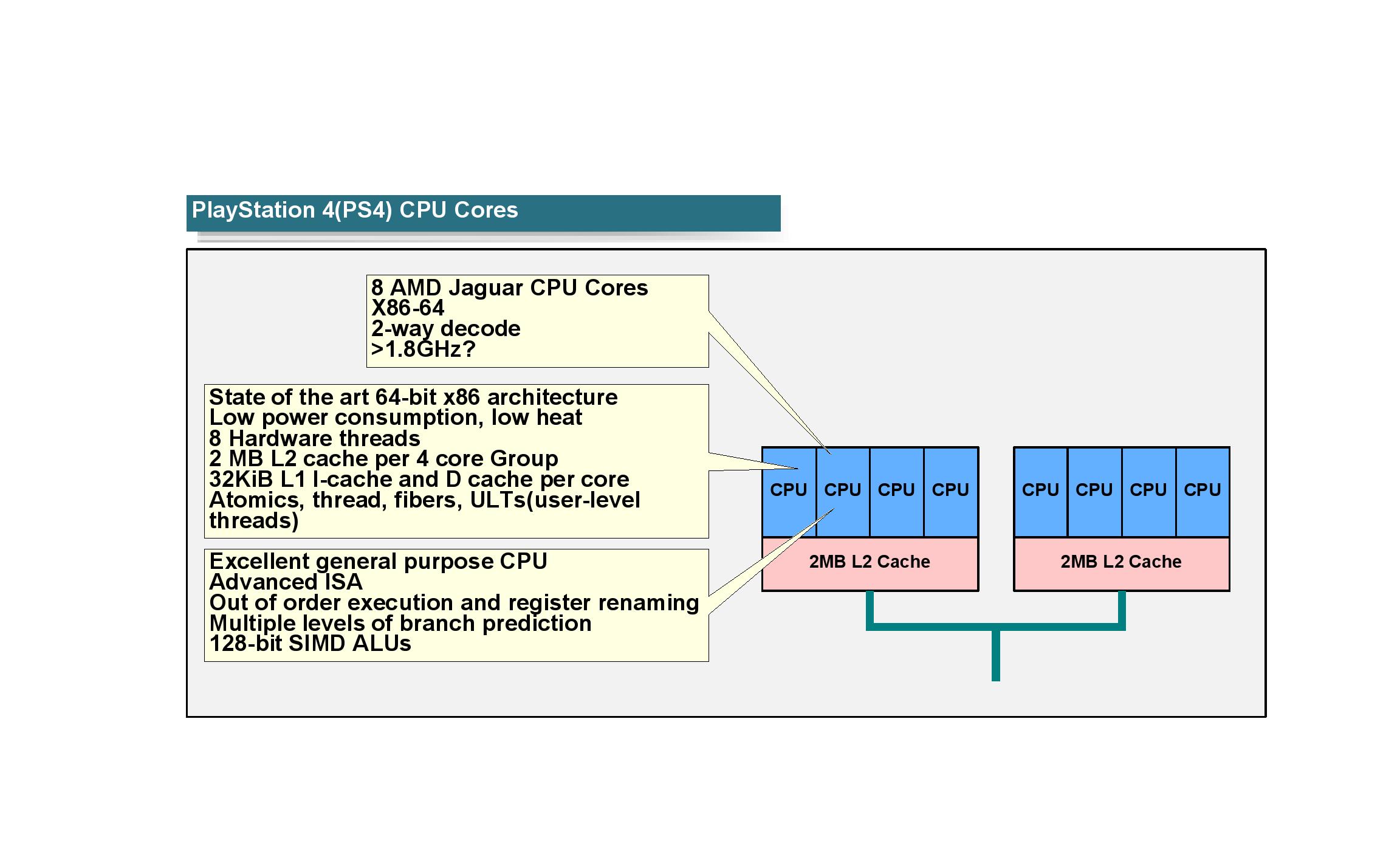

CPU

Nothing new.

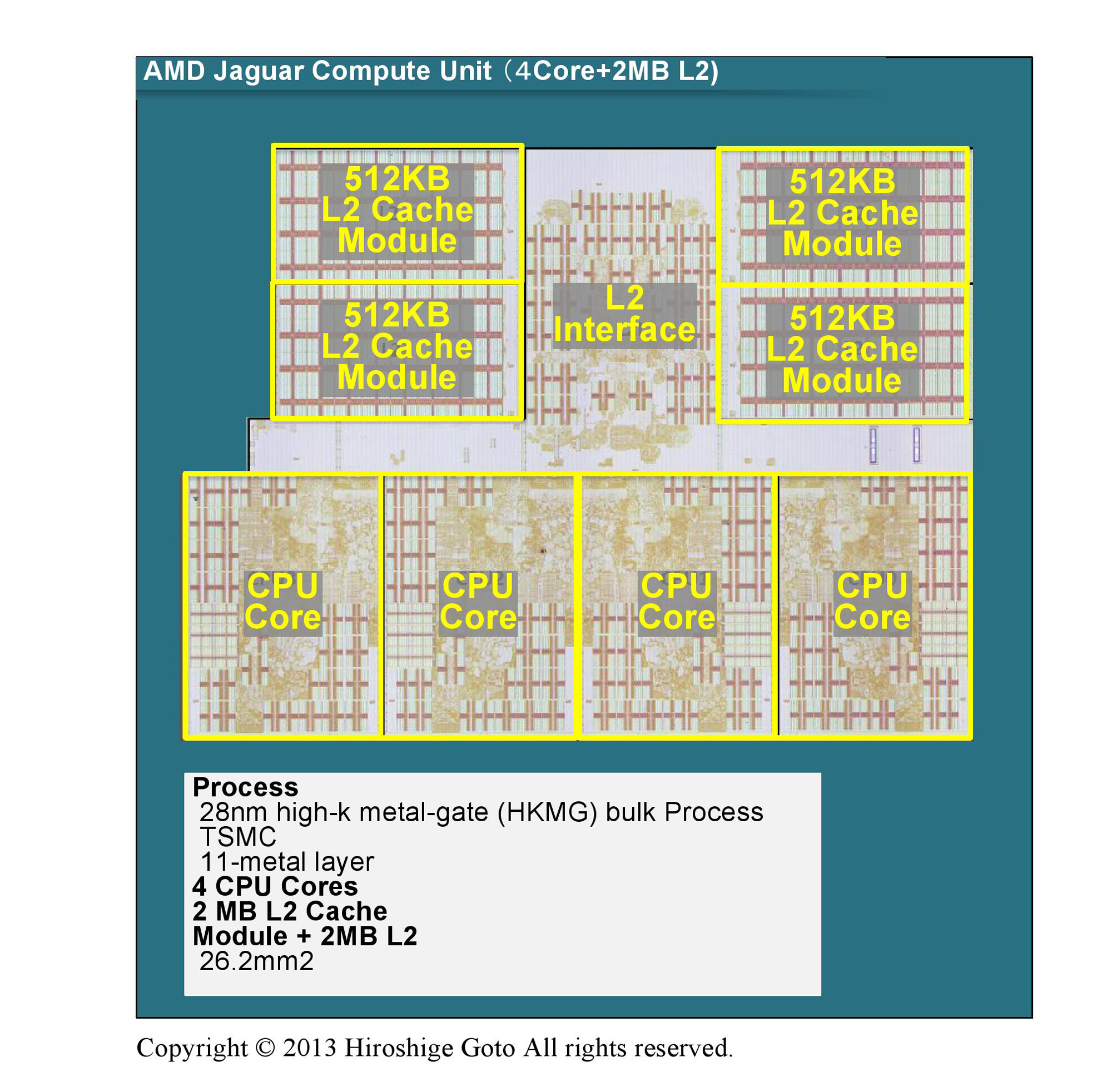

The 4-core Jaguar (the PS4 uses an 8-core version).

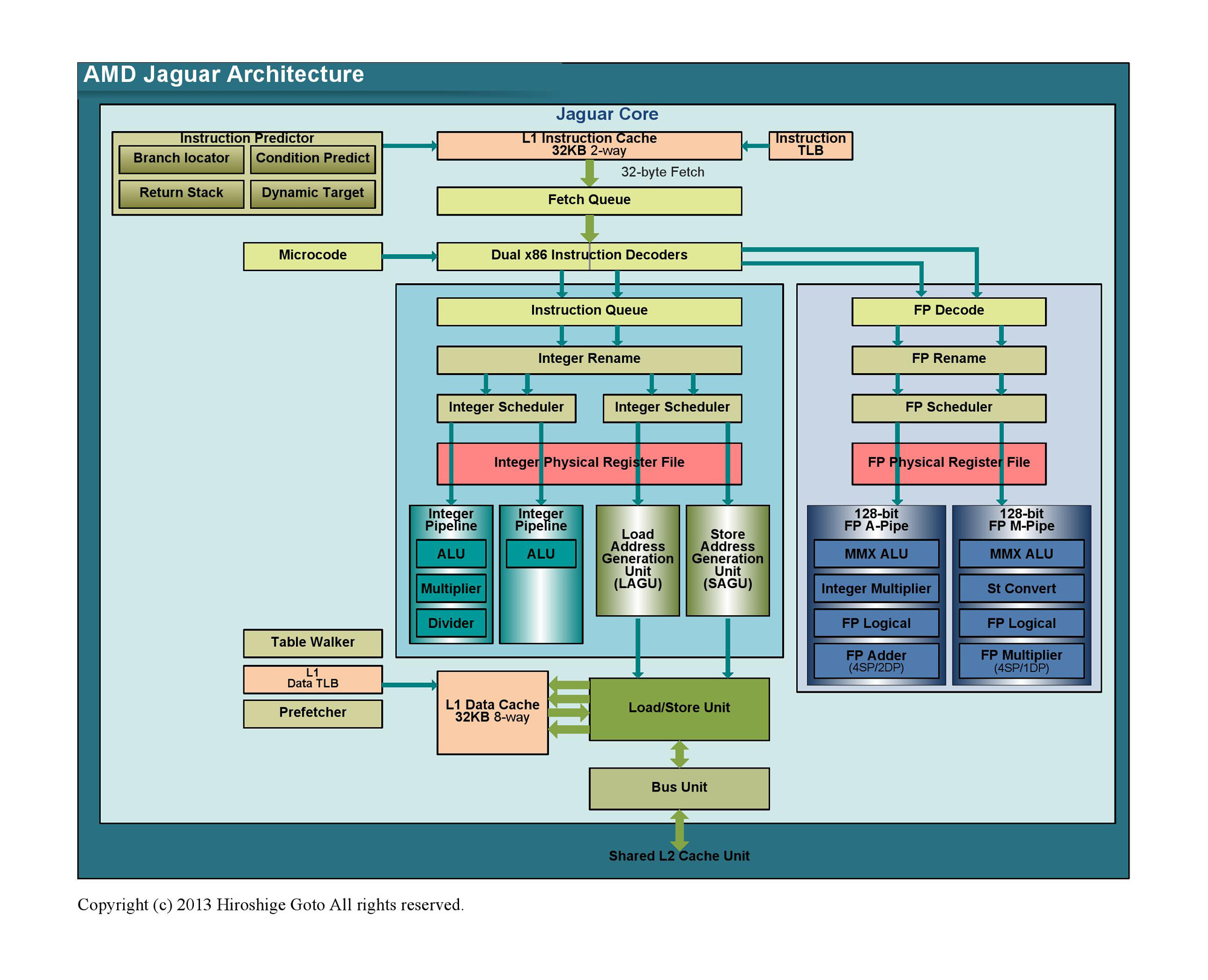

And the Jaguar overview.

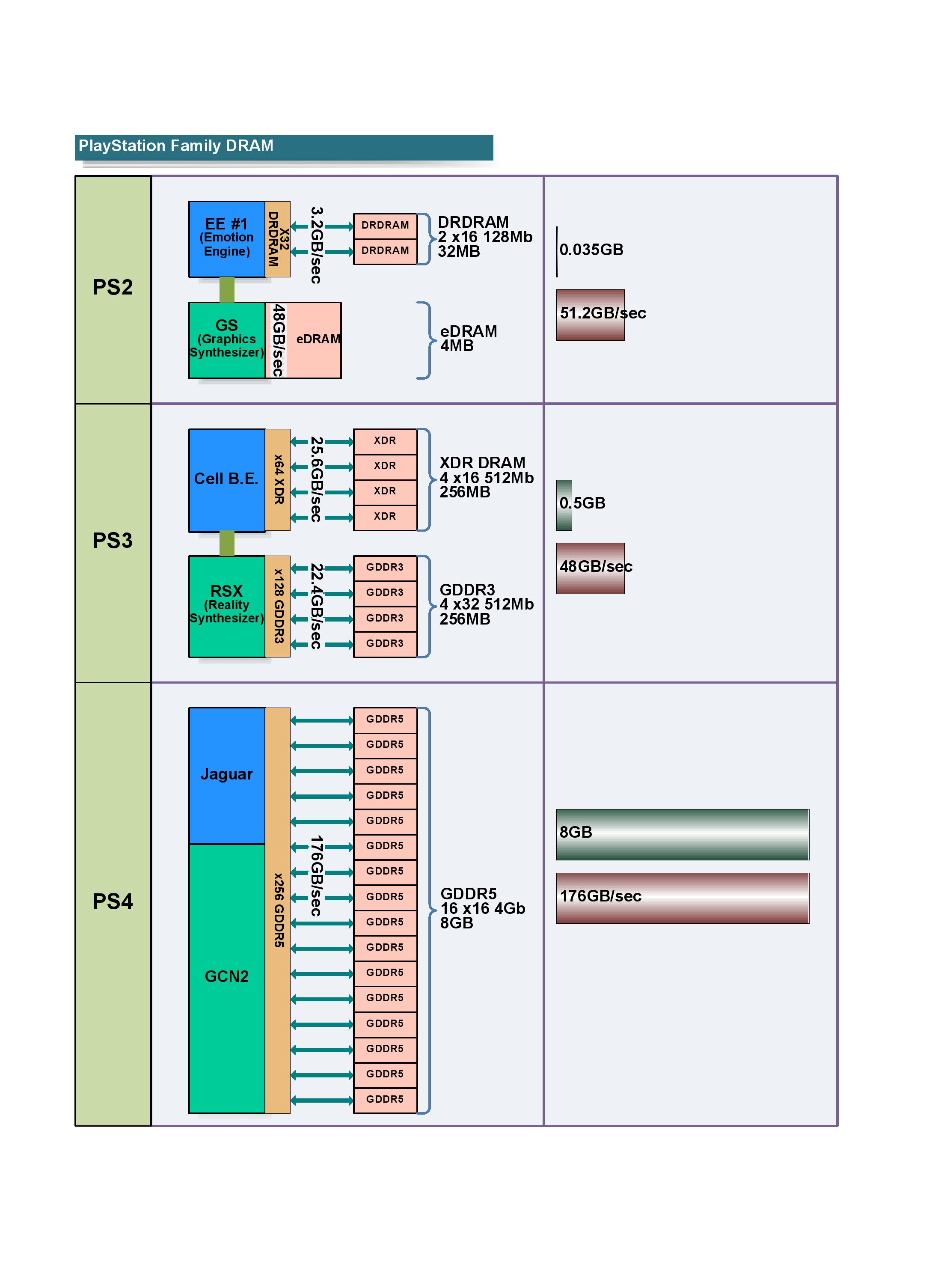

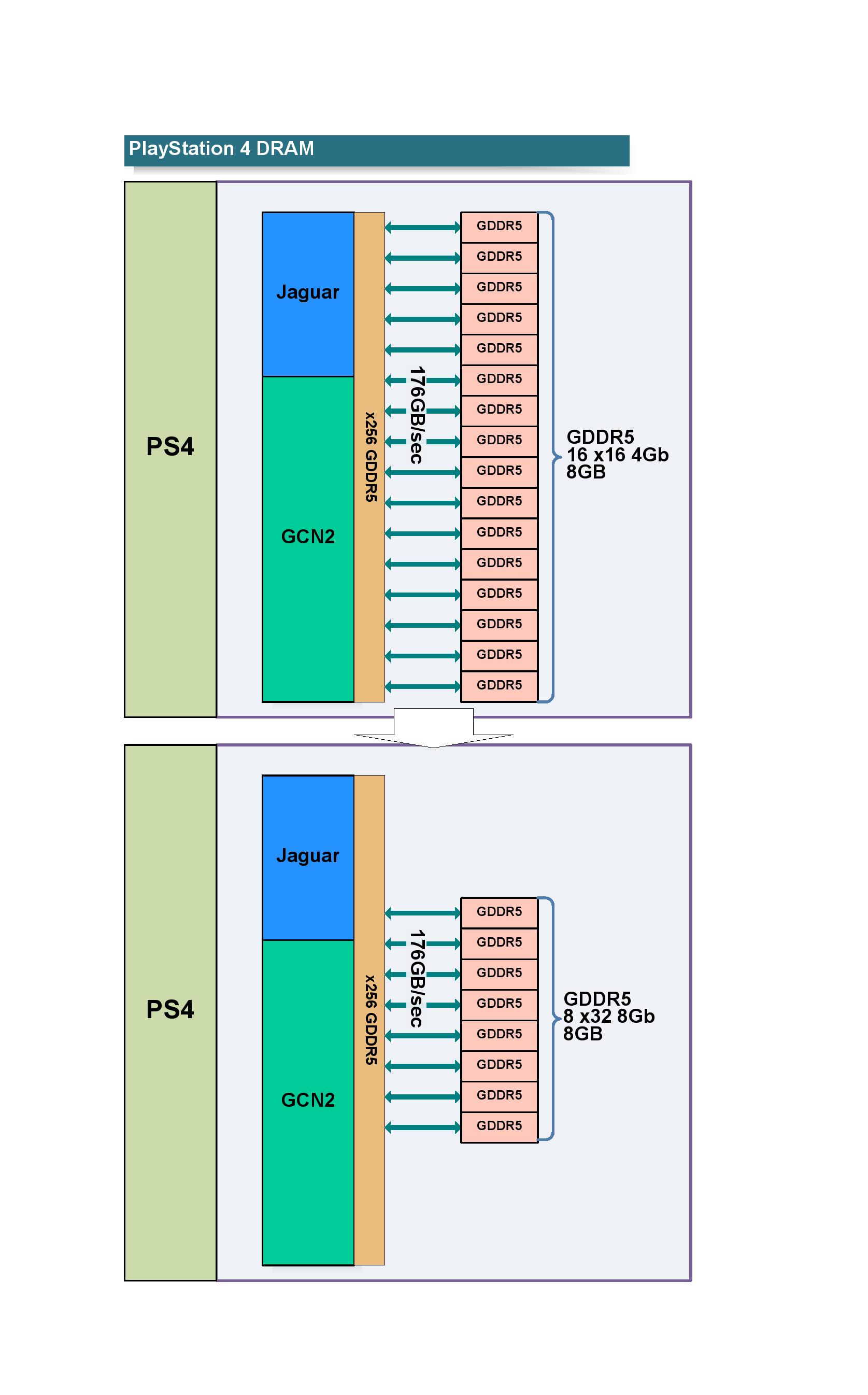

MEMORY

| In the case of the PS4, however, the number of DRAM chips can be halved to eight. Because GDDR5 supports both x32 and x16 modes, the number of DRAM chips can be reduced by widening each chip's interface on the same bus. Looking at the APU's 256-bit interface, the current configuration connects sixteen 4G-bit GDDR5 chips in x16 mode, for a total of 8GB across 16 chips. This can be switched to a configuration of eight chips in x32 mode once 8G-bit GDDR5 products are out. Incidentally, had the PS4 carried over XDR DRAM from the PS3, the chip count could have been reduced to two by switching main memory to the faster XDR2 DRAM. |

Yep, there is a discussion about whether Sony will use the 4Gb GDDR5 modules (512MB each), in mass production since early 2013, or the new 8Gb GDDR5 modules (1GB each), which are not in production yet... I think the first PS4 will use the 4Gb modules and later models will move to the 8Gb modules to reduce costs... the difference is 16 modules for 8GB versus 8 modules for 8GB (a lot cheaper).
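
To make the module math concrete, here is a quick sketch of the two configurations from the quote. The 256-bit bus, the chip densities and the x16/x32 modes come from the article; the class and variable names are just mine for illustration, and this is only arithmetic, not anything Sony has confirmed.

```python
# Illustrative arithmetic only; the numbers come from the quoted article.
from dataclasses import dataclass

BUS_WIDTH_BITS = 256  # APU memory interface width, per the quote


@dataclass(frozen=True)
class Gddr5Config:
    chip_density_gbit: int   # capacity of one chip, in gigabits
    chip_io_width_bits: int  # 16 for x16 mode, 32 for x32 mode

    @property
    def chip_count(self) -> int:
        # Every chip contributes its I/O width to the shared 256-bit bus.
        return BUS_WIDTH_BITS // self.chip_io_width_bits

    @property
    def total_gbytes(self) -> float:
        # 8 gigabits per gigabyte.
        return self.chip_count * self.chip_density_gbit / 8


launch = Gddr5Config(chip_density_gbit=4, chip_io_width_bits=16)
later = Gddr5Config(chip_density_gbit=8, chip_io_width_bits=32)

for name, cfg in (("4Gb x16", launch), ("8Gb x32", later)):
    print(f"{name}: {cfg.chip_count} chips, {cfg.total_gbytes:g} GB")
```

Both setups fill the same 256-bit bus and reach the same 8GB; only the chip count changes (16 versus 8).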

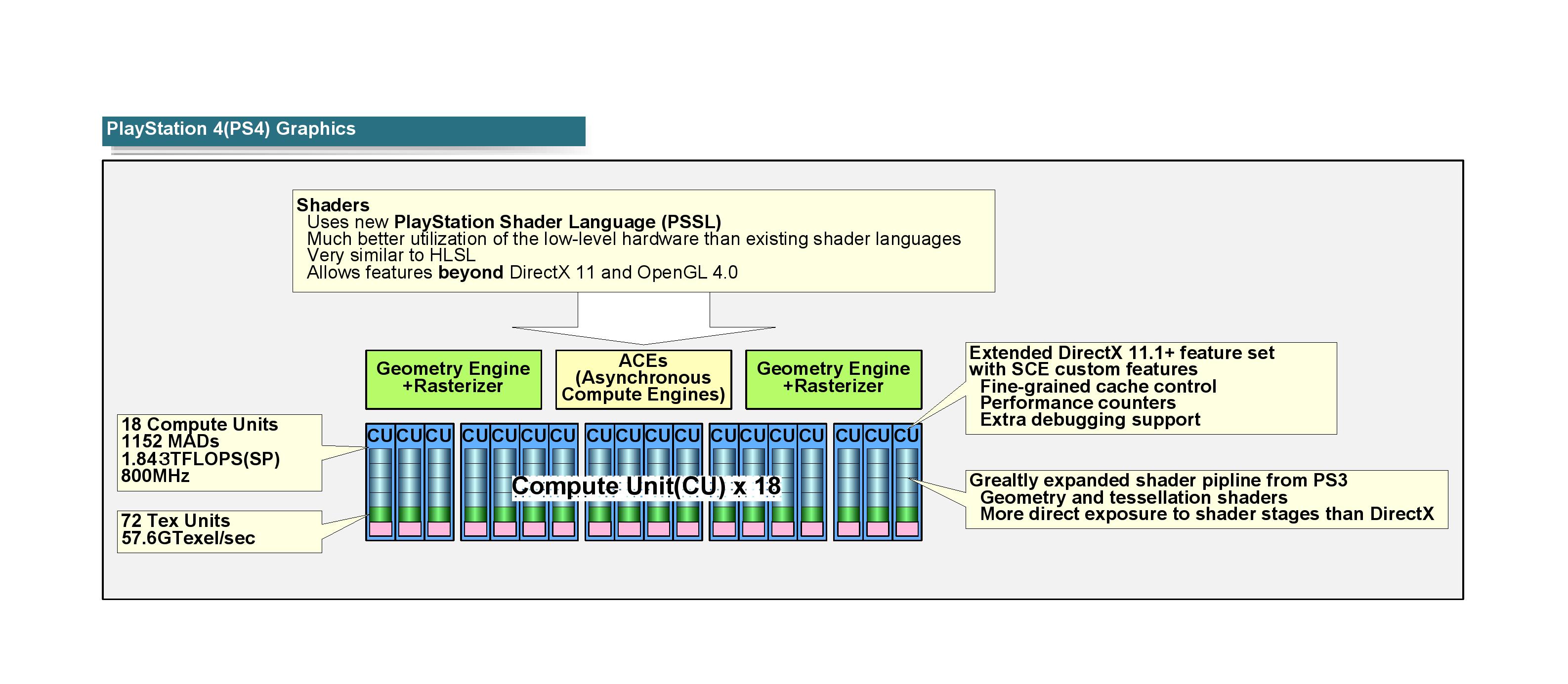

GPU

Now the most important and coolest stuff... the new customized GPU, with tweaks and features that will come to PC in AMD's GCN 2.0 late this year (or early 2014).

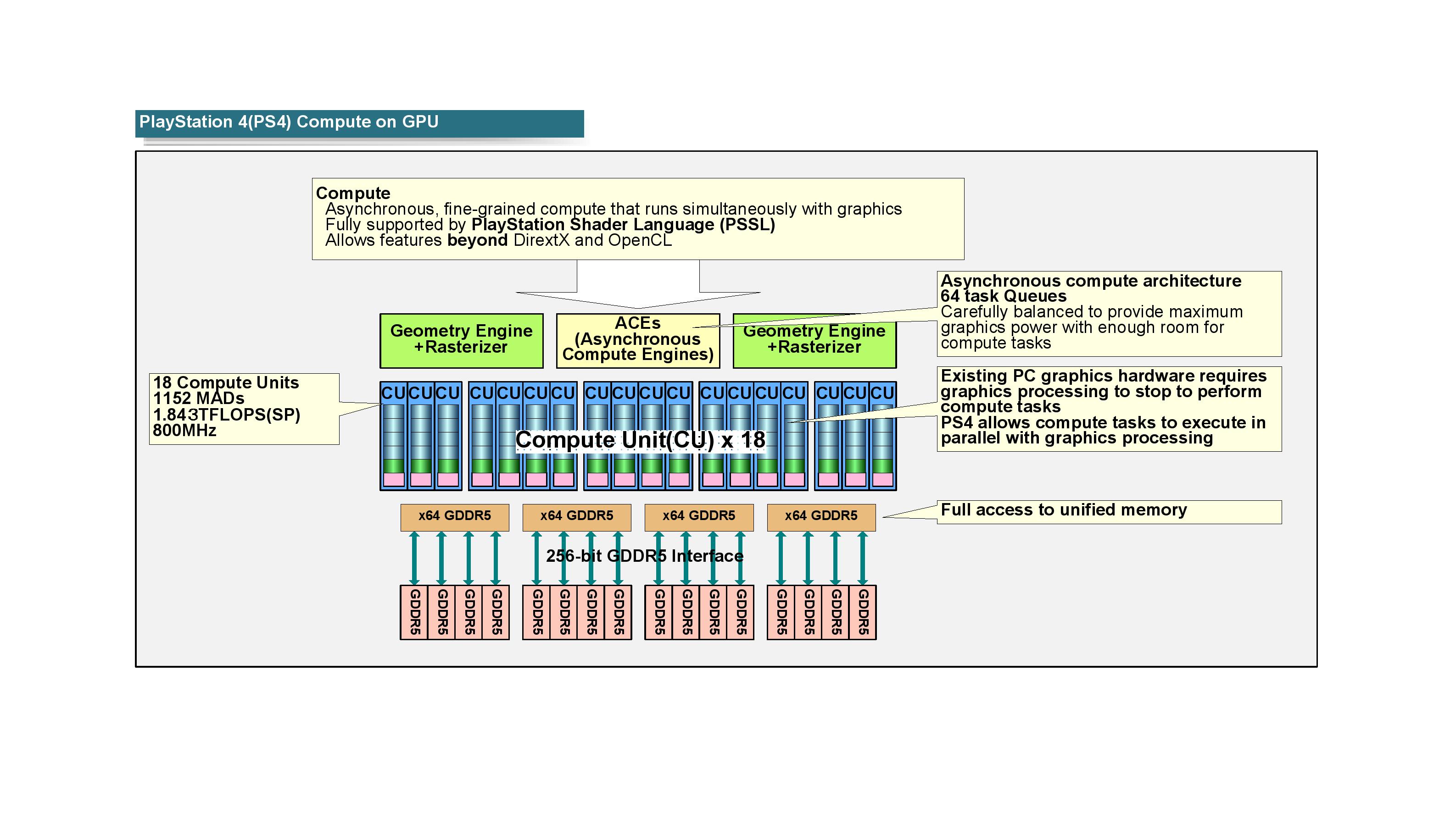

| For compute on the GPU, when the granularity of the problem is fine and each compute task is relatively small, many tasks have to be issued and controlled. For that reason, the PS4 gives the ACEs 64 queues, so that 64 streams of tasks can be issued and controlled. This is a major difference from AMD's normal GPUs; accordingly, compute on the PS4 is flexible enough to use the GPU cores at a fine granularity. It has become easier to use them in a way similar to the PS3, where separate threads ran on each SPU. |

And what about the compute part of the GPU? That is the most amazing feature AMD put in the PS4 GPU... GPGPU work can run in parallel with graphics without losing performance... that is magical.
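
Just to picture the "many small tasks across 64 queues" idea from the quote, here is a toy model. Only the number 64 comes from the article; the round-robin policy, the function names and the task list are my own assumptions, since the source does not say how the hardware actually picks which queue goes next.

```python
# Toy model only: real ACE arbitration is not described in the source.
from collections import deque

NUM_QUEUES = 64  # queue count quoted for the PS4's ACEs


def distribute(tasks, num_queues=NUM_QUEUES):
    """Spread small compute tasks across the queues in round-robin order."""
    queues = [deque() for _ in range(num_queues)]
    for i, task in enumerate(tasks):
        queues[i % num_queues].append(task)
    return queues


def drain_round_robin(queues):
    """Issue one task per non-empty queue per pass until all are empty."""
    issued = []
    while any(queues):
        for q in queues:
            if q:
                issued.append(q.popleft())
    return issued


tasks = [f"task{i}" for i in range(200)]  # hypothetical fine-grained jobs
queues = distribute(tasks)
depths = [len(q) for q in queues]         # backlog per queue before issuing
order = drain_round_robin(queues)

print(f"deepest queue: {max(depths)} tasks, issued: {len(order)}")
```

With 200 small tasks no queue ever holds more than 4 of them, which is the point of having many queues: a lot of tiny jobs can be kept in flight without one long line forming.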

API

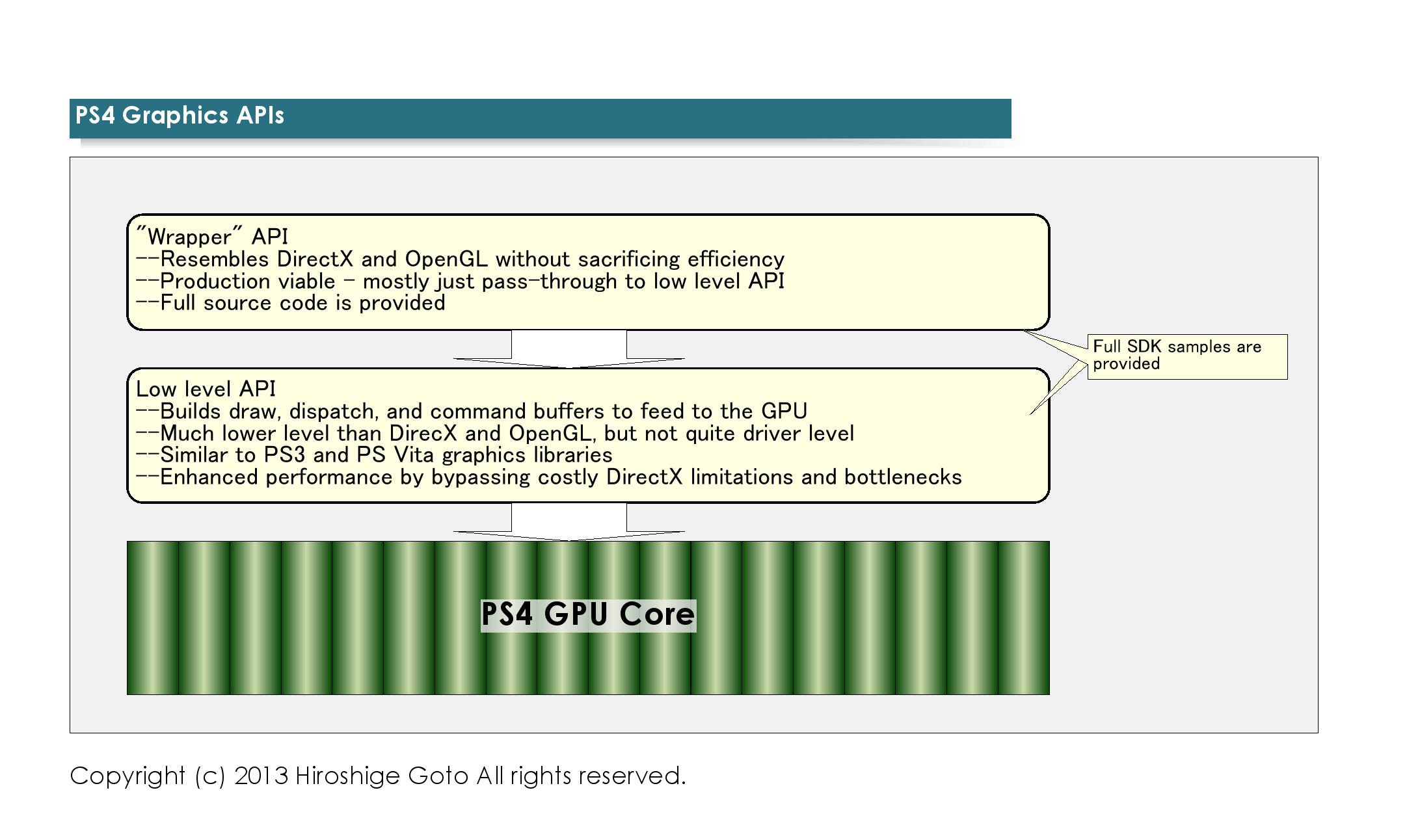

With the "Wrapped" API you can use Microsoft Visual Studio with DirectX or OpenGL... that is easy and gets translated to the low-level API automatically... so a developer can create a game for PC with DirectX and just build it for PS4.

But the magic for graphics is in the Low Level API... it exists only on PS4, to use all the power behind the architecture... you can expect first-party exclusive games to use this API to take their graphics to another level.

MACRO COMPARISON

To finish, a macro comparison between all the PlayStation consoles.