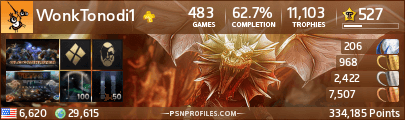

I am the author of that blog.

I simply went to Metacritic and sorted the games (PS3/360/multiplatform) from highest-ranked to lowest, then picked the 30 highest-scoring ones for each. Some games are omitted because THERE WAS NO EDGE REVIEW of them, such as God of War Collection or Street Fighter IV. I picked everything that had an Edge review. It seems I forgot Infinite Undiscovery, but I'll add it, no problem; it won't change the graphs. I am human and made this all by myself, so errors may crop up.

I simply computed the difference between each game's Edge score and its Metacritic score and made the charts. So I did not pick the games at random; I picked them from the highest Metacritic ranking down.
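For anyone who wants to redo this, here is a minimal sketch of the selection and difference steps described above. It is not the author's actual workflow. The `Game` type, the function names and the scores are hypothetical placeholders. It also ASSUMES Edge's 10-point score is put on Metacritic's 100-point scale by multiplying by 10; the post doesn't say how the two scales were lined up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Game:
    title: str
    metascore: int                 # Metacritic score on its 0-100 scale
    edge_score: Optional[int]      # Edge score out of 10, or None if Edge never reviewed it


def top_reviewed(games: List[Game], n: int = 30) -> List[Game]:
    """Sort by Metacritic score, highest first, skip games with no Edge review, keep the first n."""
    ranked = sorted(games, key=lambda g: g.metascore, reverse=True)
    return [g for g in ranked if g.edge_score is not None][:n]


def edge_minus_metacritic(game: Game) -> int:
    """Edge score minus Metacritic score, ASSUMING Edge's 10-point score maps to the
    100-point scale by multiplying by 10 (a hypothetical choice, not stated in the post)."""
    return game.edge_score * 10 - game.metascore


# Hypothetical placeholder games and scores, for illustration only.
sample = [
    Game("Game A", 95, 9),
    Game("Game B", 94, None),   # no Edge review, so it is skipped
    Game("Game C", 90, 10),
    Game("Game D", 85, 7),
]

chosen = top_reviewed(sample, n=2)
diffs = [edge_minus_metacritic(g) for g in chosen]
print([g.title for g in chosen], diffs)
```

Filtering after sorting means a game with no Edge review, like "Game B" here, doesn't use up one of the 30 slots. The next-ranked reviewed game takes its place, which matches "the top 30 THAT EDGE REVIEWED".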

Metacritic is the most objective method of "ranking" games, since the really high and low reviews get lower weights in the average; at least, that's what Metacritic says. If you can find a better yardstick, I'll compare Edge to that.

You may ask why only the top 30 games. It's because those are the games that sell the most, get advertised the most and spend the most time in the spotlight. Not many people read the reviews of Battlestations Midway or Folklore (both are included because they are in the top 30) compared to ODST and God of War 3.

I could easily take a random sample of 20 games; with 20 games out of 100 or so exclusives, that would give a 99% confidence interval of less than one point. However, judging by sales, neither I nor many others care about games ranked below the top 30, so choosing the top 30 is valid. I chose the top 30 THAT EDGE REVIEWED. It's hard to compare Metacritic to Edge when there is no Edge review, isn't it?
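Whether 20 random games out of about 100 really gives a 99% interval under one point depends on how spread out the Edge-minus-Metacritic differences are, and the post doesn't give that number. The sketch below only shows how the margin depends on it. The standard deviation is an ASSUMED input. "Less than one point" is read as the ± half-width. The default `t_crit` of 2.861 is the standard two-sided 99% Student t value for 19 degrees of freedom (n = 20).

```python
import math


def ci_half_width(sd: float, n: int, population: int, t_crit: float = 2.861) -> float:
    """Half-width of a confidence interval for the mean of a simple random sample,
    drawn without replacement, using the finite population correction.

    t_crit defaults to the two-sided 99% Student t critical value for 19 degrees
    of freedom (about 2.861), so the default only fits n = 20.
    """
    fpc = math.sqrt((population - n) / (population - 1))
    return t_crit * sd / math.sqrt(n) * fpc


# 20 games sampled from roughly 100 exclusives, as in the post.
# The standard deviation of the score differences is an ASSUMED input.
for sd in (1.0, 1.5, 2.0):
    print(sd, round(ci_half_width(sd, n=20, population=100), 2))
```

With these inputs the margin stays under one point only while the standard deviation of the differences is below roughly 1.7 points. The claim holds or fails depending on that spread, measured on whatever scale the differences are charted.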
