Pemalite said:

When you conclude that "Gigaflops" is the single defying denominator that determines a devices performance... Then you have already lost the argument.

|

""

Er, the amount of "Gigaflops" has absolutely ZERO correllation with Tessellation performance, Tessellation does not and will not rely on the shader hardware to actually be performed.

GPU's have fixed function hardware that is dedicated to doing tasks such as Tessellation.

However, the thing with the WiiU is that it's using AMD's 6th generation Tessellator, which is woefull in it's geometry performance.

""

seriously?

you realize that tesselation must be used in conjuction with dispalcement right?

here

https://developer.nvidia.com/content/vertex-texture-fetch

"

Vertex Texture Fetch

DOWNLOAD

| FILE | DESCRIPTION | SIZE |

|---|---|---|

| Vertex_Textures.pdf | With Shader Model 3.0, GeForce 6 and GeForce 7 Series GPUs have taken a huge step towards providing common functionality for both vertex and pixel shaders. This paper focuses specifically on one Shader Model 3.0 feature: Vertex Texture Fetch (PDF). It allows vertex shaders to read data from textures, just like pixel shaders can. This additional feature is useful for a number of effects, including displacement mapping, fluid and water simulation, explosions, and more. The image below shows the visual impact of adding vertex textures, comparing an ocean without (left) and with (right) vertex textures. |

"

you relize that vertex fetches are related to power right?

here

http://moodle.technion.ac.il/pluginfile.php/126883/mod_resource/content/0/lessons/Lecture7.x4.pdf

"

"

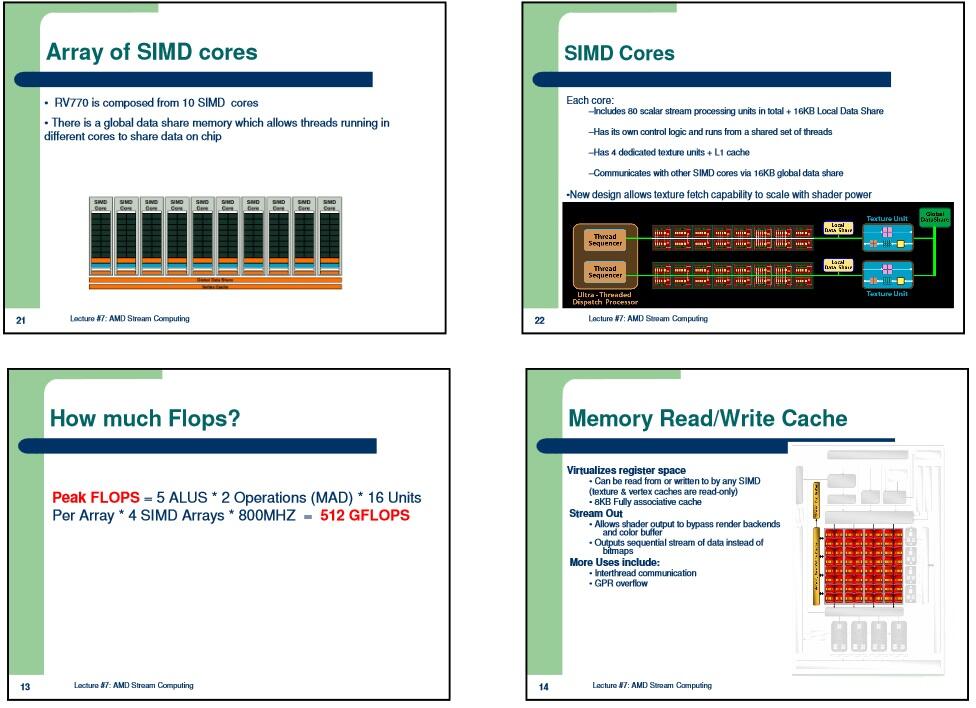

never heard of tesselation stages right?

vertex and pixel shaders, and how does amd handle the vertex and pixel shaders?

tthough the SIMD CORES, and simd cores have stream processors and at certain clock speed that gives us?

gigaflops

bandwidth is also related to shader power, if there is not enough bandwidth you cant handle the shader power, you think nintendo would put so much bandwidth for 176gigaflops?

no

here

http://www.openglsuperbible.com/2014/01/24/memory-bandwidth-and-vertices/

"

Memory Bandwidth and Vertices

Memory bandwidth is a precious commodity. As far as graphics

cards are concerned, this is the rate at which the GPU can transfer data

to or from memory and is measured in bytes (or more likely, gigabytes)

per second. The typical bandwidth of modern graphics hardware can range

anywhere from 20 GB/s for integrated GPUs to over 300 GB/s for

enthusiast products. However, with add-in boards, data must cross the

PCI-Express bus (the connector that the board plugs into), and its

bandwidth is typically around 6 GB/s. Memory bandwidth affects fill rate

and texture rate, and these are often quoted as performance figures

when rating GPUs. One thing that is regularly overlooked, though, is the

bandwidth consumed by vertex data.

Vertex Rates

Most modern GPUs can process at least one vertex per clock cycle.

GPUs are shipping from multiple vendors that can process two, three,

four and even five vertices on each and every clock cycle. The core

clock frequency of these high end GPUs hover at around the 1 GHz mark,

which means that some of these beasts can process three or four billion

vertices every second. Just to put that in perspective, if you had a

single point represented by one vertex for every pixel on a 1080p

display, you’d be able to fill it at almost 2000 frames per second. As

vertex rates start getting this high, we need to consider the amount of

memory bandwidth required to fill the inputs to the vertex shader.

Let’s assume a simple indexed draw (the kind produced by glDrawElements)

with only a 3-element floating-point position vector per-vertex. With

32-bit indices, that’s 4 bytes for each index and 12 bytes for each

position vector (3 elements of 4 bytes each), making 16 bytes per

vertex. Assuming an entry-level GPU with a vertex rate of one vertex

per-clock and a core clock of 800 MHz, the amount of memory bandwidth

required works out to 12 GB/s (16 * 800 * 10^6). That’s twice the

available PCI-Express bandwidth, almost half of a typical CPU’s system

memory bandwidth, and likely a measurable percentage of our hypothetical

entry-level GPU’s memory bandwidth. Strategies such as vertex reuse can

reduce the burden somewhat, but it remains considerable.

High Memory Bandwidth

Now, let’s scale this up to something a little more substantial. Our

new hypothetical GPU runs at 1 GHz and processes four vertices per

clock cycle. Again, we’re using a 32-bit index, and three 32-bit

components for our position vector. However, now we add a normal vector

(another 3 floating-point values, or 12 bytes per vertex), a tangent

vector (3 floats again), and a single texture coordinate (2 floats). In

total, we have 48 bytes per vertex (4 + 12 + 12 + 12 + 8), and 4 billion

vertices per-second (4 vertices per-clock at 1 GHz). That’s 128 GB/s of

vertex data. That’s 20 times the PCI-express bandwidth, several times

the typical CPU’s system memory bandwidth (the kind of rate you’d get

from memcpy). Such a GPU might have a bandwidth of around 320 1 GB/s. That kind of vertex rate would consume more than 40% of the GPU’s total memory bandwidth.

Clearly, if we blast the graphics pipeline with enough raw vertex

data, we’re going to be sacrificing a substantial proportion of our

memory bandwidth to vertex data. So, what should we do about this?

Optimization

Well, first, we should evaluate whether such a ridiculous amount of

vertex data is really necessary for our application. Again, if each

vertex produces a single pixel point, 4 vertices per clock at 1 GHz is

enough to fill a 1080p screen at 2000 frames per second. Even at 4K

(3840 * 2160), that’s still enough single points to produce 480 frames

per second. You don’t need that. Really, you don’t. However, if you

decide that yes, actually, you do, then there are a few tricks you can

use to mitigate this.

- Use indexed triangles (either GL_TRIANGLE_STRIP or GL_TRIANGLES).

If you do use strips, keep them as long as possible and try to avoid

using restart indices, as this can hurts parallelization. - If you’re using independent triangles (not strips), try to order

your vertices such that the same vertex is referenced several times in

short succession if it is shared by more than one triangle. There are

tools that will re-order vertices in your mesh to make better use of GPU

caches. - Pick vertex data formats that make sense for you. Full 32-bit

floating point is often overkill. 16-bit unsigned normalized texture

coordinates will likely work great assuming all of your texture

coordinates are between 0.0 and 1.0, for example. Packed data formats

also work really well. You might choose GL_INT_2_10_10_10_REV for normal and tangent vectors, for example. - If your renderer has a depth only pass, disable any vertex attributes that don’t contribute to the vertices’ position.

- Use features such as tessellation to amplify geometry, or techniques

such as parallax occlusion mapping to give the appearance of higher

geometric detail.

In the limit, you can forgo fixed-function vertex attributes, using

only position, for example. Then, if you can determine that for a

particular vertex you only need a subset of the vertex attributes, fetch

them explicitly from shader storage buffers. In particular, if you are

using tessellation or geometry shaders, you have access to an entire

primitive in one shader stage. There, you can perform simple culling

(project the bounding box of the primitive into screen space) using only

the position of each vertex and then only fetch the rest of the vertex

data for vertices that are part of a primitive that will contribute to

the final scene.

Summary

Don’t underestimate the amount of pressure that vertex data can put

on the memory subsystem of your GPU. Fat vertex attributes coupled with

high geometric density can quickly eat up a double-digit percentage of

your graphics card’s memory bandwidth. Dynamic vertex data that is

modified regularly by the CPU can burn through available PCI bandwidth.

Optimizing for vertex caches can be a big win with highly detailed

geometry. While we often worry about shader complexity, fill rates,

texture sizes and bandwidth, we often overlook the cost of vertex

processing — bandwidth is not free.

"

or maybe you prefer this explanation

http://www.ign.com/articles/2005/05/20/e3-2005-microsofts-xbox-360-vs-sonys-playstation-3?page=3

"

Bandwidth

The PS3 has 22.4 GB/s of GDDR3 bandwidth and 25.6 GB/s of RDRAM bandwidth for a total system bandwidth of 48 GB/s.

The Xbox 360 has 22.4 GB/s of GDDR3 bandwidth and a 256 GB/s of EDRAM bandwidth for a total of 278.4 GB/s total system bandwidth.

Why does the Xbox 360 have such an extreme amount of bandwidth?

Even the simplest calculations show that a large amount of bandwidth is consumed by the frame buffer. For example, with simple color rendering and Z testing at 550 MHz the frame buffer alone requires 52.8 GB/s at 8 pixels per clock. The PS3's memory bandwidth is insufficient to maintain its GPU's peak rendering speed, even without texture and vertex fetches.

The PS3 uses Z and color compression to try to compensate for the lack of memory bandwidth. The problem with Z and color compression is that the compression breaks down quickly when rendering complex next-generation 3D scenes.

"

you see?

bandwidth is important also fro cmpute power, why would nintnedo put so much bandwidth if the system wouldnt require it?

why ports run in wiiu if its less powerful and even accounting the more efficient architecture you still have the issue that is a port not a ground up game, the port is lazy, developers not ued to the new hardware and using a engine that wasnt optimized for wiiu just made compatible. you can clearly see how much a port hurts performance in bayoneta of ps3 and assesins creed 4 for ps4, wii u i no different

developers already mentioend that wii u is about 50% ore powerful than 360 and ps3 and thats was based on an early dev kit cause the final one came out in 2012 and the article was made at the middle of 2011

crytek already said that wii u power is on par with the 360 and ps3

shinen mentioend that wii u is powerful machine more than 360 and ps3

and so goes

the moment bayo for ps3 had half the framerate than 360 because was a port and the fact that develoeprs struggled to achieve 1080p 30fps in assesins creed 4 for ps4 and previously had it at 900p also tells you hat no matter how modern is the new harwdare and more powerful, alway making a port from an older machne will limit your new system, and ps4 is more modern and easy due to the x86 and having no edram for developers to have hard times in development and still runned at 900p 30fps eventhough ps4 is 7.5x mor powerful than xbox 360 and for 108060fps you would require less than half that power to achieve 1080p 60fps and even with the patc is running at 1080p 30fps

if the performance of the ps4 was hurt due to those issues with the port, then why would wii u would be so diferent?

sorry dude, you already lost the argument, nintndo makes balanced systms, they aint gonna put bandwidth going to waste

563.2GB/s or a terabyte of bandwidth isnt impossible, already gamecube had 512bit of bus width with 1MB of edram, so why wii u being more than a decade more modern could not pack 1024bits(563.2GB/s for the 8 macros) or 2048bits(more than a terabyte for 8 macros) in a block of 4MB?

do the math