Asus AXE7800 review - Read the fine print to make sure it works for your use case

Why do these routers have so many antennas?

Wifi 6, and especially Wifi 6E, needs antennas for all the additional bands and channels. In the past, you only needed dual band, but with Wifi 6E you really need triple band because of the new 6GHz band. If you buy a dual band Wifi 6E router, you have to pick which 2 of the 3 bands (2.4GHz, 5GHz and 6GHz) to keep enabled. At that point, it's likely better to buy a dual band Wifi 6 router than a dual band Wifi 6E one, for reasons I'll explain below.

What is Wifi 6E and is it worth it?

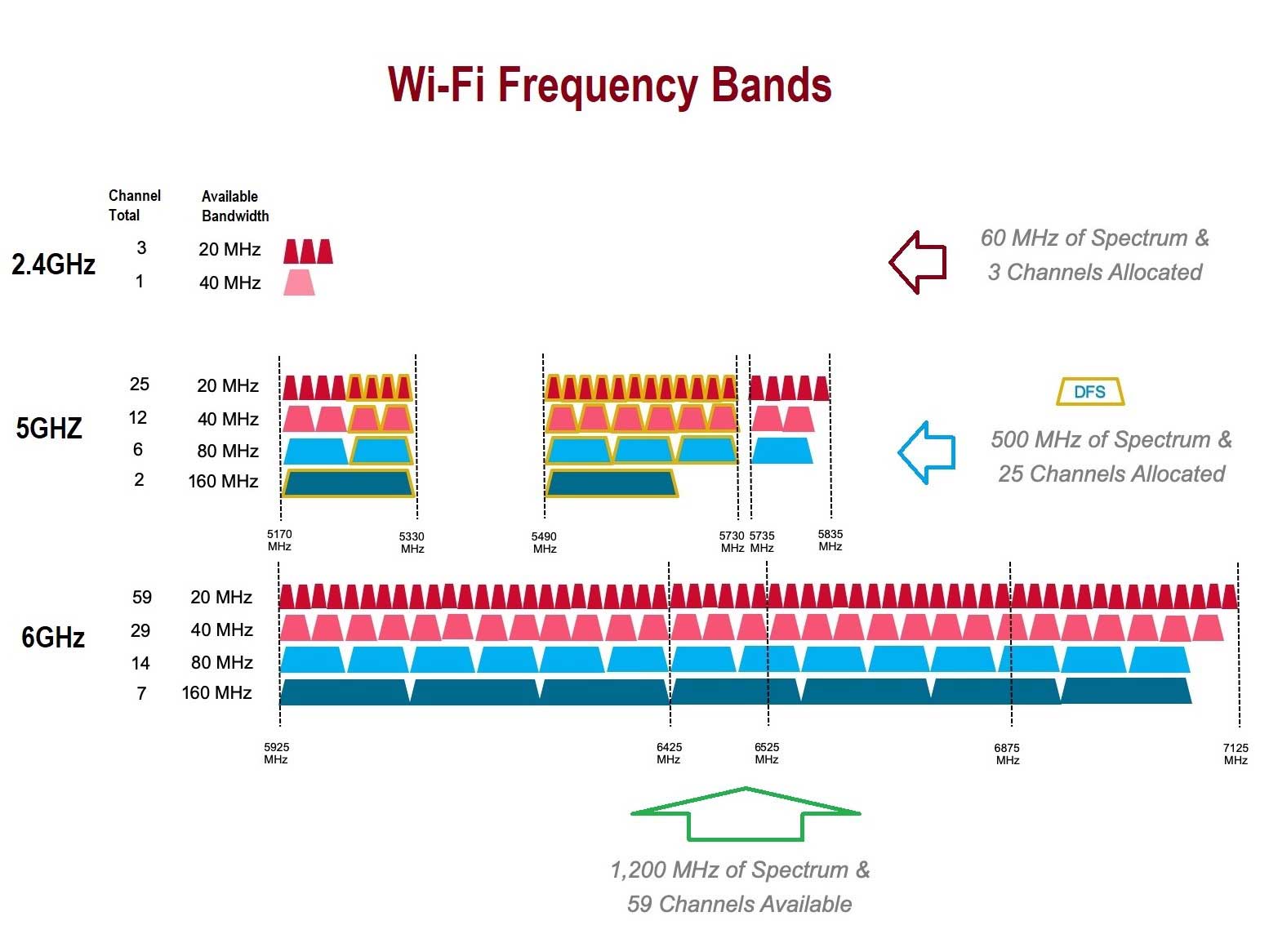

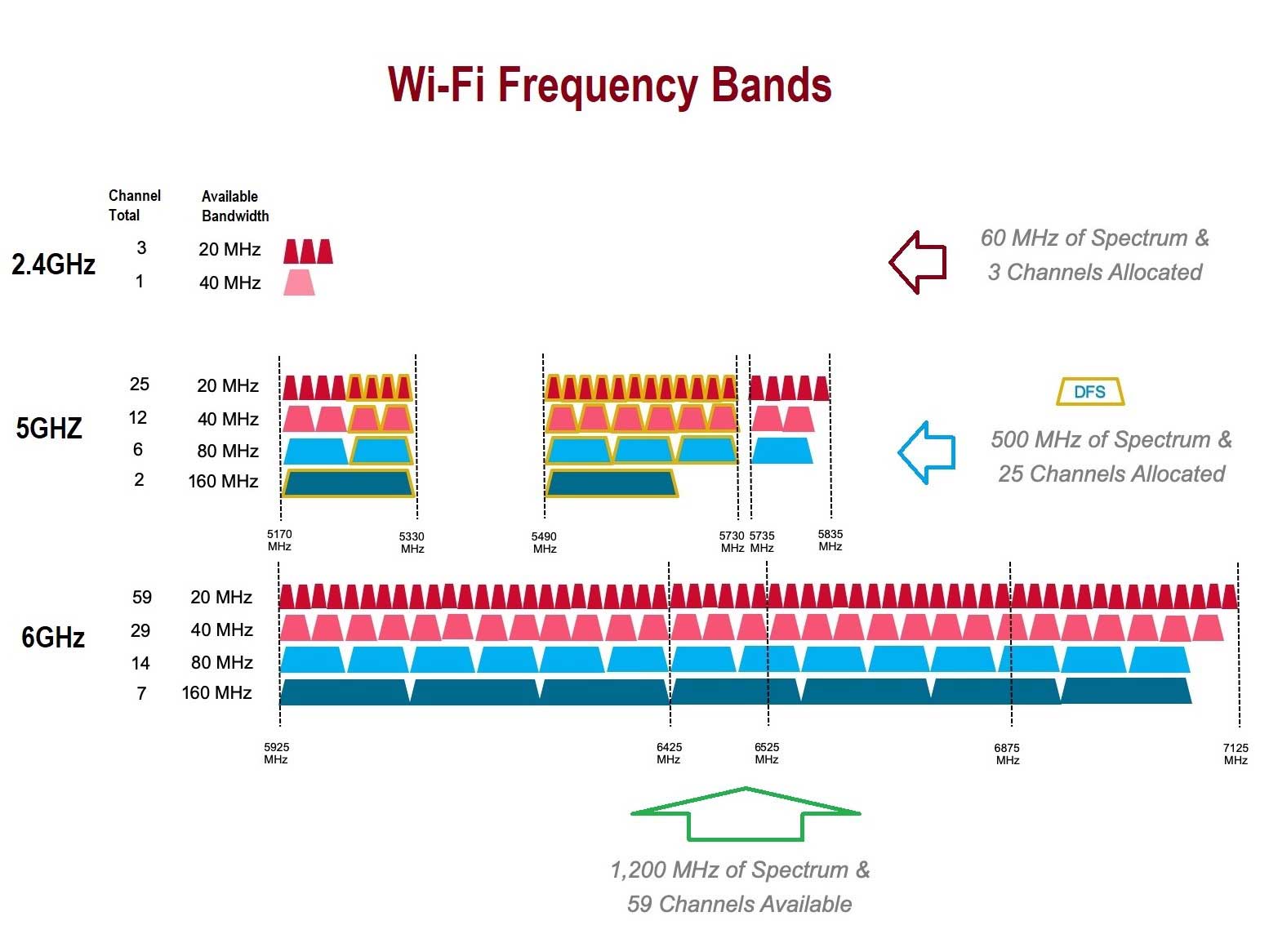

Without getting too deep into it, Wifi 6 (non-E) made the 160MHz channel width on the 5GHz band much more common (160MHz channels were technically introduced with Wifi 5, but few devices supported them). A wider channel carries more data, so it gives higher bandwidth than the older 20/40/80MHz widths, but it is also more susceptible to interference than the narrower widths. Wifi 6E, on the other hand, adds an entirely new 6GHz band, which also supports 20/40/80/160MHz channel widths. This means clients and devices connecting over Wifi 6E have an entire band of channels to themselves, whereas older devices have to fight over the congested 2.4GHz and 5GHz bands. Wifi 6E also has far more room for fast, wide channels: Wifi 6 on 5GHz has only 2 channels at 160MHz, while 6E has 7 channels at 160MHz. The pictures above should give you a better idea. There's also other stuff like MIMO/MU-MIMO, beamforming, etc., but no one has time for that.
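To see where the 2 vs 7 figure for 160MHz channels comes from, here is a small Python sketch that counts non-overlapping channels in the 6GHz band. It assumes the standard 6GHz numbering (20MHz channels 1, 5, 9 and so on up to 233, with a center frequency of 5950 + 5 × channel MHz), where wider channels are built by bonding consecutive 20MHz channels starting from channel 1. The two 5GHz 160MHz channels (36-64 and 100-128) are simply hard-coded from the usual channel plan rather than derived, and regional rules can differ.

```python
# Sketch: count bonded channels in the 6GHz band, assuming the standard
# 6GHz numbering where 20MHz channels are 1, 5, 9, ..., 233.
SIX_GHZ_20MHZ_CHANNELS = list(range(1, 234, 4))  # 59 primary 20MHz channels

# 160MHz channels on 5GHz, hard-coded from the common channel plan
# (36-64 centered on 50, 100-128 centered on 114); not derived here.
FIVE_GHZ_160MHZ_CENTERS = [50, 114]


def six_ghz_channels(width_mhz: int) -> list[int]:
    """Return center channel numbers of non-overlapping channels of width_mhz."""
    per_group = width_mhz // 20  # how many 20MHz channels get bonded together
    groups = len(SIX_GHZ_20MHZ_CHANNELS) // per_group  # leftovers can't form a full channel
    centers = []
    for g in range(groups):
        block = SIX_GHZ_20MHZ_CHANNELS[g * per_group:(g + 1) * per_group]
        centers.append((block[0] + block[-1]) // 2)  # center sits midway across the block
    return centers


def center_freq_mhz(channel: int) -> int:
    """Center frequency of a 6GHz channel number, in MHz."""
    return 5950 + 5 * channel


if __name__ == "__main__":
    for width in (20, 40, 80, 160):
        print(f"6GHz {width}MHz: {len(six_ghz_channels(width))} channels")
    print(f"5GHz 160MHz: {len(FIVE_GHZ_160MHZ_CENTERS)} channels")
    print("6GHz 160MHz centers:", six_ghz_channels(160))
```

Running it prints 59, 29, 14 and 7 channels for 20, 40, 80 and 160MHz in the 6GHz band, versus 2 at 160MHz on 5GHz.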

There is a kicker, however. Wifi 6E has fairly short range, largely because the 6GHz signal penetrates walls much worse. My room is on the top floor of my house, which is where the router is; below that is the ground floor, and below that is the basement where the network PC is. If I go to the washroom, which is right outside my room on the same floor, I lose half the wifi bars on Wifi 6E and the speed drops from 750Mbps in my room to 300-400Mbps. Meanwhile, Wifi 6 on the 5GHz band reaches all the way to the basement where my network PC is and still shows full bars, although the speed there is 200-300Mbps. If I switch to 2.4GHz, I get about 50Mbps in the basement, and the Wifi 6E signal doesn't reach there at all. In fact, 6E doesn't even reach my living room from the router, while 5GHz reaches all the way to the yard.

So it's beginning to sound like Wifi 6E is a scam, but really it's all about congestion. Even if, say, only 3 devices are close enough to use the Wifi 6E band, those devices no longer congest the 5GHz band, so the devices that stay on 5GHz get faster wifi in turn.
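The congestion argument can be put into rough numbers. This is a deliberately simplified Python model, not how a real router schedules traffic: it assumes a band's capacity is split evenly between the devices actively using it and ignores OFDMA, MU-MIMO, mixed client speeds and interference. The function names, capacity and device counts are all hypothetical.

```python
# Toy model (assumption): a band's throughput is shared evenly by the devices
# actively using it. Real Wifi is more complicated; numbers are illustrative.

def per_device_share(band_capacity_mbps: float, active_devices: int) -> float:
    """Throughput each active device gets if the band's airtime is split evenly."""
    if active_devices <= 0:
        raise ValueError("need at least one active device on the band")
    return band_capacity_mbps / active_devices


def offload_effect(capacity_5ghz_mbps: float, devices_on_5ghz: int,
                   moved_to_6ghz: int) -> tuple[float, float]:
    """Per-device 5GHz share before and after moving some devices onto 6GHz."""
    before = per_device_share(capacity_5ghz_mbps, devices_on_5ghz)
    after = per_device_share(capacity_5ghz_mbps, devices_on_5ghz - moved_to_6ghz)
    return before, after


if __name__ == "__main__":
    # Hypothetical numbers, not measurements from my house.
    before, after = offload_effect(1200, 8, 3)
    print(f"5GHz share per device: {before:.0f}Mbps before, {after:.0f}Mbps after")
```

With a hypothetical 1200Mbps of usable 5GHz capacity and 8 busy devices, `offload_effect(1200, 8, 3)` returns `(150.0, 240.0)`: moving 3 of them to 6GHz raises each remaining 5GHz device's share from 150Mbps to 240Mbps.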

The Fine Print

Now, if you thought Wifi 6E's short range was the fine print, there is something more interesting you have to be aware of: the antenna configuration. My AC86U, for example, was a Wifi 5 router with 3x3 2.4GHz and 4x4 5GHz. My AXE7800, on the other hand, has 2x2 2.4GHz, 4x4 5GHz Wifi 6 and 2x2 6GHz. This means that 2.4GHz clients with 3-4 antennas would actually be slower on my AXE7800 than on my AC86U. In practice, though, the 5GHz band covers my entire house, and I don't have any 2.4GHz-only devices. And while 4x4 5GHz might sound the same on both routers, the AXE7800's 5GHz band is a lot faster in practice and even on paper, thanks to Wifi 6's 160MHz channels, MU-MIMO and other tech.
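To make the antenna numbers concrete, here is a Python sketch of the theoretical Wifi 6 (802.11ax) PHY rate at the top modulation (MCS 11: 1024-QAM, 5/6 coding, 0.8µs guard interval). Each spatial stream adds the same rate, and a link only gets as many streams as the weaker side supports, which is why a 3-antenna 2.4GHz client is held to 2 streams on a 2x2 radio. The channel width per band (40MHz on 2.4GHz, 160MHz on 5GHz and 6GHz) is my assumption about how the "AXE7800" label is calculated, and the formula does not cover the Wifi 5 AC86U. These are theoretical maximums, not real-world speeds.

```python
# Sketch: theoretical 802.11ax PHY rate at MCS 11 with the 0.8us guard interval.
DATA_SUBCARRIERS = {20: 234, 40: 468, 80: 980, 160: 1960}  # 802.11ax data tones per width
BITS_PER_SUBCARRIER = 10    # 1024-QAM carries 10 bits per subcarrier
CODING_RATE = 5 / 6
SYMBOL_US = 12.8 + 0.8      # 12.8us OFDM symbol plus 0.8us guard interval


def he_phy_rate_mbps(width_mhz: int, streams: int) -> float:
    """Theoretical PHY rate for a channel width and number of spatial streams."""
    bits_per_symbol = DATA_SUBCARRIERS[width_mhz] * BITS_PER_SUBCARRIER * CODING_RATE
    return bits_per_symbol * streams / SYMBOL_US  # bits per microsecond == Mbps


def link_rate_mbps(width_mhz: int, router_streams: int, client_streams: int) -> float:
    """A link can only use as many spatial streams as the weaker side supports."""
    return he_phy_rate_mbps(width_mhz, min(router_streams, client_streams))


# Hypothetical band setup for the AXE7800: (channel width MHz, streams).
# The widths are assumptions, not official specs.
AXE7800_BANDS = {"2.4GHz": (40, 2), "5GHz": (160, 4), "6GHz": (160, 2)}

if __name__ == "__main__":
    total = 0.0
    for band, (width, streams) in AXE7800_BANDS.items():
        rate = he_phy_rate_mbps(width, streams)
        total += rate
        print(f"{band}: {streams}x{streams} @ {width}MHz -> {rate:.0f}Mbps")
    print(f"Total: {total:.0f}Mbps")
    print(f"3-antenna 2.4GHz client: {link_rate_mbps(40, 2, 3):.0f}Mbps")
```

Under those assumptions the three bands come out to about 574, 4804 and 2402Mbps, which add up to roughly 7780Mbps, the "7800" class in the name. `link_rate_mbps(40, 2, 3)` shows the 3-antenna 2.4GHz client being held to the 2-stream rate of about 574Mbps.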

Should you get Wifi 6E or buy a cheaper Wifi 6 router?

I think it depends on your configuration. A lot of the benefits I described can largely be achieved with a Wifi 6 router, because Wifi 6E brings little to no benefit to Wifi 6 devices. Wifi 6E is much faster than Wifi 6, but its limited range hurts it badly, and even adding antennas won't improve the range much. The best placement for a Wifi 6E router is in the living room or another open area, to get the most out of it. The important thing is to make sure you get the most antennas for the 5GHz band and, if you have devices with low bars, more antennas for 2.4GHz as well, but remember that 2.4GHz is very slow. In some cases, a dual band Wifi 6 router could be better than a triple band Wifi 6E one, depending on the antenna configuration. So it depends on your use case, but I wouldn't buy a router solely for Wifi 6E unless you have a small house or live in an apartment.
