Soundwave said:

Pemalite said:

I would place two Tegra X2s at roughly Xbox One levels of imagery, to be honest, but at 720p resolution.

I just want answers, clear and concise ones, from Nintendo with a full NX reveal already. Haha

|

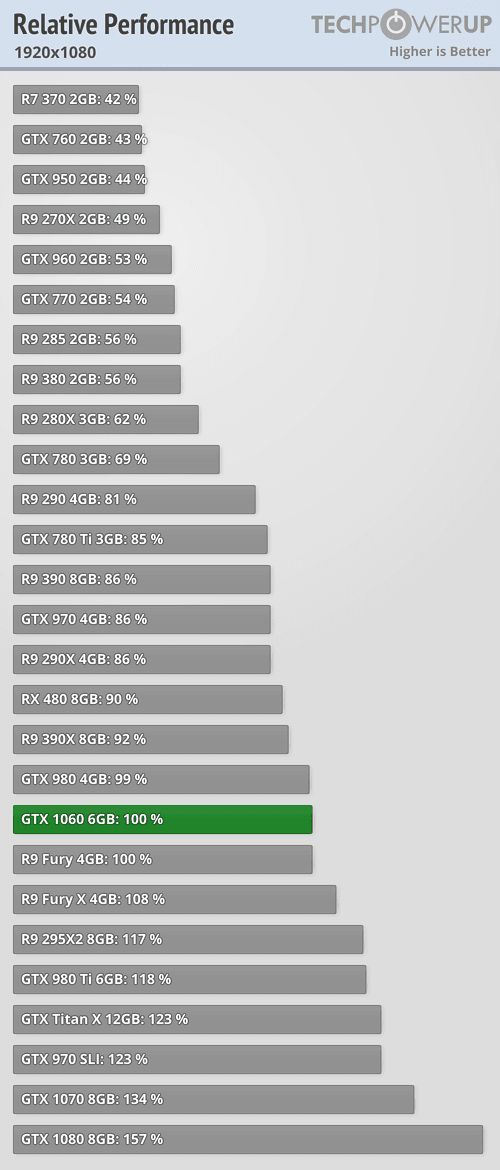

I know it's not the be-all and end-all, but wouldn't two Tegra X2s in unison come to about 1.25 TFLOPS of performance? And if we assume Nvidia's floating point performance is generally worth 30% more per flop, that would put the two at 1.625 TFLOPS in AMD terms.

Maybe if they added, say, 24-32MB of high-speed eDRAM onto the SCD version of the TX2 ... would that change things? That would kinda cancel out the XB1's memory bandwidth advantage.

Where would it be lacking vs an XB1 in that scenario? Surely two X2 units would boost things like the poly count and fillrate?

|

That's using PC numbers, with DX11.

On consoles the difference will be smaller, as it will be with a different API, like DX12 or Vulkan.

The "avg" flop-for-flop advantage will be a lot smaller in a console. I'd be surprised if it was more than 10-15%.
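
To put rough numbers on that, here is a quick back-of-the-envelope sketch. It assumes the 1.25 TFLOPS figure for two X2s from the quoted post, and the function name is just made up for illustration; it only rescales the raw figure by an assumed per-flop advantage, nothing more.

```python
def amd_equivalent_tflops(raw_tflops, nvidia_advantage):
    """Scale raw Nvidia TFLOPS by an assumed per-flop advantage over AMD."""
    return raw_tflops * (1.0 + nvidia_advantage)


# 1.25 TFLOPS for two Tegra X2s is the figure from the quoted post,
# not a measured number.
two_x2 = 1.25

# 30% is the PC/DX11 assumption from the quote; 10-15% is my console guess.
for advantage in (0.30, 0.15, 0.10):
    equivalent = amd_equivalent_tflops(two_x2, advantage)
    print(f"{advantage:.0%} advantage: {equivalent:.3f} TFLOPS in AMD terms")
```

So instead of 1.625 TFLOPS at 30%, a 10-15% advantage lands at roughly 1.375 to 1.44 TFLOPS in AMD terms.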

I think Pemalite is correct in saying that 2 x Tegra X2 = roughly Xbox One level of performance, maybe limited by memory bandwidth and so running at a lower resolution.

Also, if you're going that route, your hypothetical 2x Tegra X2 system will be more expensive to make than the Xbox One.

The reason Sony and Microsoft went with AMD is that you can get everything in 1 chip.

That cuts down on price, plus AMD has designed its CPU and GPU to be like Lego bricks.

It costs them efficiency, but it means they can easily build basically anything you want with them.

That's why they do custom SoCs: they designed the CPU and GPU to be able to function like that.

Nvidia doesn't. They can't just magically make a new chip that's doubled up.

They could use 2 in parallel, like SLI, for the GPU part... but that usually cuts the efficiency of the GPU.

Suddenly that Gflop-to-Gflop advantage Nvidia has, the one you're talking about, becomes a disadvantage.

And on top of all that, it would mean the NX was more expensive than the Xbox One, for less performance.

Do you want Nintendo to launch a $400 handheld hybrid?

When Sony will release a $249 PS4 slim soon that's even more powerful? I don't think Nintendo will use 2 chips.

It will be 1 Tegra X2, and that will be it. But hopefully priced around $199-249.