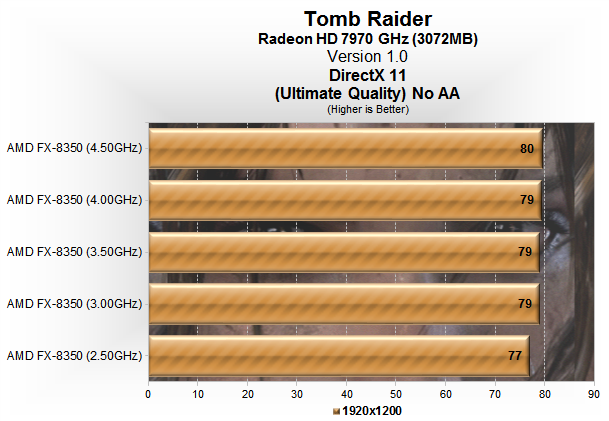

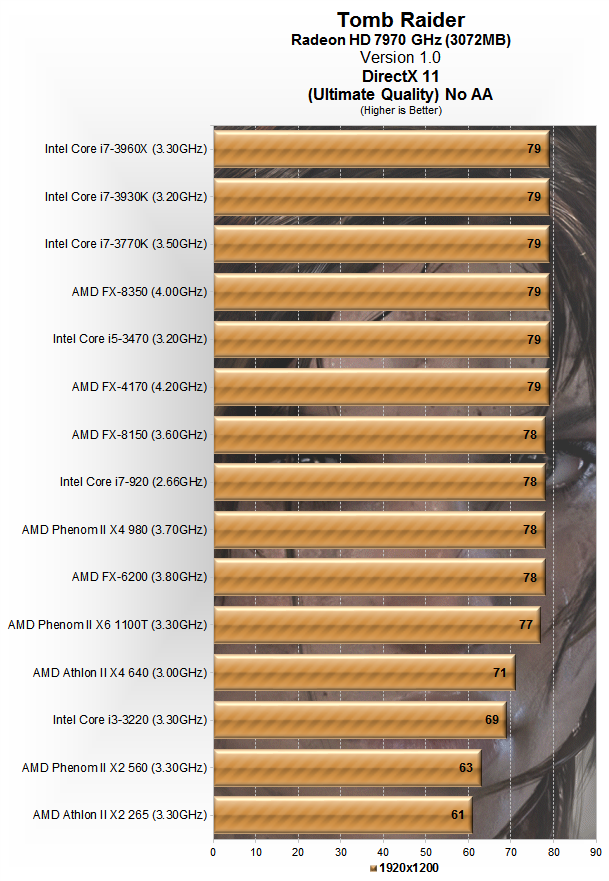

| DarkTemplar said:

Modern games are not CPU demanding. For instance, if you have code with a lot of floating-point operations and it is running on the CPU, you are probably wasting resources, since the GPU is much better suited to this type of operation (consequently the game becomes even less CPU demanding). This is why I also think that 4 Jaguar cores and even 3 PPC 750 cores may be more than enough for next-gen games (btw, notice that the Wii U CPU was made for lazy developers, since one of its cores has more cache, enabling single-threaded code to run quite well on it). And finally, since Sony and MS decided to use only half of the CPU (4 cores) for games, this may be a hint at an OS with lots of things running in the background (perhaps they are working on even more features for the OS than what we know so far). |

Unfortunately, not all floating-point math can be done on the GPU. Sometimes it has to be done serially, interleaved with other tasks, typically because each step depends on the result of the one before it, and that is what the CPU excels at. The GPU, however, kicks things into another gear when it comes to highly parallel work.
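To make the serial-versus-parallel point concrete, here's a rough Python sketch. The function names and the toy spring force are made up for illustration and don't come from any real engine; it only shows the shape of the two kinds of work.

```python
def integrate_serial(x0, v0, dt, steps):
    """Serial floating-point work: every step reads the previous step's
    x and v, so the iterations cannot run side by side."""
    x, v = x0, v0
    for _ in range(steps):
        a = -x        # hypothetical spring force pulling x back towards 0
        v += a * dt   # new velocity depends on the acceleration just computed
        x += v * dt   # new position depends on the velocity just updated
    return x, v


def scale_parallel(values, factor):
    """Parallel-friendly floating-point work: each element is independent,
    so the multiplications could be spread across many GPU threads."""
    return [value * factor for value in values]
```

The first loop is the kind of thing that tends to stay on a CPU core, while the second is the kind of thing you'd hand to the GPU once the list gets big enough to be worth the transfer.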

But you are right that large chunks of the game, such as physics, can be offloaded onto the GPU; that's really up to the developer to choose.

For example, do they go with amazing physics, or with lighting so real it's beyond stupid?

Conversely, if they do, say, physics on the GPU, a CPU core or two can pick up some GPU-style tasks, such as improving frame buffer effects or even Morphological Anti-Aliasing.

The previous generation was fairly restrictive in that regard: if you didn't do things a certain way, you got poor performance. This time around, though, it's a different ball game.

--::{PC Gaming Master Race}::--